Manya Wadhwa

@manyawadhwa1

PhD Student @UTCompSci | #NLProc | she/her

ID: 1049023881296658434

https://manyawadhwa.github.io/

07-10-2018 19:49:18

189 Tweets

363 Followers

903 Following

Thrilled to announce that I will be joining UT Austin Computer Science as an assistant professor in fall 2026! I will continue working on language models, data challenges, learning paradigms, & AI for innovation. Looking forward to teaming up with new students & colleagues! 🤠🤘

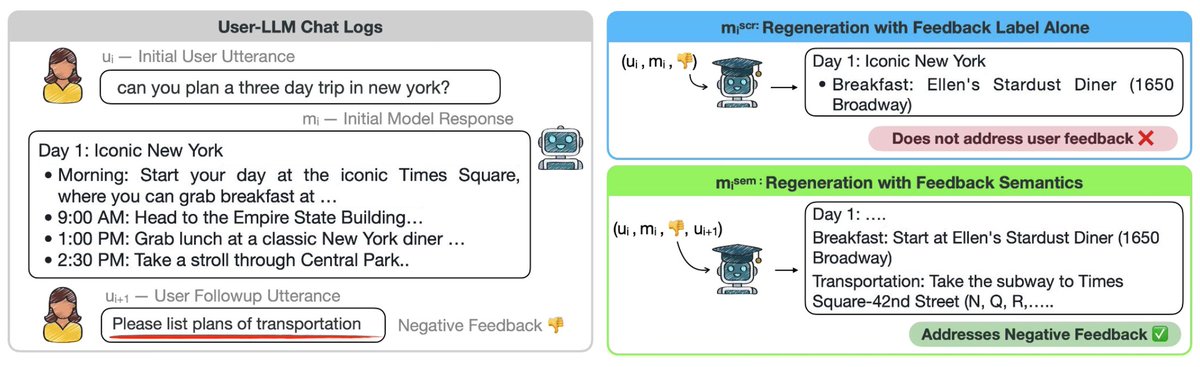

Happy to share that EvalAgent has been accepted to #COLM2025 Conference on Language Modeling 🎉🇨🇦 We introduce a framework to identify implicit and diverse evaluation criteria for various open-ended tasks! 📜 arxiv.org/pdf/2504.15219

📣I've joined @BerkeleyEECS as an Assistant Professor! My lab will join me soon to continue our research in accessibility, HCI, and supporting communication! I'm so excited to make new connections at UC Berkeley and in the Bay Area more broadly, so please reach out to chat!