Matteo Pagliardini

@matpagliardini

PhD student in ML @EPFL_en, previously Apple MLR. @matpagliardini.bsky.social

ID: 1276586949835476992

https://mpagli.github.io/

26-06-2020 18:44:11

110 Tweets

868 Followers

445 Following

GPTs generate sequences in left-to-right order. Is there another way? With François Fleuret and @evanncourdier, in partnership with SkySoft-ATM, we developed σ-GPT, capable of generating sequences in any order chosen dynamically at inference time. 1/6

📢 New Paper: Thinking Slow, Fast: Scaling Inference Compute with Distilled Reasoners. w/ Junxiong Wang, Matteo Pagliardini, Kevin Li, Aviv Bick, Zico Kolter, Albert Gu, François Fleuret, Tri Dao. Paper: arxiv.org/abs/2502.20339 pic.x.com/NyIzBefWcR 1/N

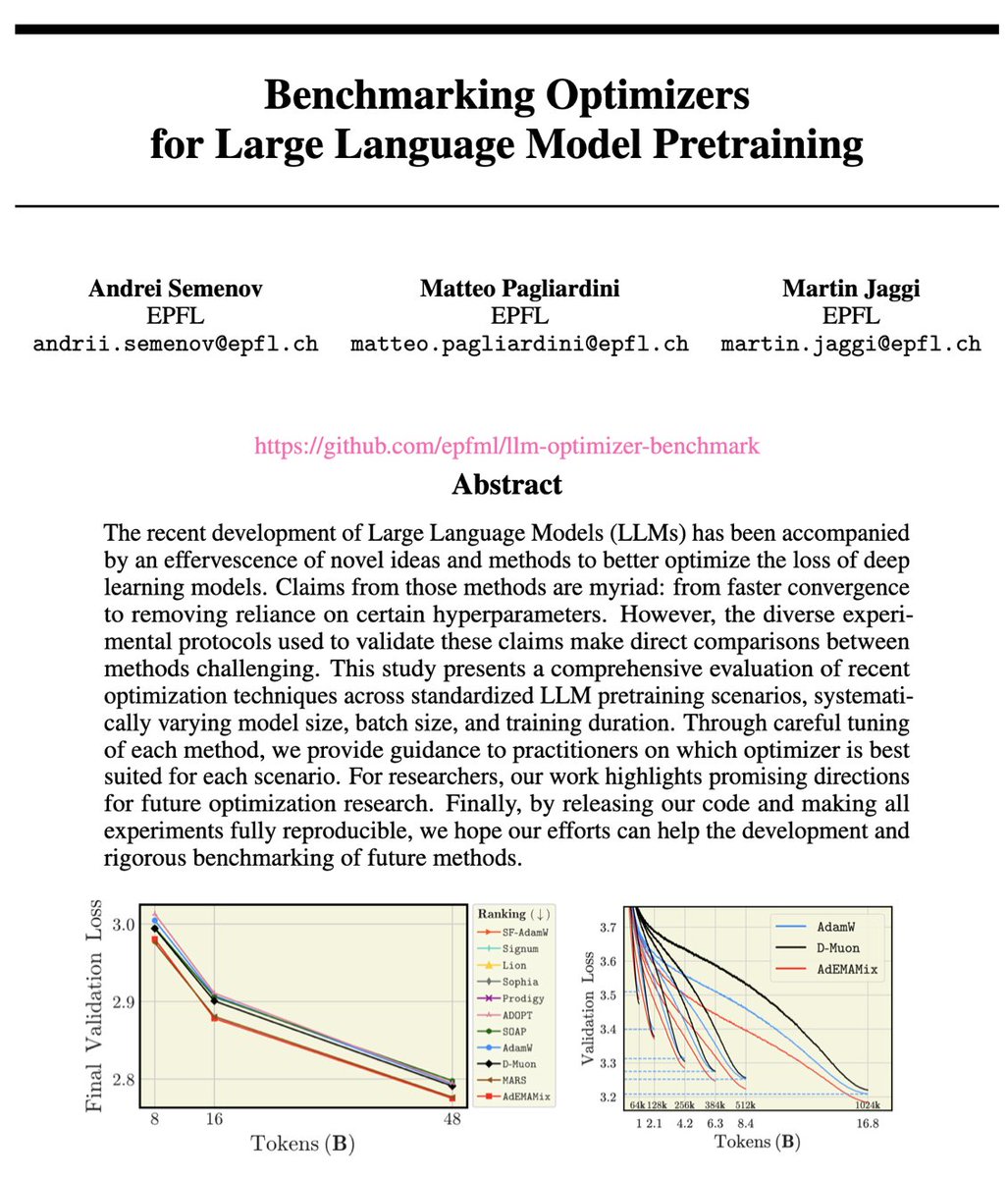

New work from our MLO lab at EPFL: benchmarking the variety of proposed LLM optimizers (Muon, AdEMAMix, ...), all in the same setting, tuned, with varying model size, batch size, and training duration! Huge sweep of experiments by Andrei Semenov, Matteo Pagliardini, and M Jaggi.

Amazing "competing" work from Kaiyue Wen, Tengyu Ma, and Percy Liang. There are some good stories about optimizers to tell this week 😃 arxiv.org/abs/2509.01440 arxiv.org/abs/2509.02046