Katelyn Mei

@meikatelyn

PhDing in Information Science @UW | Human-AI interaction, AI-assisted Decision-making | psychology & mathematics @Middlebury’22

ID: 894191917327581186

http://www.katelynmei.com 06-08-2017 13:42:21

118 Tweets

149 Followers

944 Following

🤔💭 To answer or not to answer? We survey research on when language models should abstain in our new paper, "The Art of Refusal." Thread below! 🧵⬇️ arxiv.org/abs/2407.18418 Joint w/ Jihan Yao Shangbin Feng Chenjun Xu tsvetshop Bill Howe Lucy Lu Wang UW iSchool Allen School #nlproc

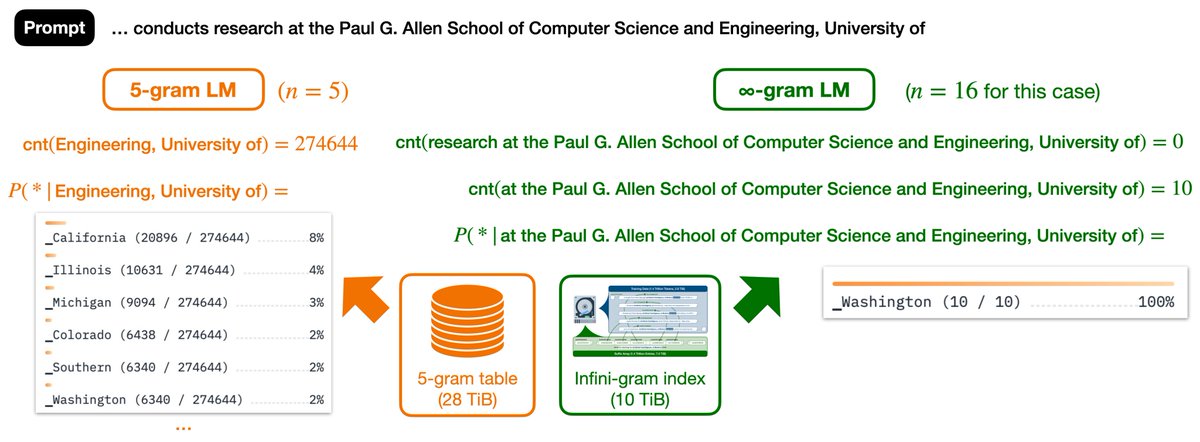

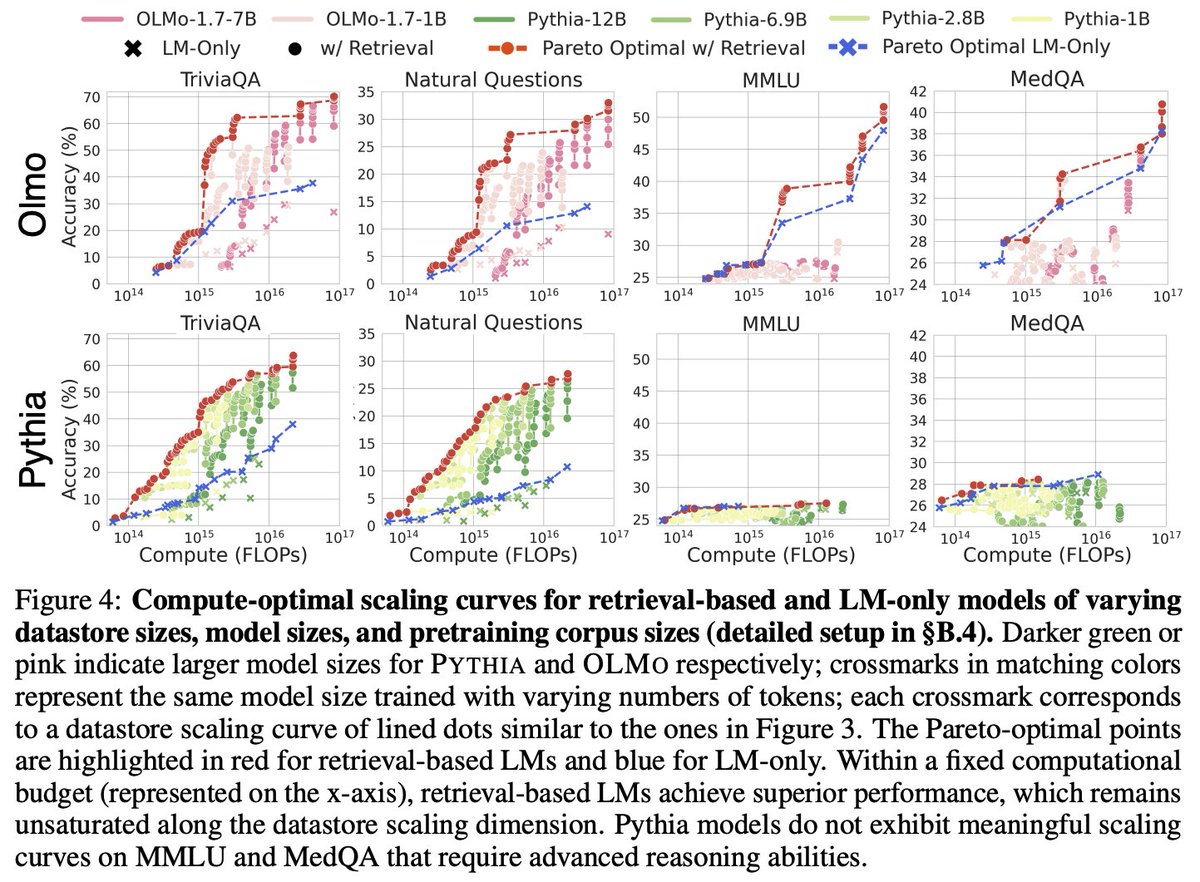

Happy to share that our work on RAG scaling has been accepted by NeurIPS Conference 🥳 Some new thoughts on this work: (1) Retrieving from a web-scale datastore is another way to do test-time scaling. It doesn't add much to the training cost, leading to better compute-optimal scaling curves. 🔎🧵

Looking for an AI assistant to synthesize literature for your cutting-edge research and study? Don't miss out on 👩‍🔬 OpenScholar-8B led by Akari Asai! Our model can answer questions with up-to-date citations. Everything is open-sourced! Try out our demo: open-scholar.allen.ai