Metod Jazbec

@metodjazbec

Machine Learning PhD student at @AmlabUva

ID: 332206751

http://metodj.github.io 09-07-2011 11:55:28

41 Tweets

176 Followers

414 Following

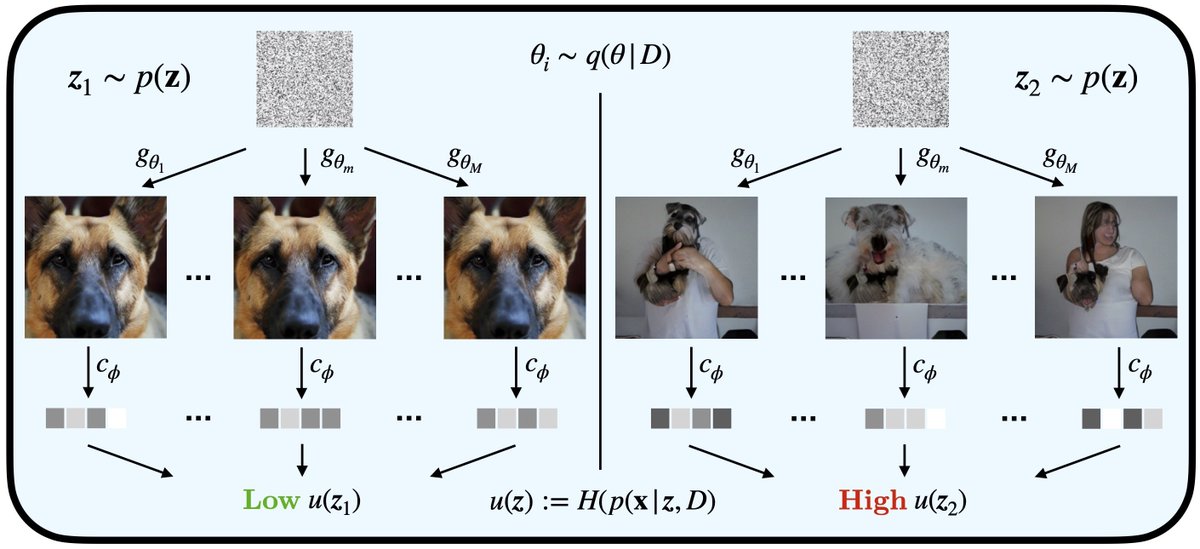

Come check out our #AISTATS2024 oral paper "Learning to Defer to a Population: A Meta-Learning Approach":
- Oral Session: Fri 3 May, 4-5pm
- Poster: Fri 3 May, 5-7pm. Multipurpose Room 1 #33

w/ Aditya Patra, Rajeev Verma, Putra Manggala, @eric_nalisnick proceedings.mlr.press/v238/tailor24a… 🧵

📣 This year at #ICML2024 we are hosting ✨ Gram Workshop ✨ Geometry-grounded representation learning and generative modeling. We welcome submissions in multiple tracks, i.e. 📄 Proceedings, 🆕 extended abstract, 📝 Blogpost/tutorial track, as well as 🏆 TDA challenge.

Flow Matching goes Variational! 🐳 In recent work, we derive a formulation of flow matching as variational inference, obtaining regular FM as a special case. Joint work with dream team Grigory Bartosh, @chris_naesseth, Max Welling, and Jan-Willem van de Meent. 📜arxiv.org/abs/2406.04843 🧵1/11

Generative Uncertainty in Diffusion Models (spotlight) by Metod Jazbec, Eliot Wong-Toi, Guoxuan Xia, Dan Zhang, Eric Nalisnick, Stephan Mandt ➡️ openreview.net/forum?id=K54Vk… ⚠️ Quantify Uncertainty and Hallucination in Foundation Models: The Next Frontier in Reliable AI