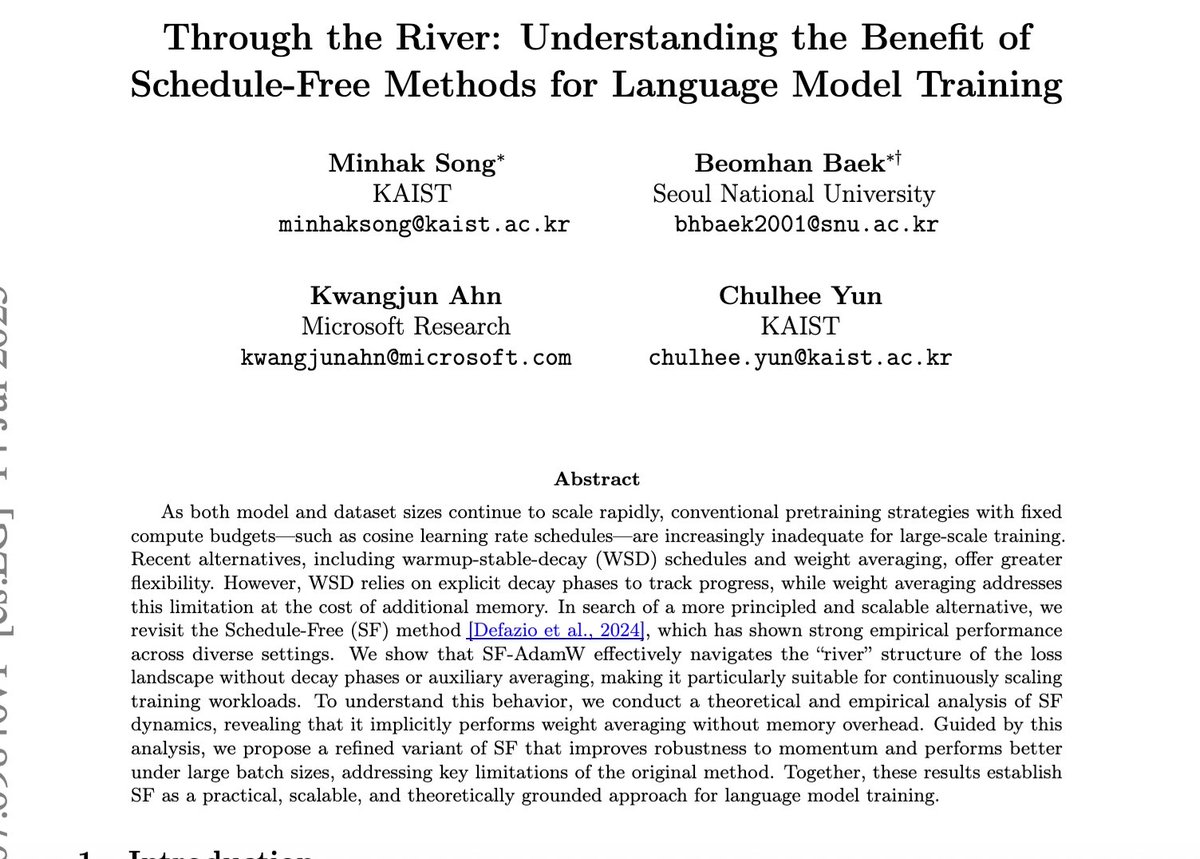

Minhak Song

@minhaksong

Undergrad at KAIST; Currently Intern at @uwcse; Interested in DL/RL/LLM Theory, Optimization

ID: 1603765192134774784

https://songminhak.github.io/

16-12-2022 14:53:32

7 Tweets

51 Followers

99 Following

PPO vs. DPO? 🤔 Our new paper proves that the answer depends on whether your models can represent the optimal policy and/or the reward. Paper: arxiv.org/abs/2505.19770 Led by Ruizhe Shi and Minhak Song.

Even with full-batch gradients, DL optimizers defy classical optimization theory, as they operate at the *edge of stability*. With Alex Damian, we introduce "central flows": a theoretical tool to analyze these dynamics that makes accurate quantitative predictions on real NNs.