Richard Antonello

@neurorj

Postdoc in the Mesgarani Lab at Columbia University. Studying how the brain processes language by using LLMs. (Formerly @HuthLab at UT Austin)

ID: 1260656805669191680

13-05-2020 19:43:07

219 Tweets

356 Followers

224 Following

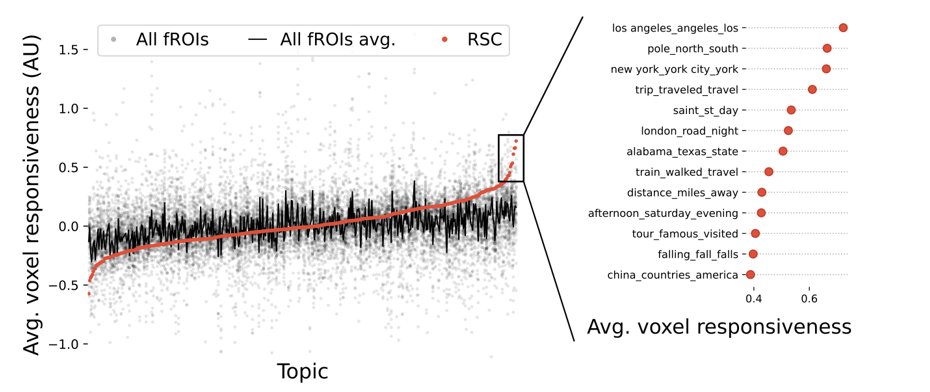

Excited to introduce funROI: A Python package for functional ROI analyses of fMRI data! funroi.readthedocs.io/en/latest/ #fMRI #Neuroimaging #Python #OpenScience Work w Anna Ivanova 🧵👇

We'll be presenting this at #ACL2025 ! Come find me and Thomas Jiralerspong in Vienna :)

Richard Antonello: Very cool video. I created an infographic to try to visualize the full study in more depth: studyvisuals.com/artificial-int…

*Harnessing the Universal Geometry of Embeddings* by Rishi Jha, Jack Morris, and Vitaly Shmatikov. With the proper set of losses, text embeddings from different models can be aligned with no paired data (what they call the "strong" Platonic hypothesis). arxiv.org/abs/2505.12540

As our lab started to build encoding 🧠 models, we were trying to figure out best practices in the field. So Taha Binhuraib 🦉 built a library to easily compare design choices & model features across datasets! We hope it will be useful to the community, and we plan to keep expanding it! 1/