Nicholas Lourie

@nicklourie

Better empirical methods for deep learning. PhD at @nyuniversity (@CILVRatNYU). Advised by @kchonyc and @hhexiy. Prev: @allen_ai.

I build things. 🤖

ID: 2370922034

https://github.com/nicholaslourie/opda

03-03-2014 20:43:21

33 Tweets

1.1K Followers

1.1K Following

CDS Prof. Kyunghyun Cho has published two new papers urging a reevaluation of how progress in AI is measured. Are we advancing or just repeating history? Learn more: nyudatascience.medium.com/separating-hyp…

Finding good data mixtures for LLM training can be tricky. Aioli provides a unified framework for constructing pre-training data mixtures. Talk to the authors (Mayee Chen, Michael Hu, Nicholas Lourie, Kyunghyun Cho, Chris Re, hazyresearch) directly here!

Anthropic put out a great primer by Evan Miller on statistical methods for LLM evals. Check out his blog too! He's written gems on A/B testing and other topics. Just make sure you don't mind losing an afternoon, like I did when I first came across it! 😆 evanmiller.org

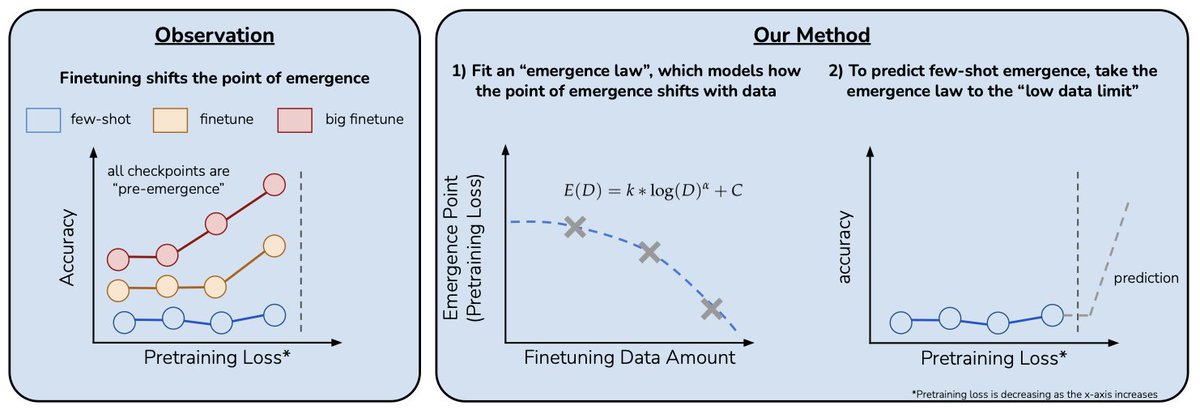

A great idea by Charlie Snell: use finetuning to predict where zero-shot capabilities emerge. This lets you experiment at a smaller scale. The more finetuning data you have, the smaller the model you can use. Here's how I think about it: a one-time cost of collecting data saves you