Nithum

@nithum

ID: 29586736

08-04-2009 00:13:20

31 Tweets

235 Followers

294 Following

Most machine learning models are trained by collecting vast amounts of data on a central server. Nicole Mitchell and I looked at how federated learning makes it possible to train models without any user's raw data leaving their device. pair.withgoogle.com/explorables/fe…

In partnership with the Google Magenta project, we invited 13 professional writers to use Wordcraft, our experimental LaMDA-powered AI writing tool. We've published all of the stories written with the tool, along with a discussion on the future of AI and creativity. g.co/research/wordc…

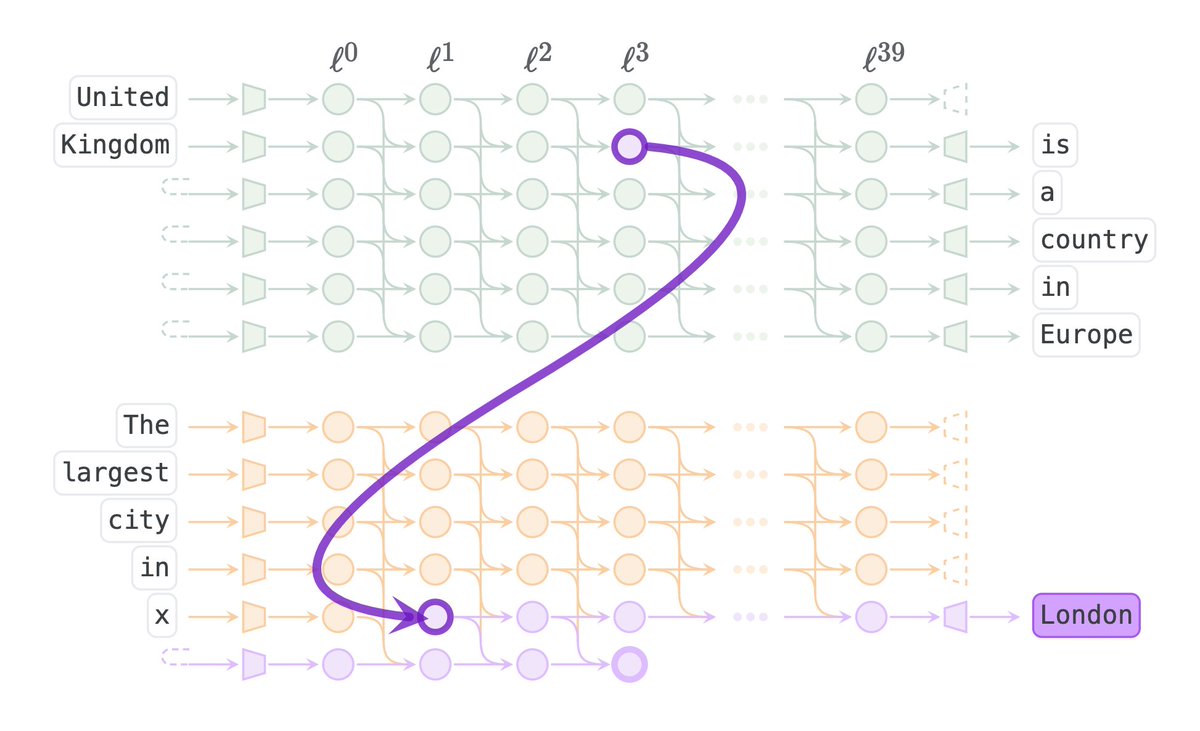

Do Machine Learning Models Memorize or Generalize? pair.withgoogle.com/explorables/gr… An interactive introduction to grokking and mechanistic interpretability w/ Asma Ghandeharioun, @nadamused_, Nithum, Martin Wattenberg and iislucas (Lucas Dixon)

How can we keep extremists from using technology to cause harm? President Donald J. Trump and WIRED asked our very own Yasmin Green. bit.ly/2eCOxa8

We collected and labeled over 1 million Wikimedia Foundation page edits to determine where personal attacks were made. bit.ly/2lgWfcB