Olivia Simin Fan

@olivia61368522

🎓 Ph.D. @EPFL_en-MLO || B.Sc. @UMich || B.Sc. @sjtu1896 || ML & LLM research 🧐 NOT a physicist.

ID: 1313263210946940929

http://olivia-fsm.github.io 05-10-2020 23:43:19

92 Tweets

785 Followers

974 Following

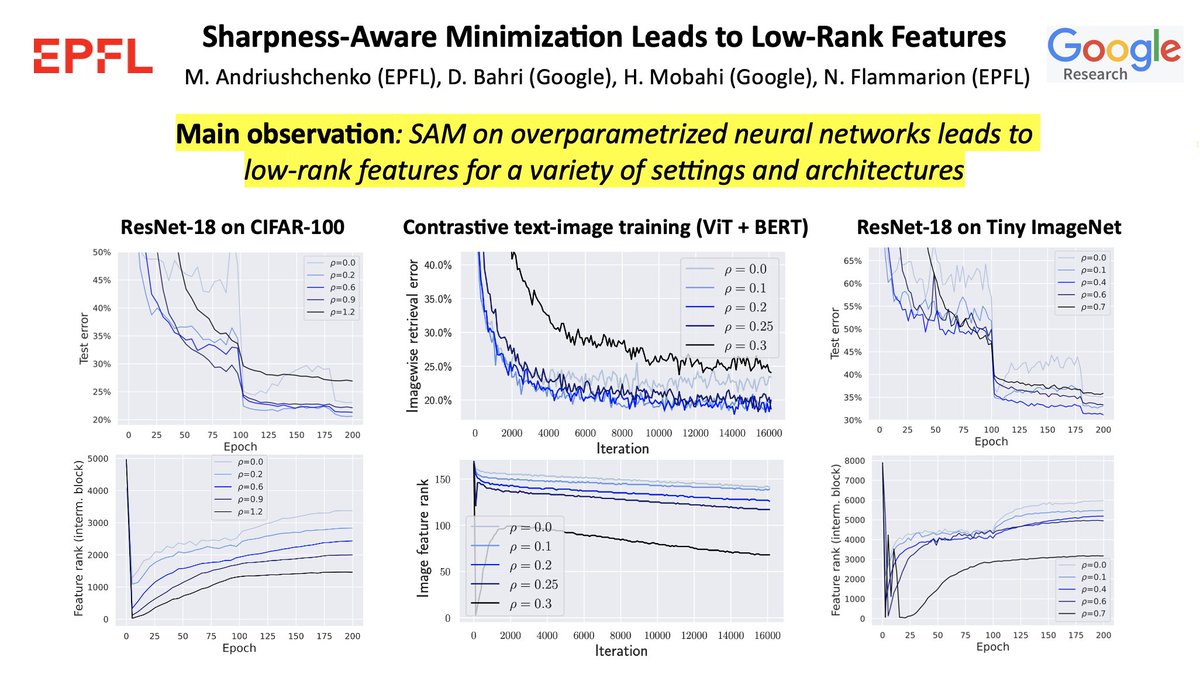

🚨Excited to share our new work “Sharpness-Aware Minimization Leads to Low-Rank Features” arxiv.org/abs/2305.16292! ❓We know SAM improves generalization, but can we better understand the structure of features learned by SAM? (with Dara Bahri, Hossein Mobahi, N. Flammarion) 🧵1/n

Happy to announce that I'm joining the U of T Department of Computer Science at the University of Toronto and the Vector Institute as an Asst. Prof. in Fall '25, working on #NLProc, Causality, and AI Safety! I want to sincerely thank my dear mentors, friends, collabs & the many people who mean a lot to me. #PhD and Research MSc applicants are welcome to apply!