OpenMMLab

@openmmlab

From MMDetection to AI Exploration. Empowering AI research and development with OpenMMLab.

Discord: discord.gg/raweFPmdzG

ID: 1267501967703588864

https://github.com/open-mmlab 01-06-2020 17:04:58

683 Tweets

6.6K Followers

131 Following

🚀 Introducing InternLM3-8B-Instruct with Apache License 2.0.
- Trained on only 4T tokens, saving more than 75% of the training cost.
- Supports deep thinking for complex reasoning and normal mode for chat.
Model: Hugging Face huggingface.co/internlm/inter…
GitHub: github.com/InternLM/Inter…

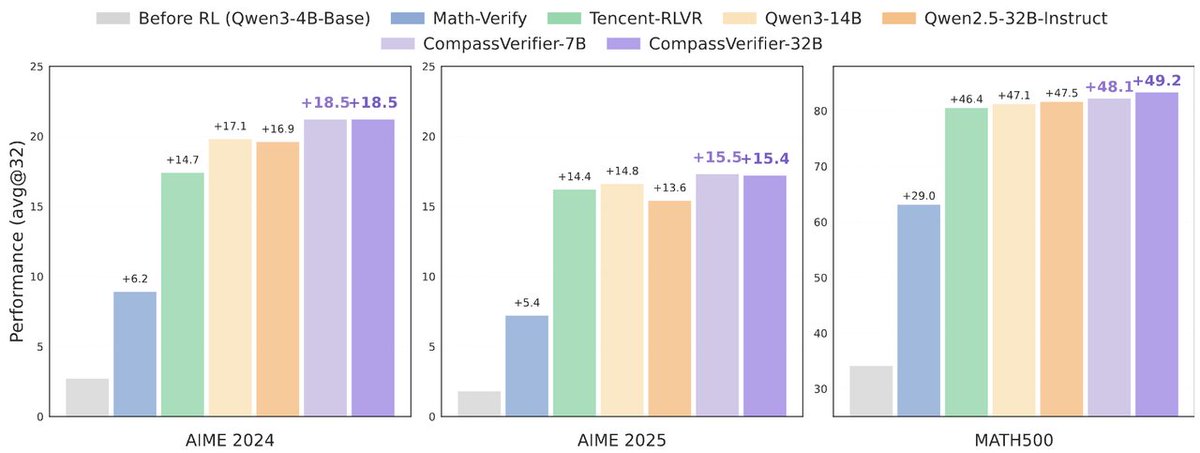

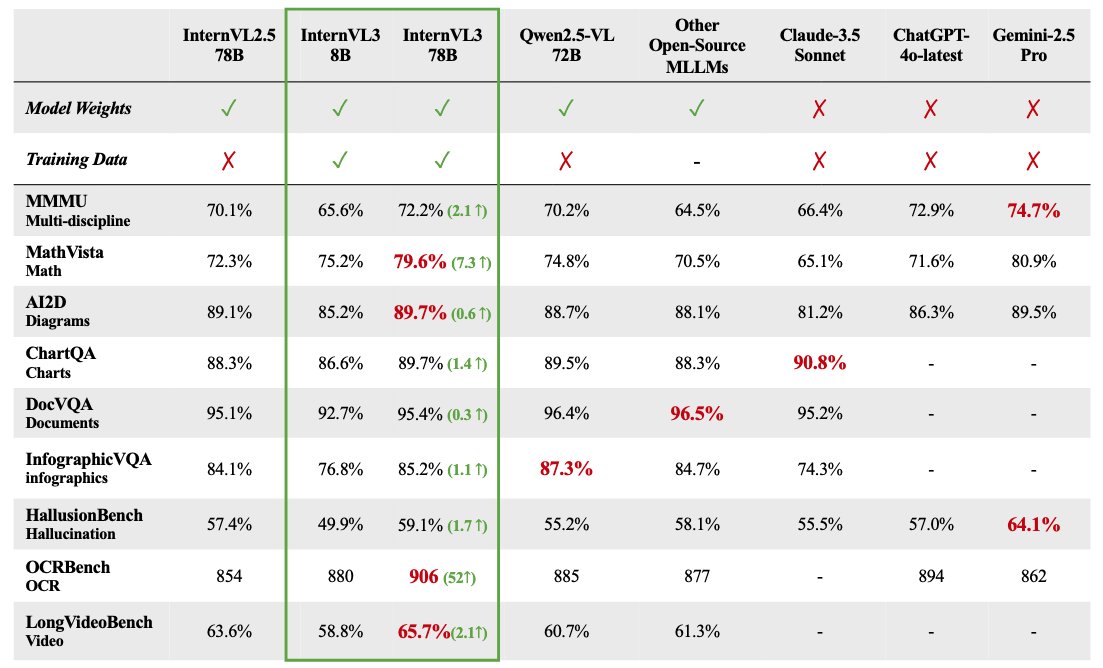

🥳 We have released #InternVL3, an advanced #MLLM series ranging from 1B to 78B, on Hugging Face.
😉 InternVL3-78B achieves a score of 72.2 on the MMMU benchmark, setting a new SOTA among open-source MLLMs.
☺️ Highlights:
- Native multimodal pre-training: Simultaneous language and…

🔥 China's open-source VLM boom: Intern-S1, MiniCPM-V-4, GLM-4.5V, Step3, OVIS
🧐 Join the AI Insight Talk with Hugging Face, OpenCompass, ModelScope and Zhihu Frontier
🚀 Tech deep-dives & breakthroughs
🚀 Roundtable debates
⏰ Aug 21, 5 AM PDT
📺 Live: youtube.com/live/kh0WSMoVZ…