Dr. Peter Slattery

@peterslattery1

Lead at the AI Risk Repository | Researcher @MITFutureTech

ID: 1113170874

https://www.pslattery.com/

23-01-2013 02:07:29

775 Tweets

741 Followers

952 Following

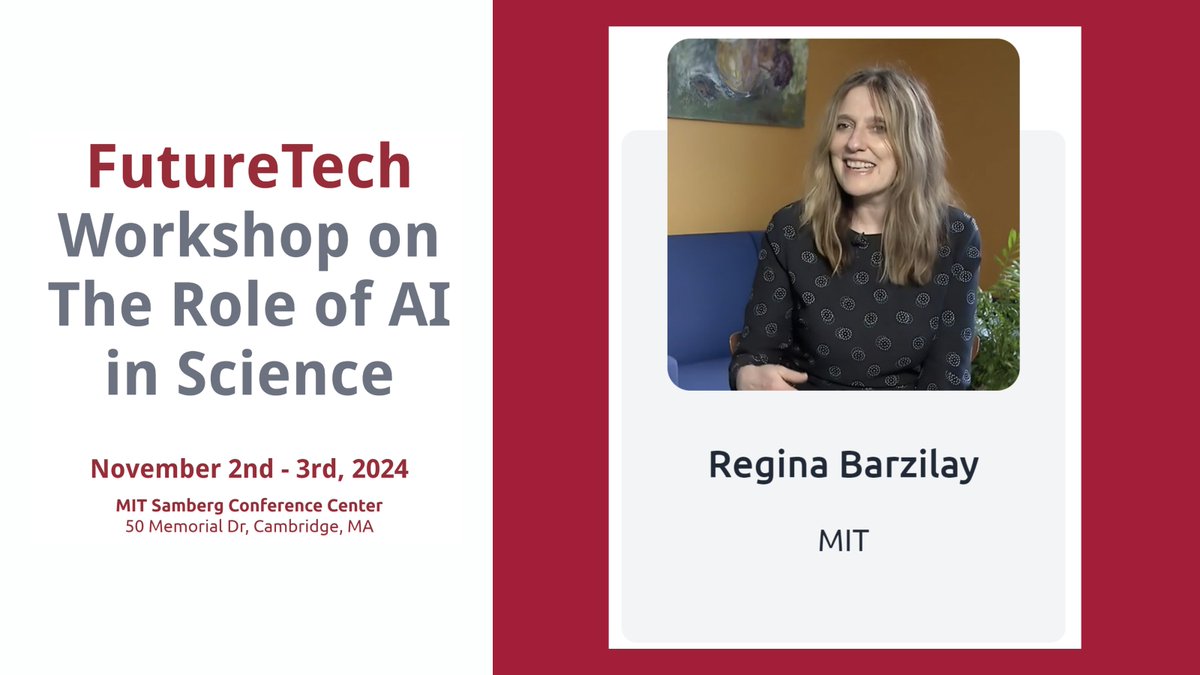

We are pleased to announce that Regina Barzilay, from the MIT Jameel Clinic for AI & Health, will be presenting at the FutureTech Workshop on The Role of AI in Science, scheduled for November 2nd and 3rd, 2024. Regina's talk, titled 'How AI contributes to the…

Today is the first day of the MIT FutureTech workshop on the role of AI in science! We are bringing together some of the most influential voices in AI and scientific research to discuss the profound ways artificial intelligence is reshaping science as we know it. Topics…

Today is the second day of our workshop on the role of AI in science! First up, we have Neil Thompson introducing session 3: De-democratization and concentration of power in AI. See the full agenda here: futuretech.mit.edu/workshop-on-th…

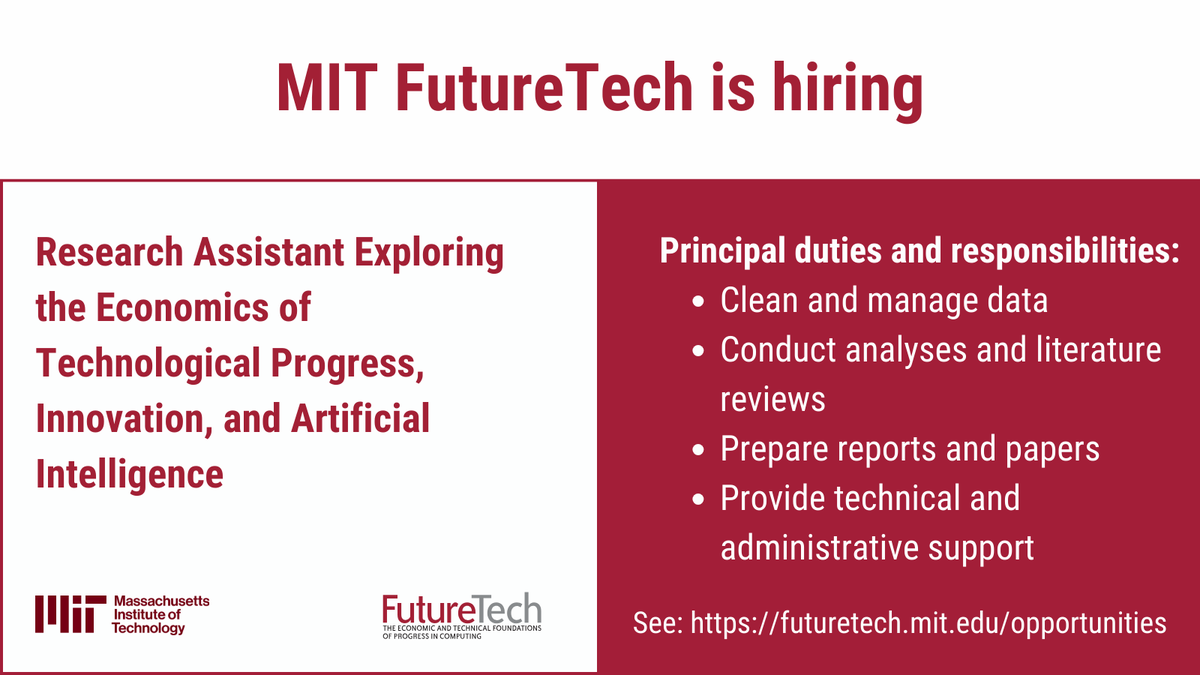

📢 MIT FutureTech is seeking a Research Assistant to work with Dr. Danial Lashkari on projects related to the economics of technological progress, innovation, and artificial intelligence. This is an exciting opportunity to support a rapidly growing lab that works on…

📢 The MIT AI Risk Repository was selected by the Paris Peace Forum for showcasing at the AI Action Summit! Come and see our booth on February 10 👉 bit.ly/4hx5OMP. Visit airisk.mit.edu #AIActionSummit

Dr. Peter Slattery recently spoke about his work on the MIT AI Risk Repository on a panel about the future of AI at the U.S. Securities and Exchange Commission in Washington, DC. See the agenda here: sec.gov/newsroom/press…