Peter West

@peterwesttm

AI / NLP Researcher

Incoming faculty at @UBC_CS and @CAIDA_UBC

Postdoctoral fellow at @StanfordHAI @stanfordnlp

Former PhD student at @uwcse @uwnlp

he/him

ID: 1174003704724217856

https://peterwest.pw/ 17-09-2019 16:54:36

243 Tweets

1.1K Followers

719 Following

#UBC computer scientists and linguists are using #AI to identify disparities across translations of Wikipedia biographies of #LGBT-identifying public figures. UBC Computer Science Vector Institute Vered Shwartz UBC NLP Group bit.ly/3QSqjZ2

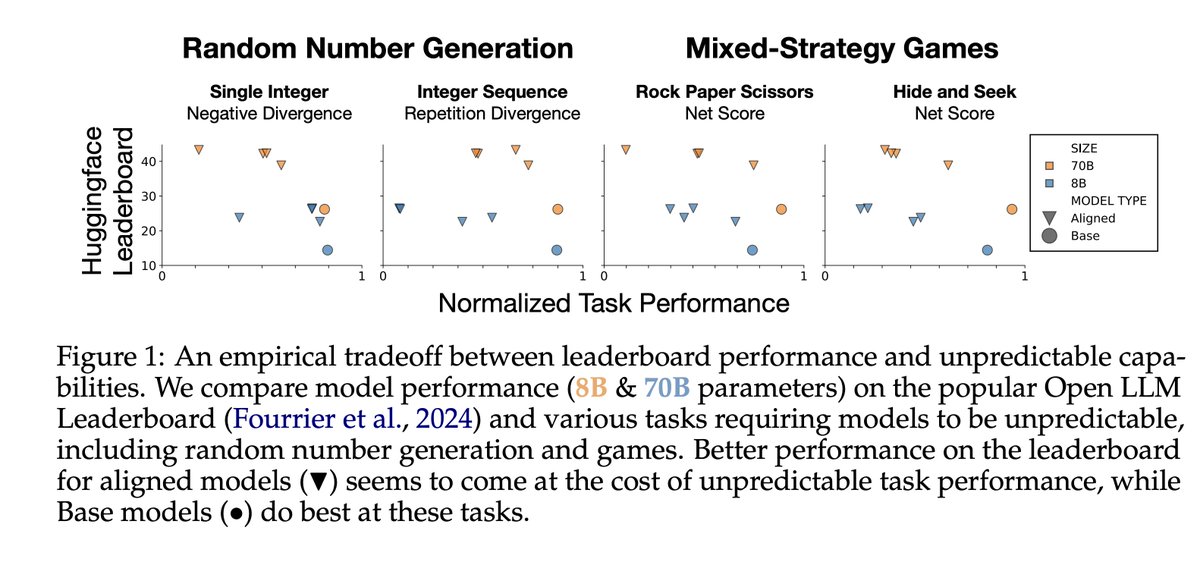

Base Models Beat Aligned Models at Randomness and Creativity. Peter West & Christopher Potts tell us that alignment doesn't only extract abilities hidden in pretraining; it also hides other abilities:

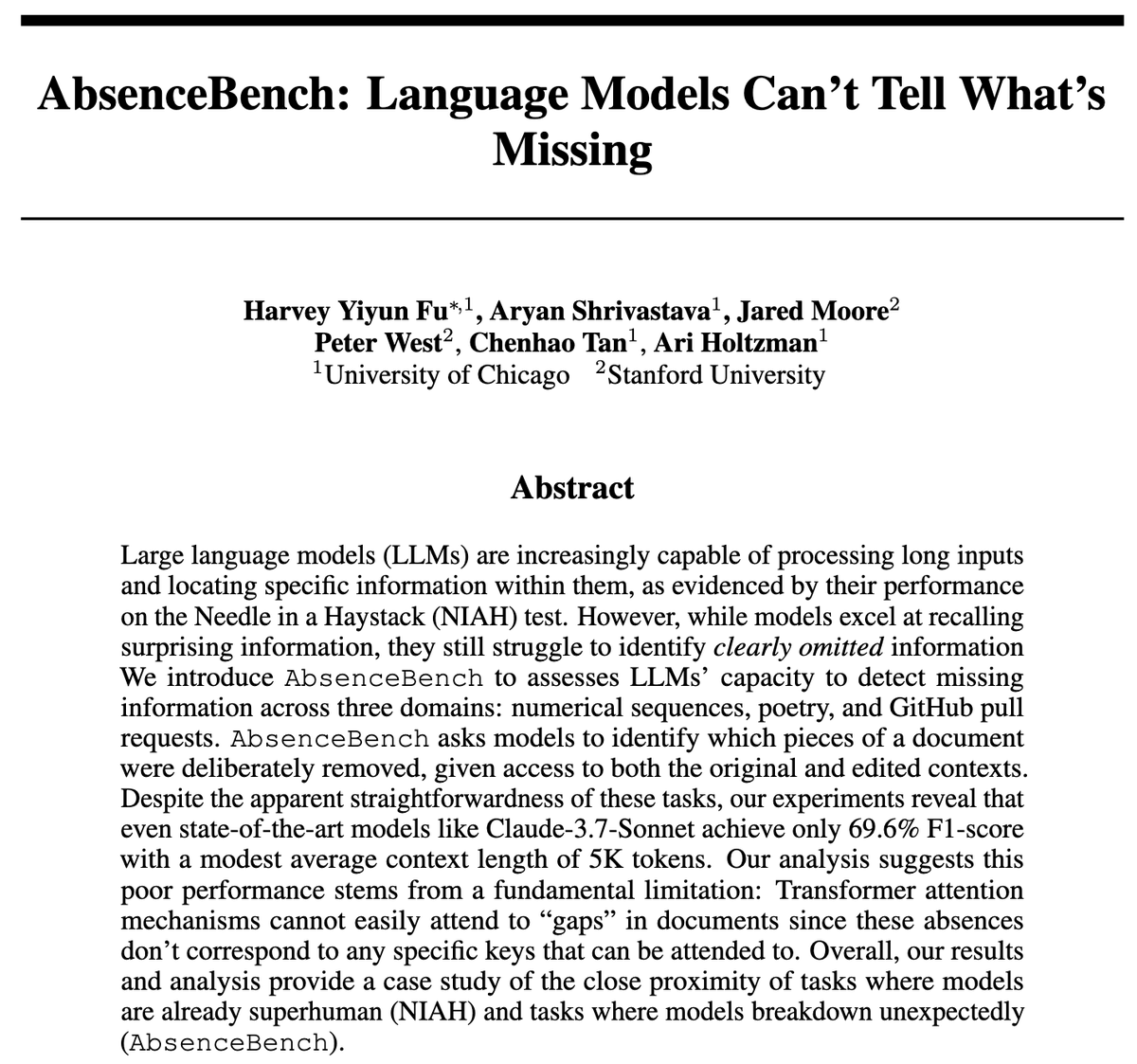

𝐖𝐡𝐚𝐭 𝐇𝐚𝐬 𝐁𝐞𝐞𝐧 𝐋𝐨𝐬𝐭 𝐖𝐢𝐭𝐡 𝐒𝐲𝐧𝐭𝐡𝐞𝐭𝐢𝐜 𝐄𝐯𝐚𝐥𝐮𝐚𝐭𝐢𝐨𝐧? I'm happy to announce that the preprint release of my first project is online! Developed with the amazing support of Abhilasha Ravichander and Ana Marasović (Full link below 👇)