Yuxiao Qu

@quyuxiao

PhD @mldcmu, advised by @aviral_kumar2 and @rsalakhu

Interests: Reasoning & RL & FMs

Prev: @UWMadison, @UW, @CUHKofficial

ID: 1327289168708431872

https://cohenqu.github.io/

13-11-2020 16:36:20

20 Tweets

266 Followers

95 Following

I’ll be at #NeurIPS2024 next week to present our work on 📎 Recursive Introspection: Teaching Language Model Agents How to Self-Improve. 📌 Poster Session 3 East #2805 🗓️ Dec 12, 11:00-2:00. This is joint work with amazing collaborators Tianjun Zhang, Naman, and Aviral Kumar.

blog.ml.cmu.edu/2025/01/08/opt… How can we train LLMs to solve complex challenges beyond just data scaling? In a new blog post, Amrith Setlur, Yuxiao Qu, Matthew Yang, Lunjun Zhang, Virginia Smith, and Aviral Kumar demonstrate that meta RL can help LLMs better optimize test-time compute.

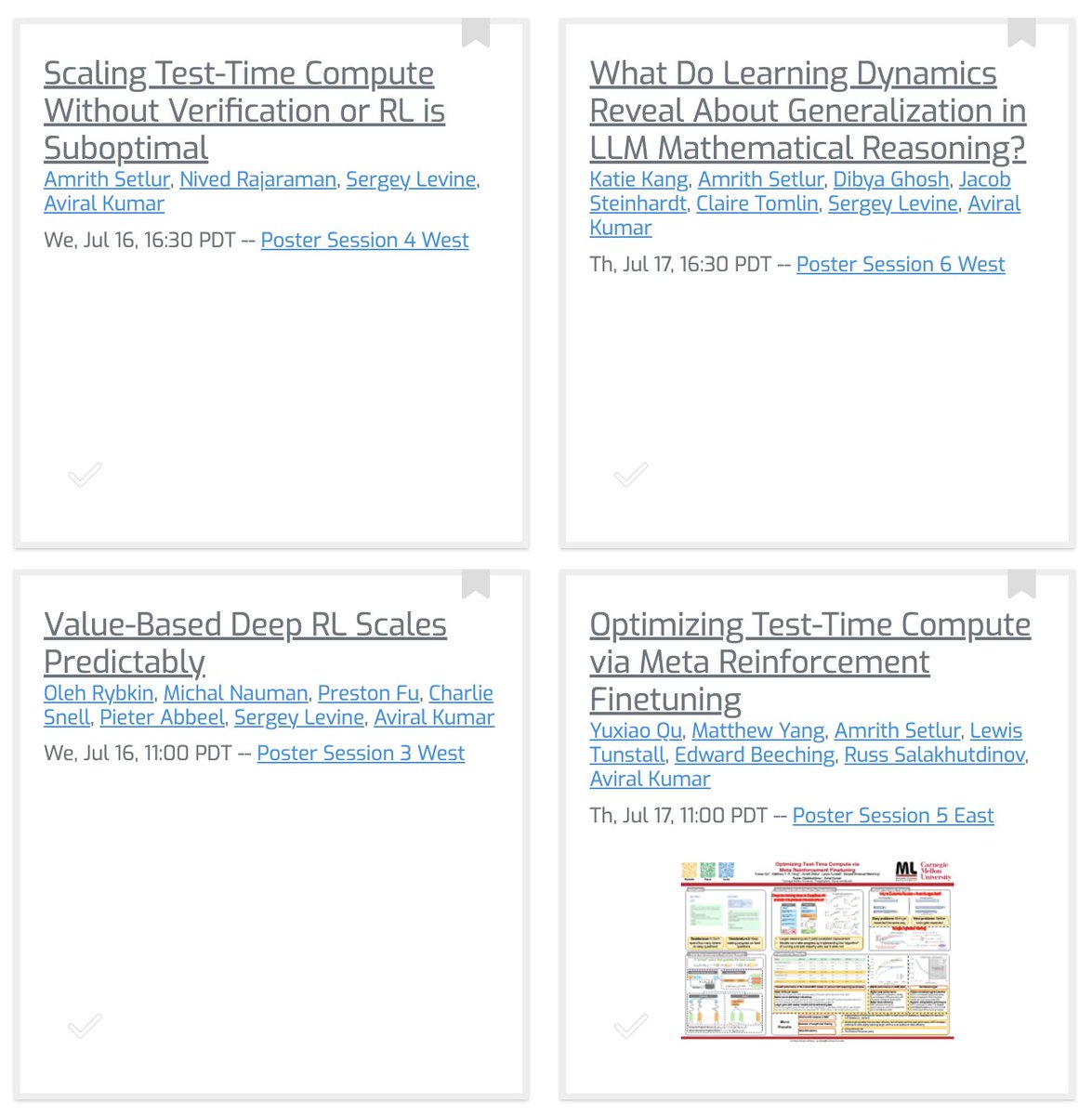

Heading to ICML Conference #ICML2025 this week! DM me if you’d like to chat ☕️ Come by our poster sessions on: 🧠 Optimizing Test-Time Compute via Meta Reinforcement Fine-Tuning (arxiv.org/abs/2503.07572) 🔍 Learning to Discover Abstractions for LLM Reasoning (drive.google.com/file/d/1Sfafrk…)