Quanquan Gu

@quanquangu

Professor @UCLA, Research Scientist at ByteDance | Recent work: SPIN, SPPO, DPLM, GPM, CryoFM, MARS, TPA | Opinions are my own

ID: 901303999529312256

http://www.cs.ucla.edu/~qgu/

26-08-2017 04:43:13

1,1K Tweet

13,13K Followers

1,1K Following

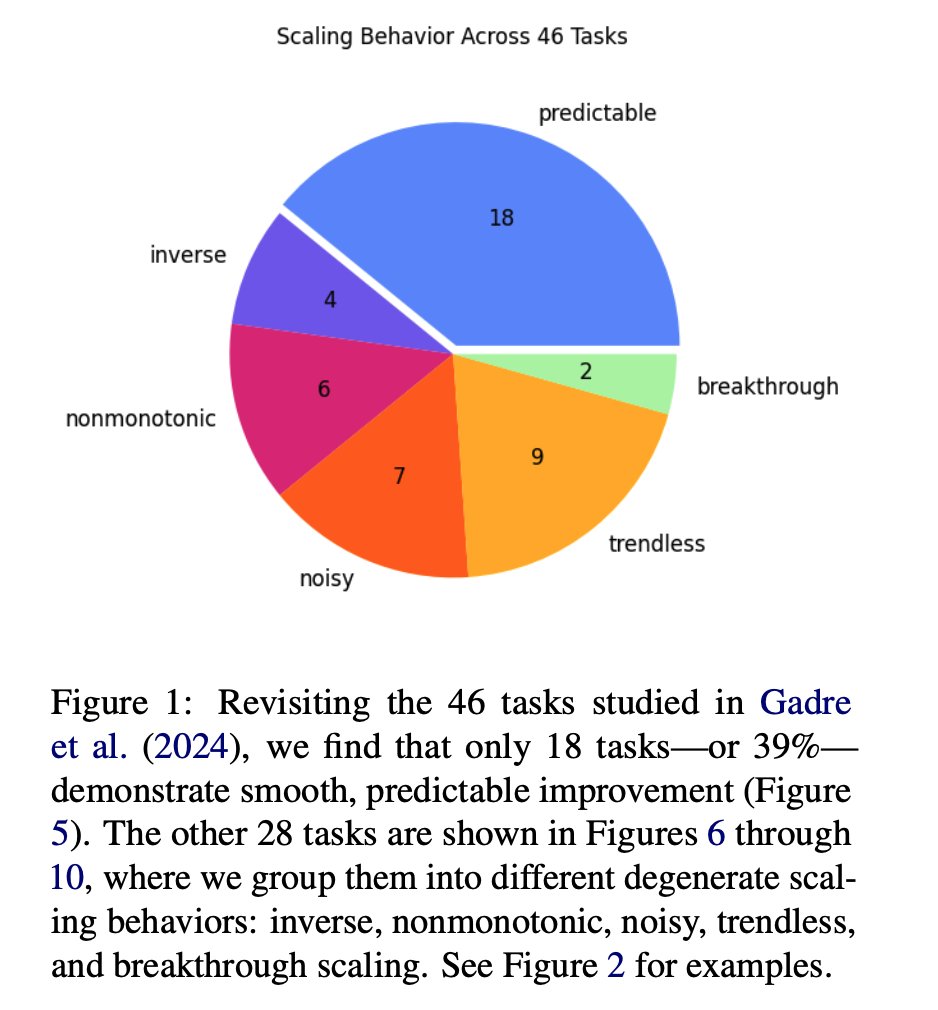

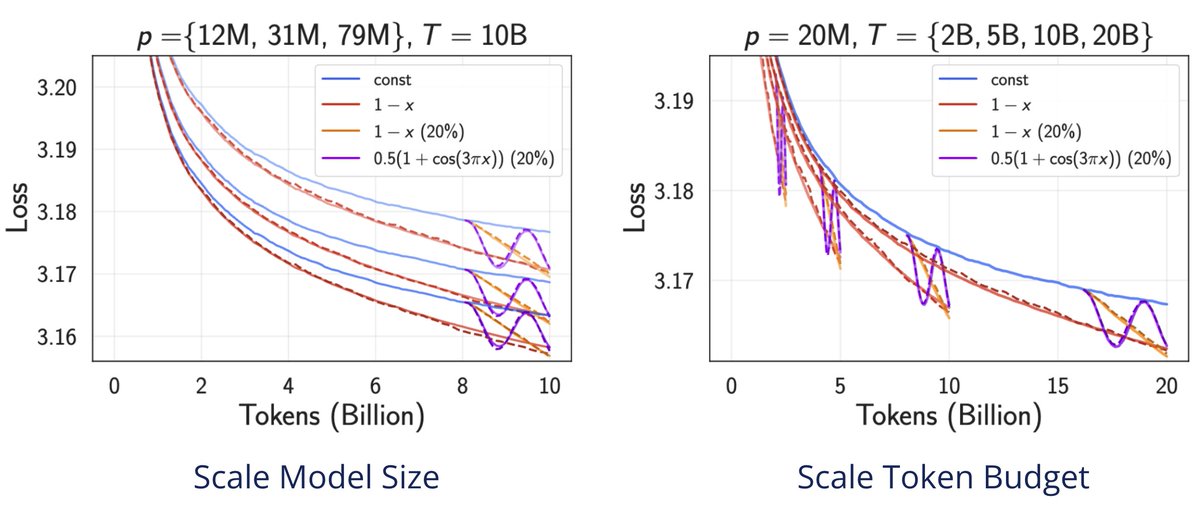

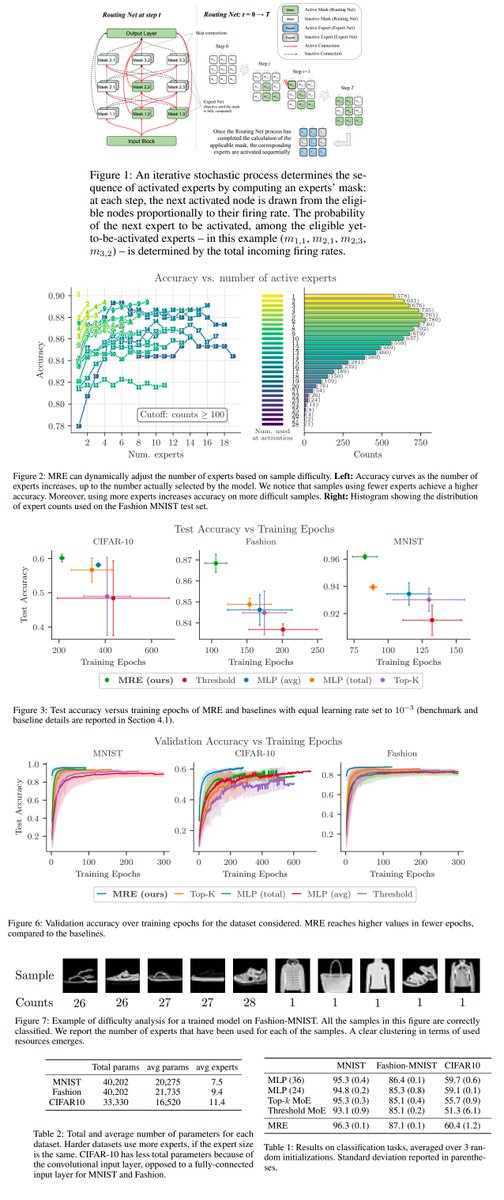

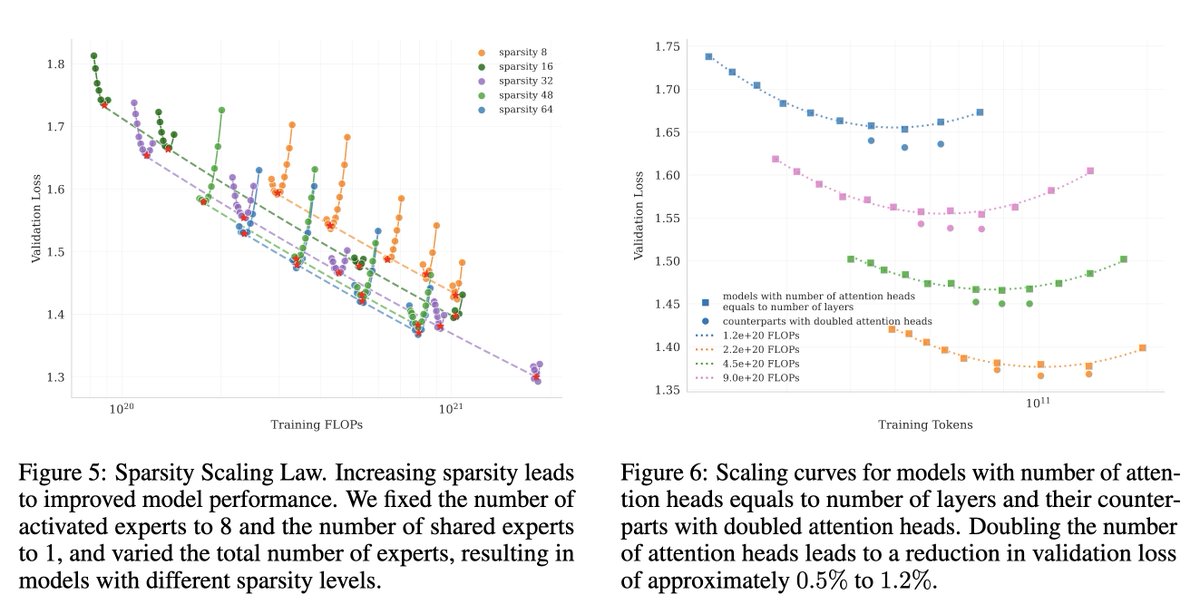

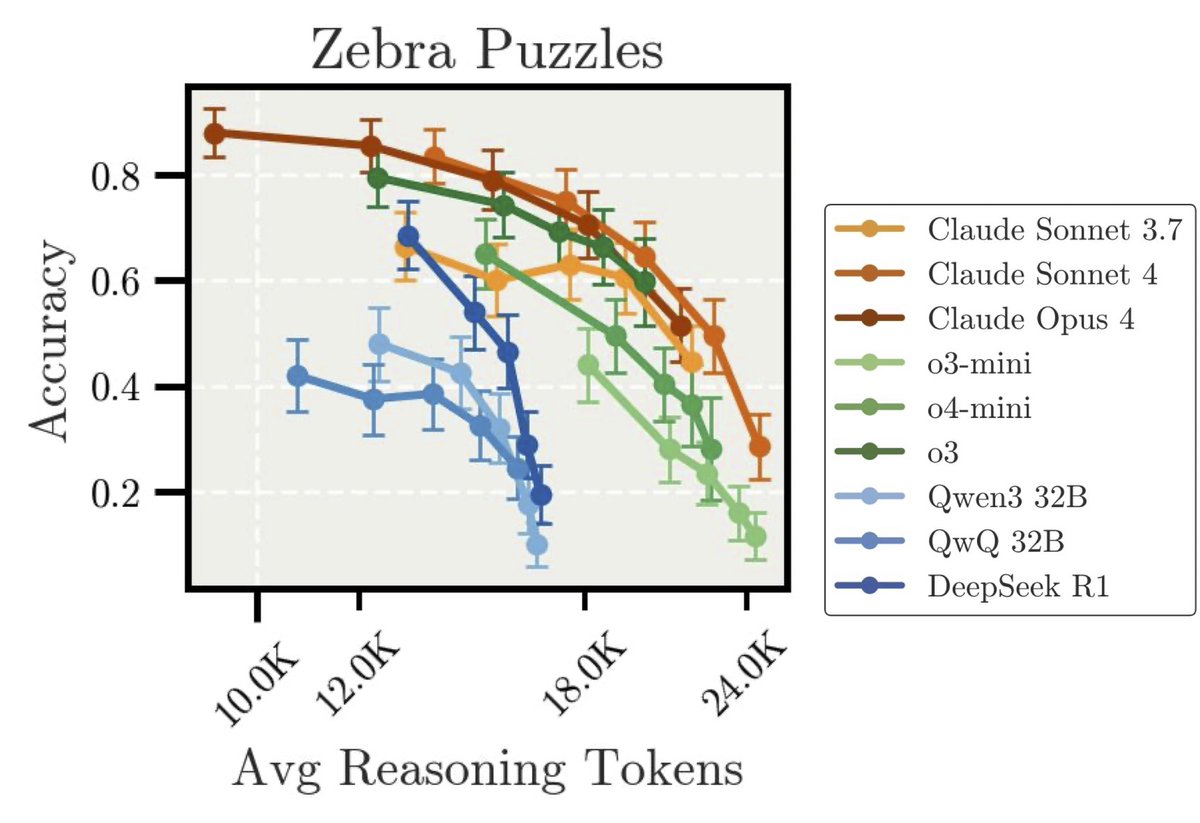

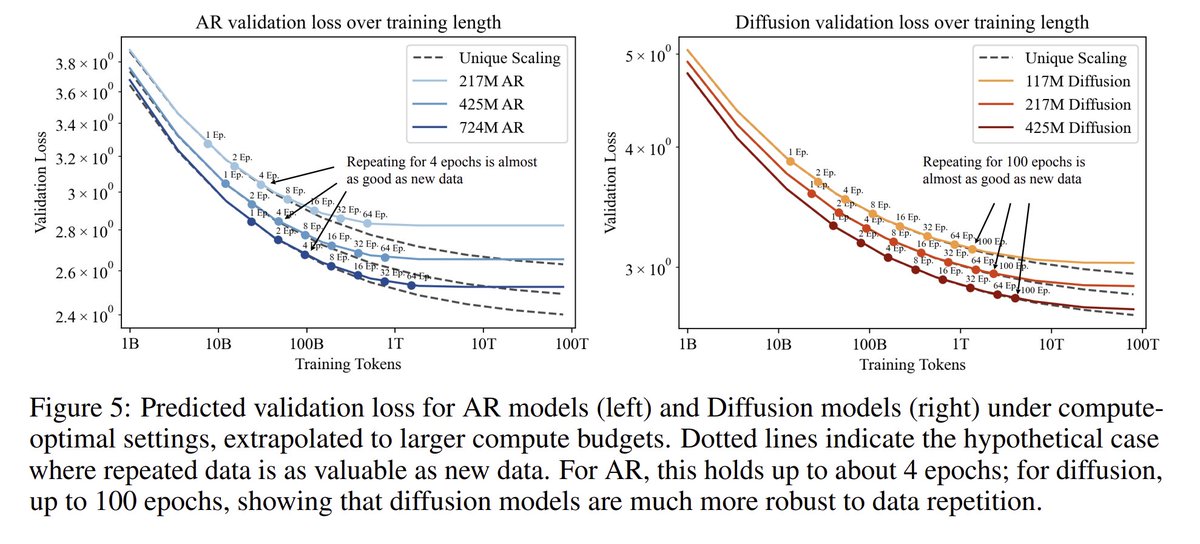

Excited to give a tutorial with Leena C Vankadara on Training Neural Networks at Any Scale (TRAINS) at the ICML Conference at 13:30 (West Ballroom A). Our slides can be found here: go.epfl.ch/ICML25TRAINS Please join us!