Rogerio Feris

@rogerioferis

Principal scientist and manager at the MIT-IBM Watson AI Lab

ID: 1226554235770298369

http://rogerioferis.org 09-02-2020 17:11:45

47 Tweets

1.1K Followers

353 Following

We are looking for a summer intern (MSc/PhD) to work on large language models for sports & entertainment, with the goal of improving the experience of millions of fans as part of major tournaments (US Open/Wimbledon) @IBMSports MIT-IBM Watson AI Lab Apply at: krb-sjobs.brassring.com/TGnewUI/Search…

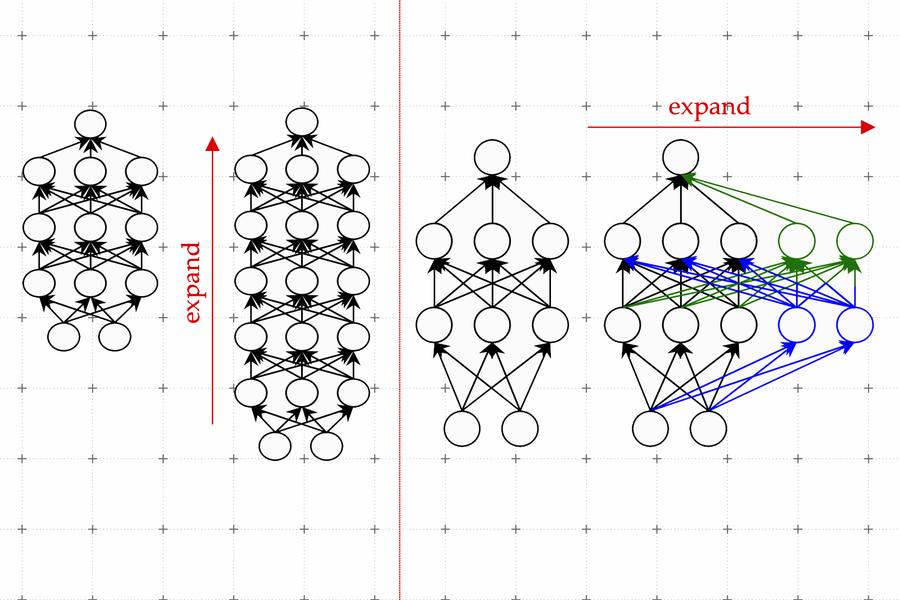

New technique from the MIT-IBM Watson AI Lab and its collaborators learns to "grow" a larger machine-learning model from a smaller, pre-trained model, reducing the monetary and environmental cost of developing AI applications while achieving similar or improved performance. news.mit.edu/2023/new-techn…

🚨Can we self-align LLMs with an expert domain like biomedicine with limited supervision? Introducing Self-Specialization, uncovering expertise latent within LLMs to boost their utility in specialized domains. arxiv.org/abs/2310.00160 Georgia Tech School of Interactive Computing Machine Learning at Georgia Tech MIT CSAIL MIT-IBM Watson AI Lab 1/8