Ruohan Gao

@ruohangao1

Assistant Professor @umdcs. Ph.D. @UTCompSci and PostDoc @StanfordAILab. I teach machines to see👀, hear👂, and feel🖐️.

ID: 1283223973254164485

https://ruohangao.github.io/ 15-07-2020 02:17:04

134 Tweets

1.1K Followers

460 Following

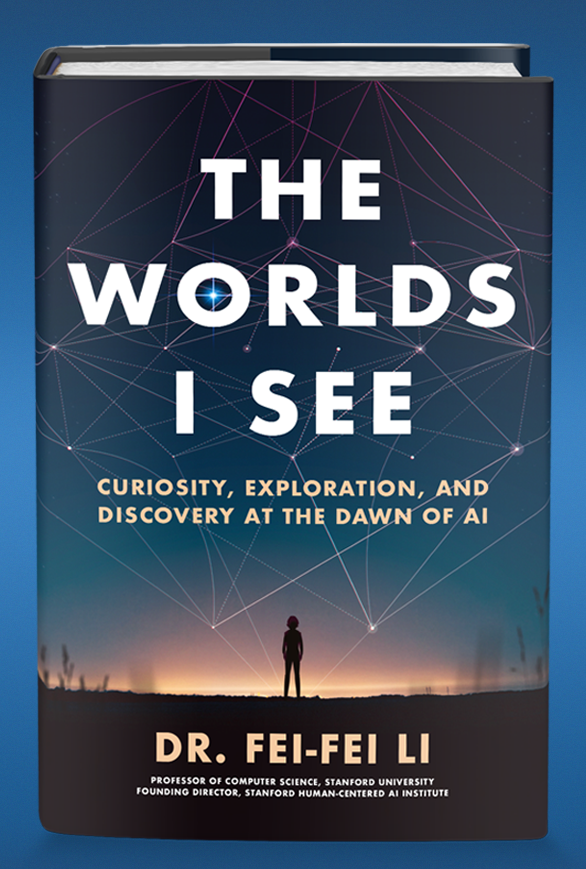

Excited to share that my book, The Worlds I See, will be published on Nov 7, 2023 from Flatiron Books & Melinda French Gates' Moment of Lift Books. I believe AI can help people & I hope you’ll come along on the journey. Preorder momentoflift.com/the-worlds-i-s… #MomentofLiftBooks #WorldsISee 1/

Introducing our new work at the Conference on Robot Learning 2023, a novel brain-robot interface system: NOIR (Neural Signal Operated Intelligent Robots). Website: noir-corl.github.io Paper: arxiv.org/abs/2311.01454 🧠🤖

Really enjoyed reading the book by my postdoc advisor Fei-Fei Li. Fei-Fei is not only my enduring mentor but also the best role model for my 8-month-old daughter and for young kids in the generations to come, inspiring them to actively embrace the transformative era of AI! 🦾 🧭

Grad school application season! We will be recruiting highly motivated PhD students for our lab! We have so many vibrant research groups at the UMD Department of Computer Science doing cutting-edge CV/ML work! Don't miss out on the opportunity! 🤩

“What would an AI research & education mission that crossed the whole campus look like?” This is the question posed to me & a working group by Univ. of Maryland's Provost Rice in summer '23. Now we’re announcing the answer: #AIMaryland, the AI Interdisciplinary Institute at Maryland.

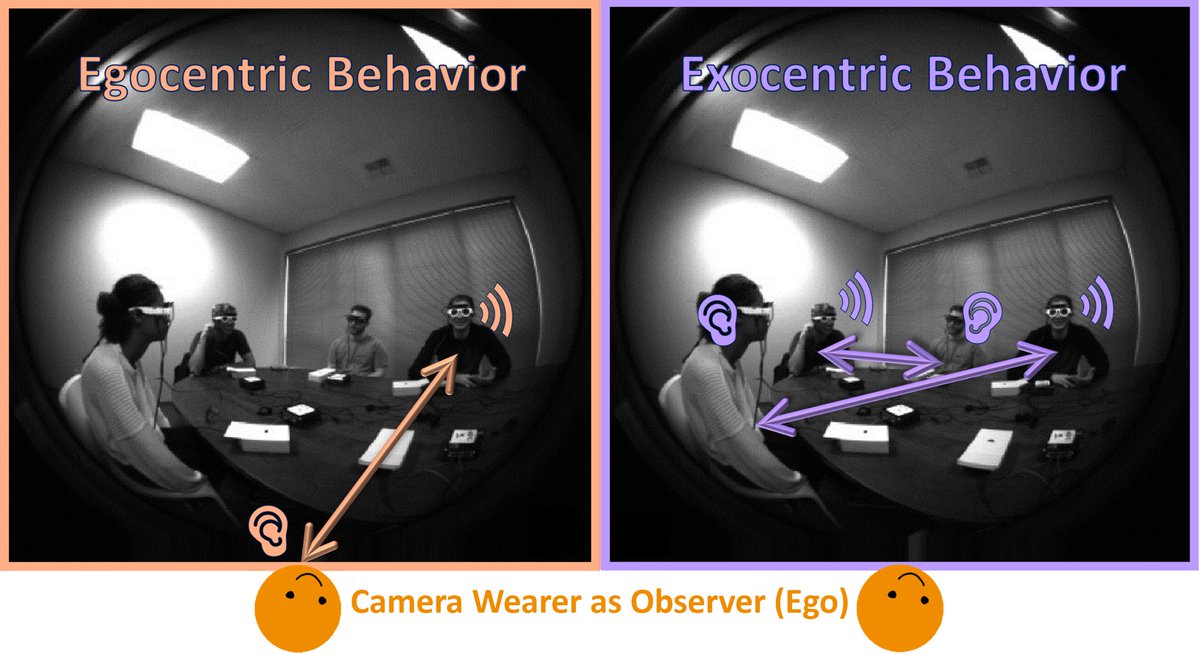

Want to make computers that can see *and* hear? Come to the Sight and Sound workshop on Monday, 6/17 at #CVPR2024! Schedule: sightsound.org Invited talks by: Hilde Kuehne, Shyam Gollakota, Ruohan Gao, Tengda Han, Samuel Clarke, Alexander Richard

In case you were wondering what’s going on with the back of the #CVPR2024 T-shirt: it’s a hybrid image made by Aaron Inbum Park and Daniel Geng! When you look at it up close, you’ll just see the Seattle skyline, but when you view it from a distance, the text “CVPR” should appear.

Thank you for the kind words, Brendan Iribe! It’s truly inspiring to be part of a department housed in a building made possible by your generosity and vision. I’m so excited to contribute to advancing Vision ML with brilliant colleagues and students here at UMD!

Super excited to welcome Ritwik Gupta 🇺🇦 ✈️ ACL to the UMD Department of Computer Science!