Michigan SLED Lab

@sled_ai

Situated Language and Embodied Dialogue (SLED) research lab at @michigan_AI, led by Joyce Chai.

ID: 1663360763195523072

https://sled.eecs.umich.edu/

30-05-2023 01:45:13

103 Tweets

283 Followers

114 Following

How well can VLMs detect and explain humans' procedural mistakes, like in cooking or assembly? 🧑‍🍳🧑‍🔧 My new pre-print with Itamar Bar-Yossef, Yayuan Li, Brian Zheyuan Zhang, Jason Corso, and Joyce Chai (Michigan SLED Lab, MichiganAI, Computer Science and Engineering at Michigan) dives into this! arxiv.org/pdf/2412.11927

I'm excited to join #ACL ACL Mentorship as a mentor! Your ideas can help make mentorship more impactful. Let’s plan together!🚀 forms.gle/dURA4QUANH3pBx…

Excited that this work got into ICLR as an Oral. Kudos to my collaborators Brian Zheyuan Zhang, @Hu_FY_, Jayjun Lee, Freda Shi, Parisa Kordjamshidi, and Michigan SLED Lab! ☺️

Meet VEGGIE🥦Adobe Research VEGGIE is a video generative model trained solely with diffusion loss, designed for both video concept grounding and instruction-based editing. It effectively handles diverse video concept editing tasks by leveraging pixel-level grounded training in a

On my way to NAACL✈️! If you're also there and interested in grounding, don't miss our tutorial on "Learning Language through Grounding"! Mark your calendar: May 3rd, 14:00-17:30, Ballroom A. Another exciting collaboration with Martin Ziqiao Ma, Jiayuan Mao, Parisa Kordjamshidi, and Michigan SLED Lab!

Thrilled to share that VEGGIE is accepted to #ICCV2025! 🎉 Check out the full thread by Shoubin Yu for details. Funny enough — it’s been 6 years since I came to the US, and this might be my first time setting foot in Hawaii. 🌴

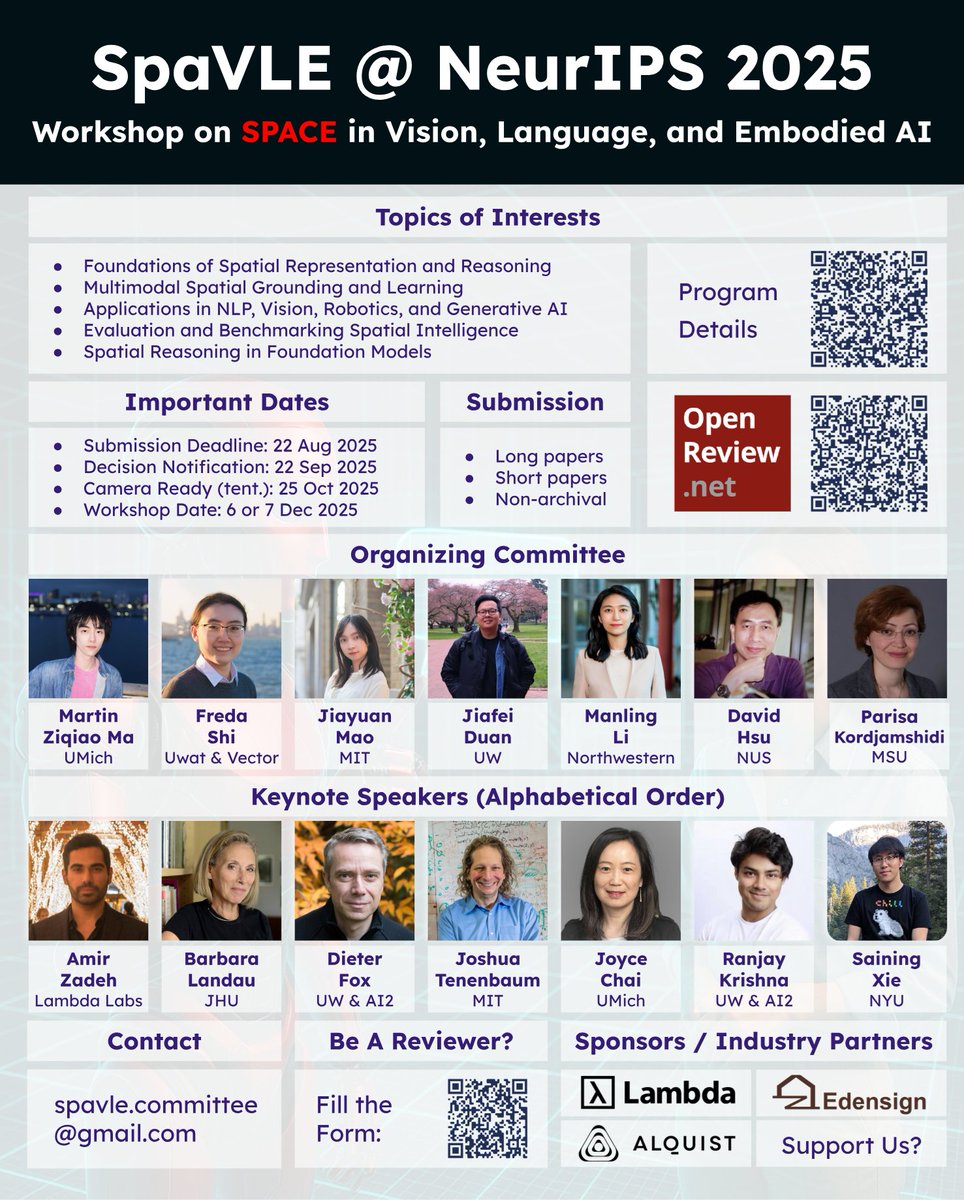

📣 Excited to announce SpaVLE: #NeurIPS2025 Workshop on Space in Vision, Language, and Embodied AI! 👉 …vision-language-embodied-ai.github.io 🦾Co-organized with an incredible team → Freda Shi · Jiayuan Mao · Jiafei Duan · Manling Li · David Hsu · Parisa Kordjamshidi 🌌 Why Space & SpaVLE? We