Samuel Schmidgall

@srschmidgall

PhD @JohnsHopkins // student researcher @Google @GoogleDeepmind // prev intern @AMD @Stanford

ID: 1323123590020169728

https://samuelschmidgall.github.io/

02-11-2020 04:43:47

520 Tweets

2.2K Followers

413 Following

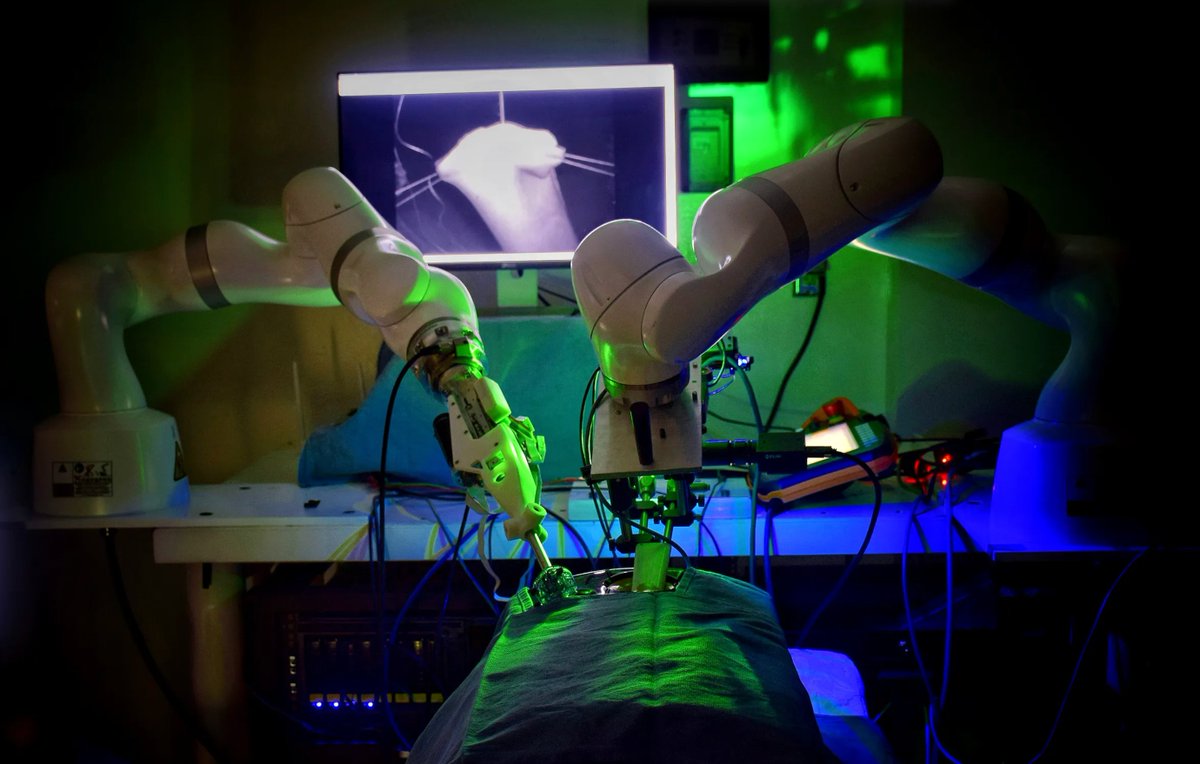

🏥🤖 Autonomous surgical robots are expected to be capable of higher levels of autonomy due to the increased capabilities of AI. Check out our new article in IEEE Spectrum, which discusses the history and future of autonomous robotic surgery!