Sham Kakade

@shamkakade6

Harvard Professor.

Full stack ML and AI.

Co-director of the Kempner Institute for the Study of Artificial and Natural Intelligence.

ID: 1069106254927273984

https://shamulent.github.io 02-12-2018 05:49:28

418 Tweets

14.14K Followers

463 Following

New in the Deeper Learning blog: Kempner researchers show how VLMs speak the same semantic language across images and text. bit.ly/KempnerVLM by Isabel Papadimitriou, Chloe H. Su, Thomas Fel, Stephanie Gil, and Sham Kakade #AI #ML #VLMs #SAEs

We have a new SSM theory paper, just accepted to COLT, revisiting recall properties of linear RNNs. It's surprising how much one can delve into, and how beautiful it can become. With (and only thanks to) the amazing Alexandre and Francis Bach arxiv.org/pdf/2502.09287

[1/n] New work [JSKZ25] w/ Jikai Jin, Vasilis Syrgkanis, Sham Kakade. We introduce new formulations and tools for evaluating language model capabilities, which help explain recent observations of post-training behaviors of Qwen-series models — there is a sensitive causal link

![Hanlin Zhang (@_hanlin_zhang_) on Twitter photo](https://pbs.twimg.com/media/GtviUVkWAAAs6zM.png)

I took this class. Good times! Thank you Zoubin Ghahramani and Geoffrey Hinton!! Yeah, no backprop. I view this as more the “modeling phase” of deep learning vs “scale”. I’m going with: the ideas are still relevant for AI4science.

Thrilled to share that our work received the Outstanding Paper Award at ICML! I will be giving the oral presentation on Tuesday at 4:15 PM. Jaeyeon (Jay) Kim @ICML and I both will be at the poster session shortly after the oral presentation. Please attend if possible!

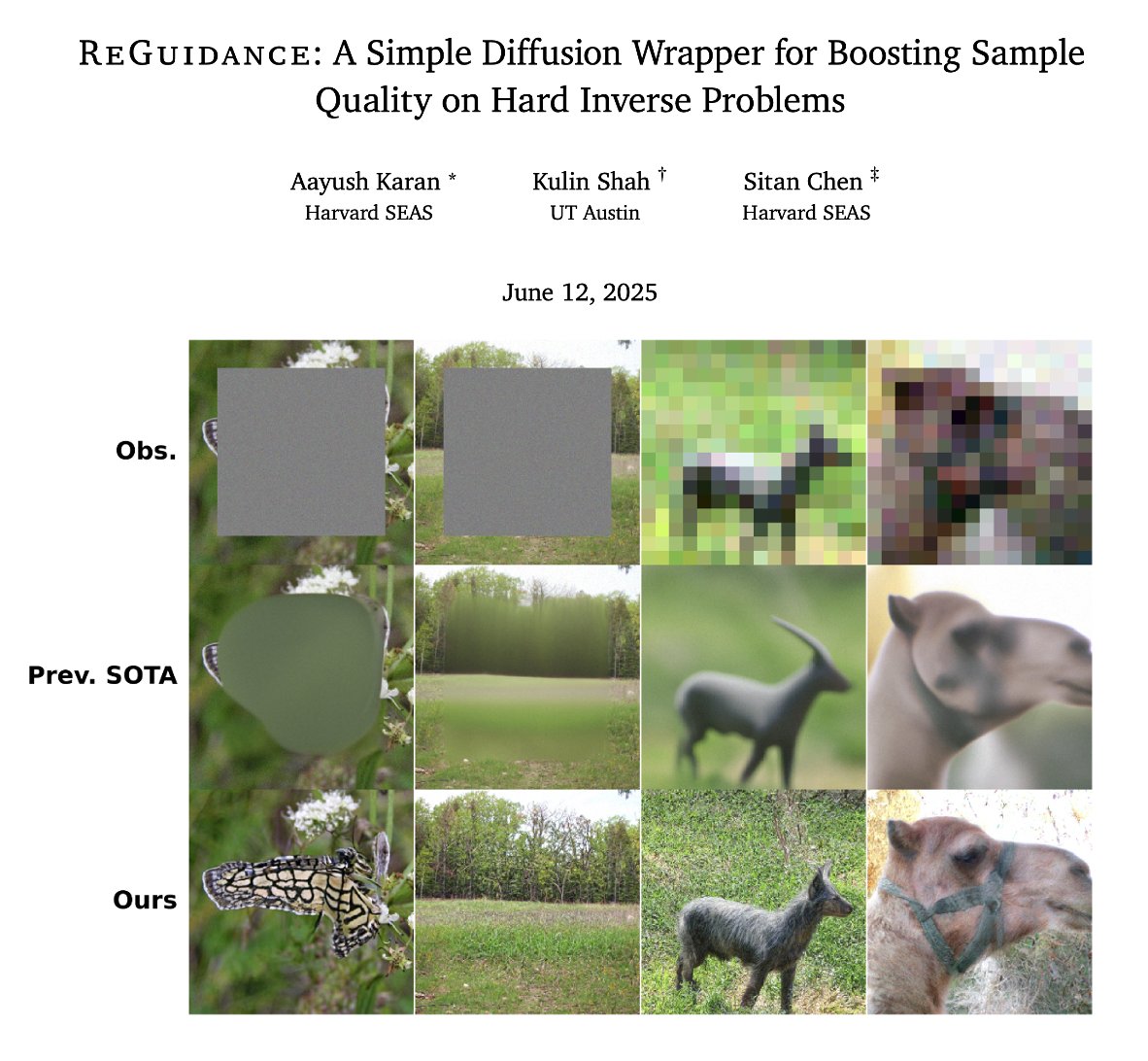

A team from #KempnerInstitute, Harvard SEAS & Computer Science at UT Austin has won a best paper award at #ICML2025 for work unlocking the potential of masked diffusion models. Congrats to Jaeyeon (Jay) Kim @ICML, Kulin Shah, Vasilis Kontonis, Sham Kakade and Sitan Chen. kempnerinstitute.harvard.edu/news/kempner-i… #AI