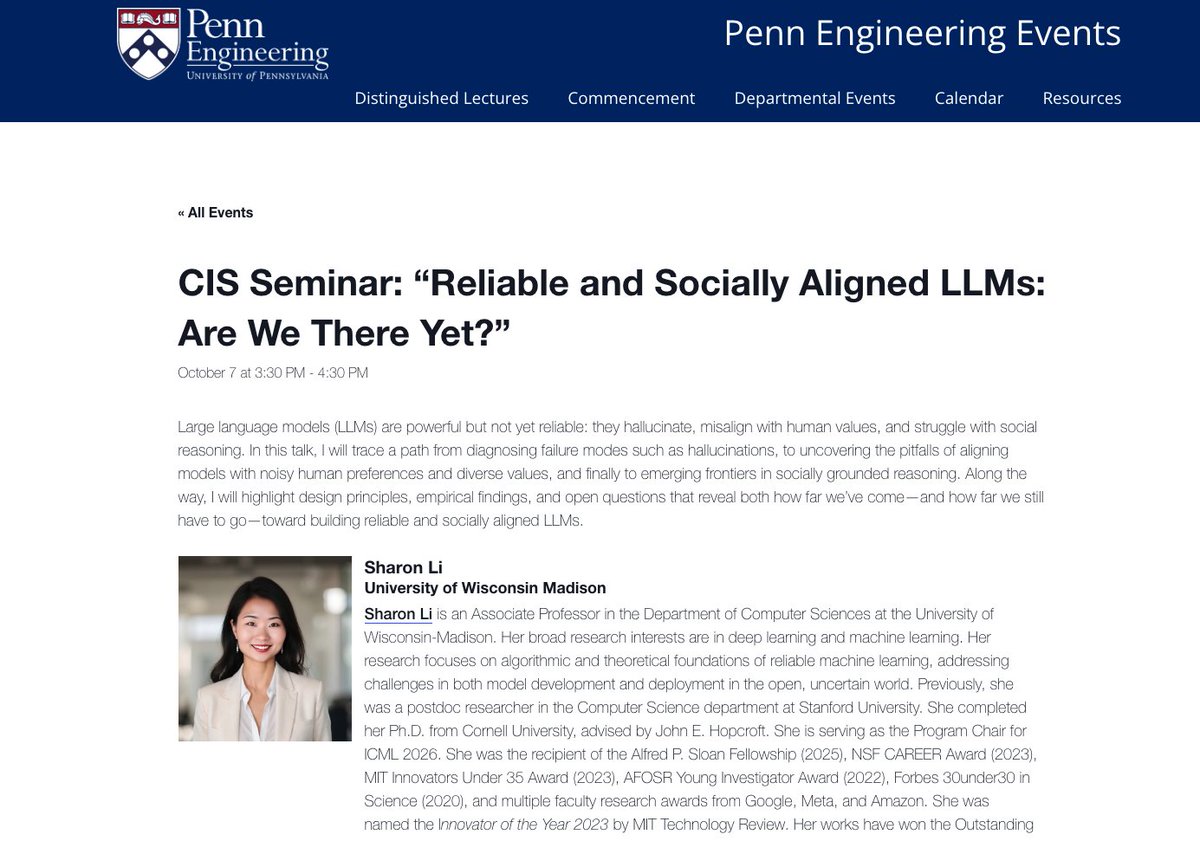

Sharon Y. Li

@sharonyixuanli

Assistant Professor @WisconsinCS. Formerly postdoc @Stanford, Ph.D. @Cornell. Making AI safe and reliable for the open world.

ID: 1107711818997395458

https://pages.cs.wisc.edu/~sharonli

18-03-2019 18:34:12

707 Tweets

9.9K Followers

757 Following

Check out our recent work led by Leitian Tao with the AI at Meta team on using hybrid RL for mathematical reasoning tasks. 🔥 Hybrid RL offers a promising way to go beyond purely verifiable rewards by combining the reliability of verifier signals with the richness of learned feedback.

Thanks Dan Hendrycks for leading this. Check out our latest preprint on a definition of AGI.

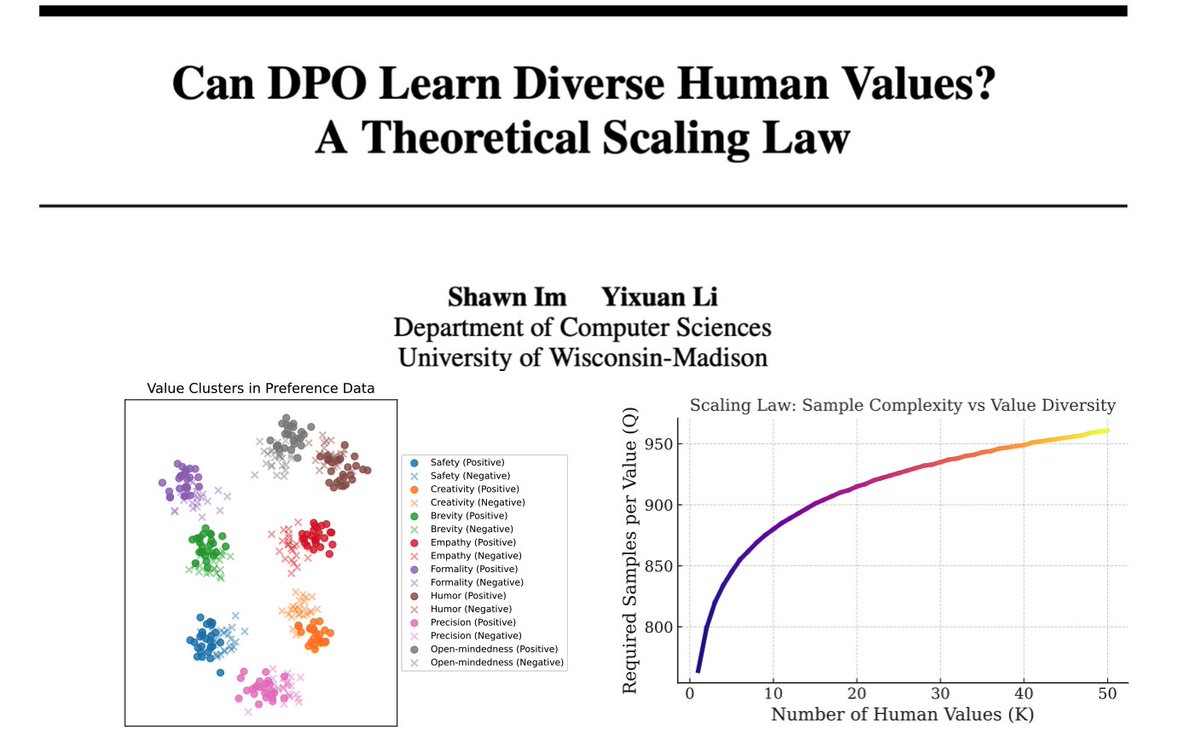

Human preference data is noisy: inconsistent labels, annotator bias, etc. No matter how fancy the post-training algorithm is, bad data can sink your model. 🔥 Min Hsuan (Samuel) Yeh and I are thrilled to release PrefCleanBench — a systematic benchmark for evaluating data cleaning