Sheng-Yu Wang

@shengyuwang6

PhD Student @CarnegieMellon

ID: 1314449508747546625

http://peterwang512.github.io

09-10-2020 06:16:08

80 Tweets

388 Followers

540 Following

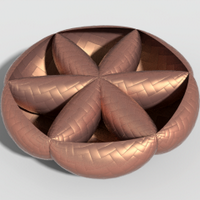

Can we generate a training dataset of the same object in different contexts for customization? Check out our work SynCD, which uses Objaverse assets and shared attention in text-to-image models to do exactly that. cs.cmu.edu/~syncd-project/ w/ Xi Yin, Jun-Yan Zhu, Ishan Misra, Samaneh Azadi

Hi there, Phillip Isola and I wrote a short article (500 words) on Generative Modeling for the Open Encyclopedia of Cognitive Science. We briefly discuss the basic concepts of generative models and their applications. Don't miss Phillip Isola's hand-drawn cats in Figure 1!

For folks in the ACM SIGGRAPH community: You may or may not be aware of the controversy around the next #SIGGRAPHAsia location, summarized here: cs.toronto.edu/~jacobson/webl… If you're concerned, consider signing this letter: docs.google.com/document/d/1ZS… via this form docs.google.com/forms/d/e/1FAI…