Shenzhi Wang🌟

@shenzhiwang_thu

PhD Candidate @Tsinghua_Uni | Developer of 🔥Xwen-7B&72B-Chat🔥Llama3-8B&70B-Chinese-Chat & 🔥Mistral-7B-v0.3-Chinese-Chat | Research Focuses: RL+LLM+Agent

ID: 1676443035184316416

https://shenzhi-wang.netlify.app 05-07-2023 04:09:12

330 Tweets

1.1K Followers

406 Following

lmarena.ai (formerly lmsys.org) OpenAI Hey lmarena.ai (formerly lmsys.org) team, we'd love to see our open-sourced model, Xwen-72B-Chat, included in the Chatbot Arena! 🥹 It supports both English and Chinese, with strong performance across multiple benchmarks. We have sufficient inference compute for API integration. We've sent several

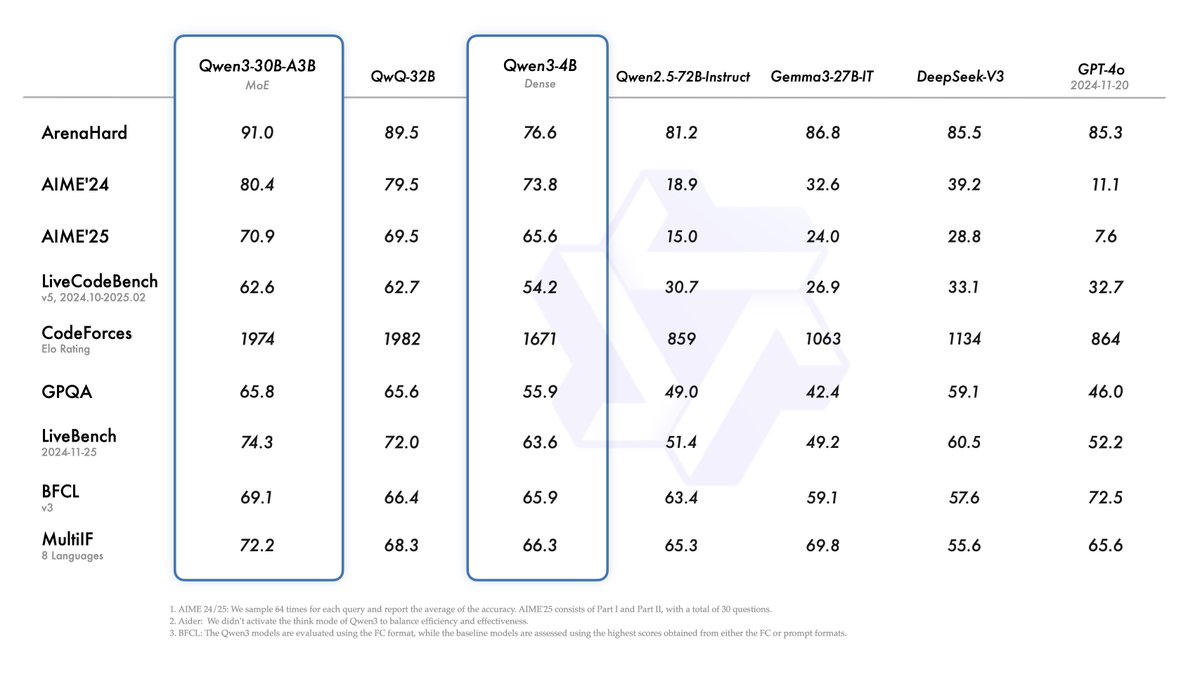

Today, we release QwQ-32B, our new reasoning model with only 32 billion parameters that rivals cutting-edge reasoning models, e.g., DeepSeek-R1. Blog: qwenlm.github.io/blog/qwq-32b HF: huggingface.co/Qwen/QwQ-32B ModelScope: modelscope.cn/models/Qwen/Qw… Demo: huggingface.co/spaces/Qwen/Qw… Qwen Chat:

Cooragent by our Tsinghua LeapLab (LeapLab@THU): Open-source multi-agent collaboration framework! 🚀 Tell it to "build an AI intelligence secretary" → auto-scans, curates updates, delivers daily reports. MIT Licensed | Dev-friendly. Try ⬇️ github.com/LeapLabTHU/coo… #AgenticAI