Shivam Chandhok

@shivamchandhok2

Computer Vision. Robotics. Iron Man fan. Coffee aficionado.

MSc (PhD Track) @UBC, Vancouver. Research Engineer @INRIA, France. Researcher @IIT Hyderabad.

ID: 2228129630

03-12-2013 11:13:39

3.3K Tweets

175 Followers

483 Following

the legendary Daniel Han just made a full 3-hour workshop on reinforcement learning and agents. he goes through RL fundamentals, kernels, quantization, and RL+Agents covering both theory and code. great video to get up to speed on these topics.

*Emergence and Evolution of Interpretable Concepts in Diffusion Models* by Berk Tınaz, Zalan Fabian, and Mahdi Soltanolkotabi. SAEs trained on cross-attention layers of StableDiffusion are (surprisingly) good and can be used to intervene on the generation. arxiv.org/abs/2504.15473

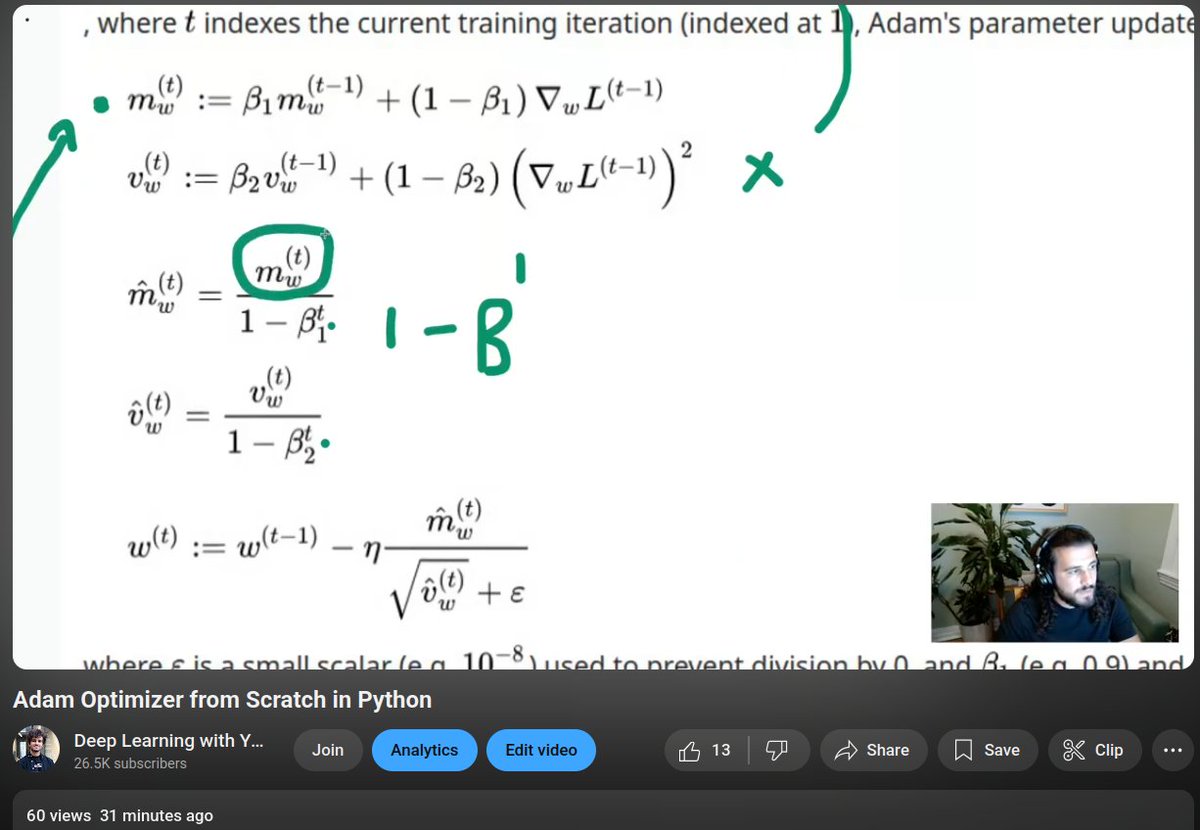

Great new video showing how to implement Adam optimization from scratch! Thanks for the great content, Yacine Mahdid.

Slides for my lecture “LLM Reasoning” at Stanford CS 25: dennyzhou.github.io/LLM-Reasoning-… Key points: 1. Reasoning in LLMs simply means generating a sequence of intermediate tokens before producing the final answer. Whether this resembles human reasoning is irrelevant. The crucial