Siddhartha Singh

@sid____

ML Researcher @bfh_hesb

Views are my own.

ID: 50966679

26-06-2009 07:43:48

4.4K Tweets

203 Followers

421 Following

Over half of Swiss families are struggling to make ends meet, according to a survey. We’ve interviewed Philippe Gnaegi, director of Pro Familia Schweiz | Suisse | Svizzera, who is now calling for swift political action. 👇 buff.ly/4cbYeF9

Time to use that in-room safe. Hackers crack millions of hotel room keycards by the legendary Andy Greenberg (@agreenberg at the other places). wired.com/story/saflok-h…

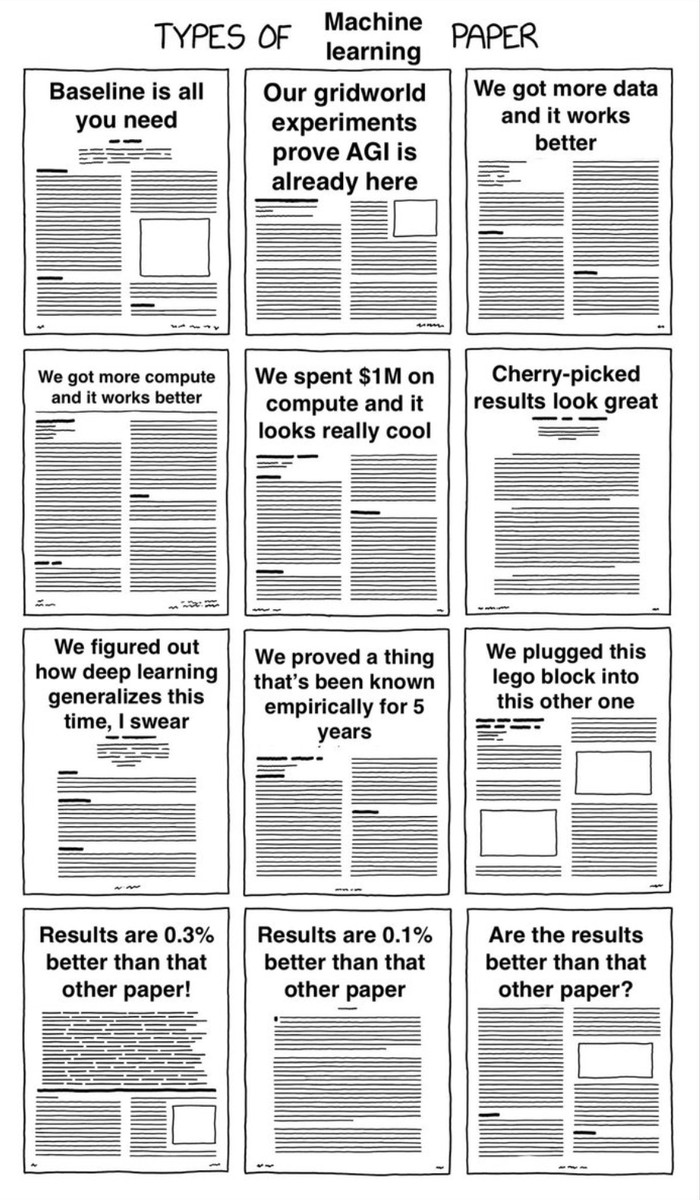

The 12 types of ML papers. (created by Natasha Jaques and Max Kleiman-Weiner) #MachineLearning #ML #DataScience

Swiss academics criticise a “major discrepancy” between the resources available and Switzerland’s “ambitious” strategic objectives, which remain unchanged. buff.ly/3Uti6gs Swiss National Science Foundation, Innosuisse, swissuniversities, ETH-Rat, ETH Zurich, EPFL

Apparently Google laid off their entire Python Foundations team, WTF! (Aaron Gokaslan, who is one of the pybind11 maintainers, just informed me, asking in what ways they can re-fund pybind11.) The team seems to have done substantial work that seems critical for Google internally as well.

@datenschatz I usually consider these as "oh, interesting. Since that doesn't look too complicated to implement, let's bookmark this and use this in a project and see if it actually works as well as advertised. (Spoiler: it usually doesn't.)" With DPO itself, you find that it works pretty

Fixed a bug which caused all training losses to diverge for large gradient accumulation sizes. 1. First reported by Benjamin Marie: gradient accumulation (GA) is supposed to be mathematically equivalent to full batch training, but losses did not match. 2. We reproduced the issue, and further investigation
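
The post above is cut off before it names the cause, so the following is only a minimal sketch of one way such a mismatch can arise, not a description of the actual bug. It assumes micro-batches with different token counts and a loss averaged per micro-batch; the numbers and function names are hypothetical.

```python
# Hypothetical per-token losses for two micro-batches of unequal length.
micro_batches = [
    [2.0, 2.0, 2.0, 2.0, 2.0, 2.0],  # 6 tokens
    [8.0, 8.0],                      # 2 tokens
]


def full_batch_loss(batches):
    """Mean loss over every token, as in one large batch."""
    tokens = [loss for batch in batches for loss in batch]
    return sum(tokens) / len(tokens)


def naive_accumulated_loss(batches):
    """Average of per-micro-batch means (assumed naive accumulation)."""
    means = [sum(batch) / len(batch) for batch in batches]
    return sum(means) / len(means)


def token_weighted_accumulated_loss(batches):
    """Sum losses across micro-batches, then divide by the total token count."""
    total_tokens = sum(len(batch) for batch in batches)
    return sum(sum(batch) for batch in batches) / total_tokens


print(full_batch_loss(micro_batches))                  # 3.5
print(naive_accumulated_loss(micro_batches))           # 5.0
print(token_weighted_accumulated_loss(micro_batches))  # 3.5
```

Under these assumptions, averaging the per-micro-batch means over-weights the short micro-batch, while dividing the summed loss by the total token count recovers the full-batch value.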