Shuran Song

@songshuran

Assistant Professor @Stanford University working on #Robotics #AI #ComputerVision

ID: 751414836240609280

http://shurans.github.io 08-07-2016 13:57:12

275 Tweets

10.1K Followers

486 Following

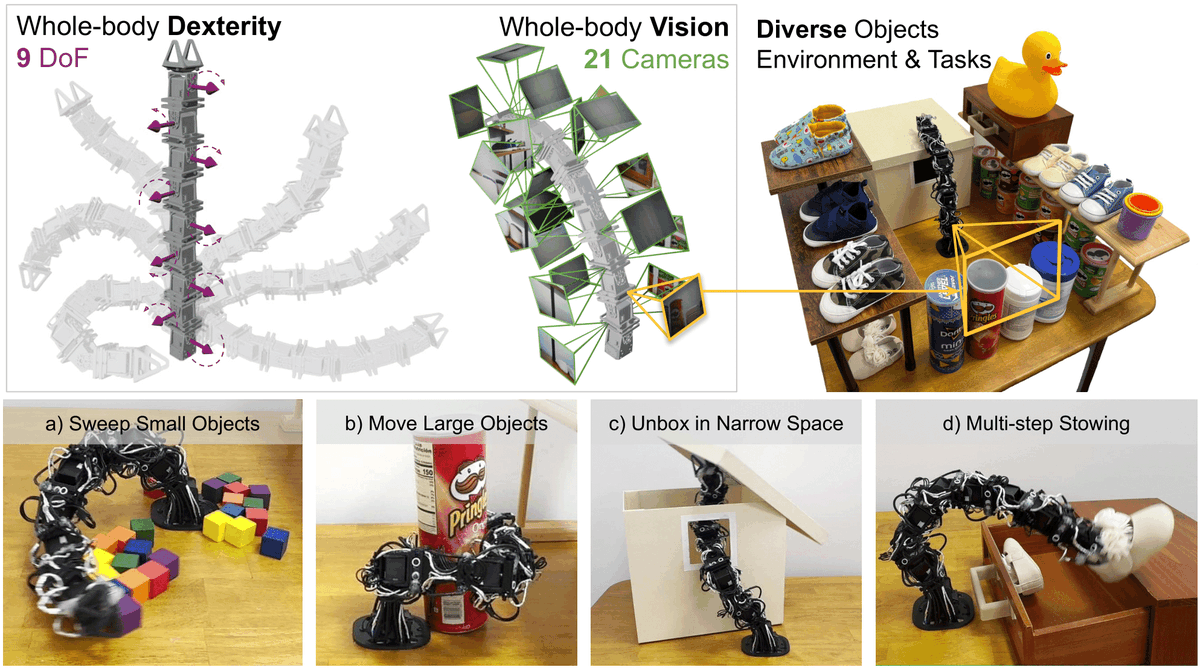

Robot learning has largely focused on standard platforms—but can it embrace robots of all shapes and sizes? In Xiaomeng Xu's latest blog post, we show how data-driven methods bring unconventional robots to life, enabling capabilities that traditional designs and control can't

TRI's latest Large Behavior Model (LBM) paper landed on arXiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the

Thank you Kosta Derpanis!!