Stanford AI Lab

@stanfordailab

The Stanford Artificial Intelligence Laboratory (SAIL), a leading #AI lab since 1963. ⛵️🤖 Emmy-winning video: youtube.com/watch?v=Cn6nmW…

ID: 1059680847425527808

https://ai.stanford.edu/

06-11-2018 05:36:16

3K Tweets

189K Followers

333 Following

What makes a good 3D scene representation? Instead of meshes or Gaussians, we propose Superquadrics to decompose 3D scenes into extremely compact representations ➡️ Check out our paper for exciting use cases in robotics🤖 and GenAI🚀: super-dec.github.io w/ Elisabetta Fedele and Marc Pollefeys

Very happy to share that our work on learning long-history policies received the Best Paper Award at the Workshop on Learned Robot Representations at Robotics: Science and Systems (RSS)! 🤖🥳 Check out our paper if you haven't already: long-context-dp.github.io Thank you to all the organizers and

Stanford Engineering’s fourth decade, 1955-1964, was a period of transformation. New departments were formed, computing entered the classroom, the Stanford “Dish” was completed, and Stanford AI Lab began shaping the future of AI. engineering100.stanford.edu/stories/a-peri…

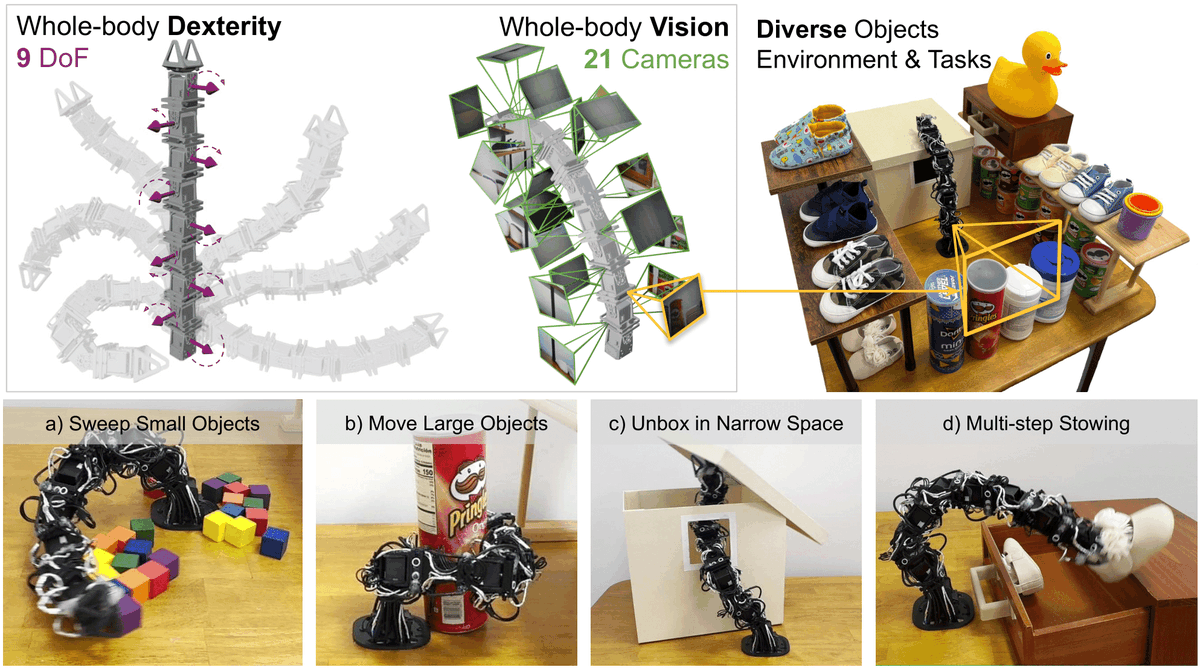

Robot learning has largely focused on standard platforms, but can it embrace robots of all shapes and sizes? In Xiaomeng Xu's latest blog post, we show how data-driven methods bring unconventional robots to life, enabling capabilities that traditional designs and control can't

A great Quanta Magazine article on our theory of creativity in convolutional diffusion models, led by Mason Kamb. See also our paper with new results in version 2: arxiv.org/abs/2412.20292, to be presented as an oral at ICML 2025 #icml25. Thanks, Webb Wright!