Center for Research on Foundation Models

@stanfordcrfm

Making foundation models more reliable and accessible.

ID: 1523887392494555136

10-05-2022 04:48:09

72 Tweets

2.2K Followers

3 Following

🔥#TextGrad is now multi-modal! TextGrad boosts GPT-4o's visual reasoning ability: 📊MathVista score 63.8➡️66.1 w/ TextGrad 🧬Reduces ScienceQA error rate by 20%. Best reported 0-shot score. Tutorial: colab.research.google.com/github/zou-gro… Great work, Pan Lu, Mert Yuksekgonul + team! Works

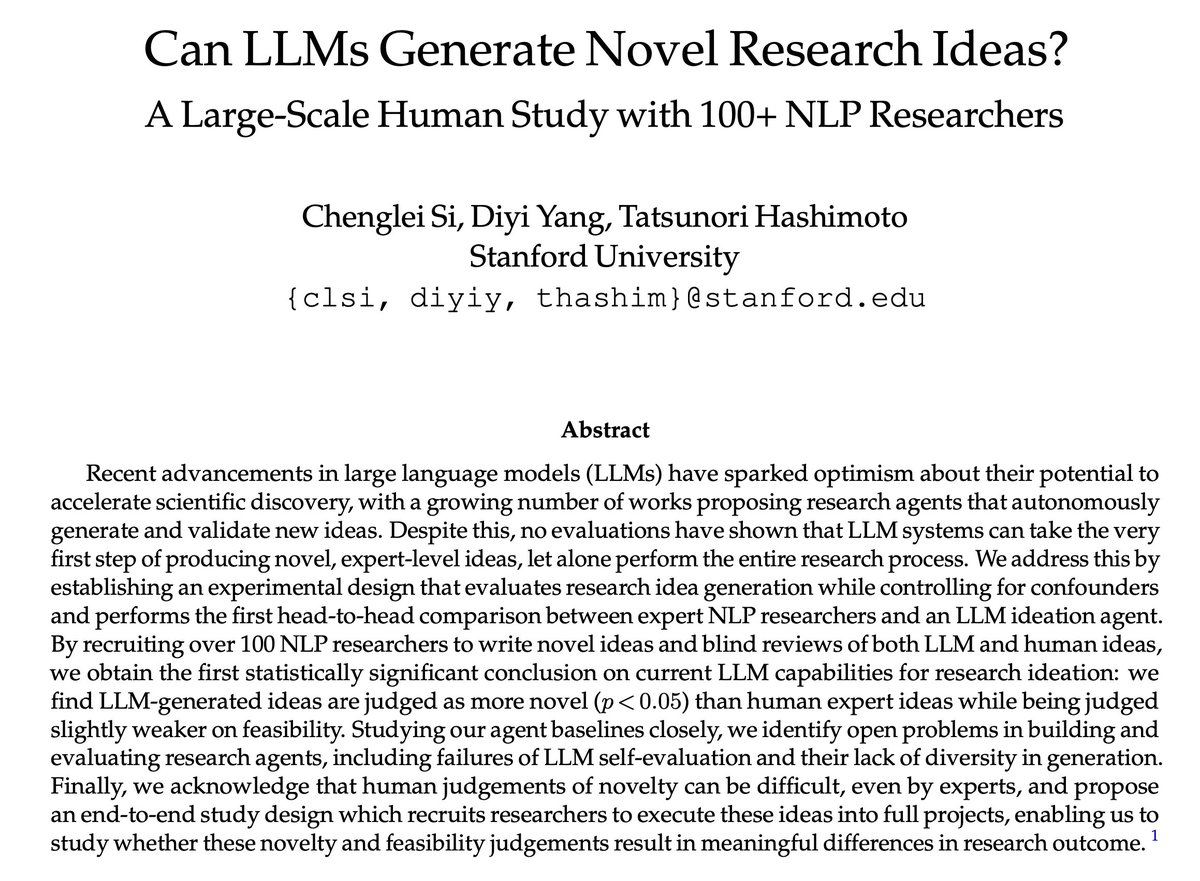

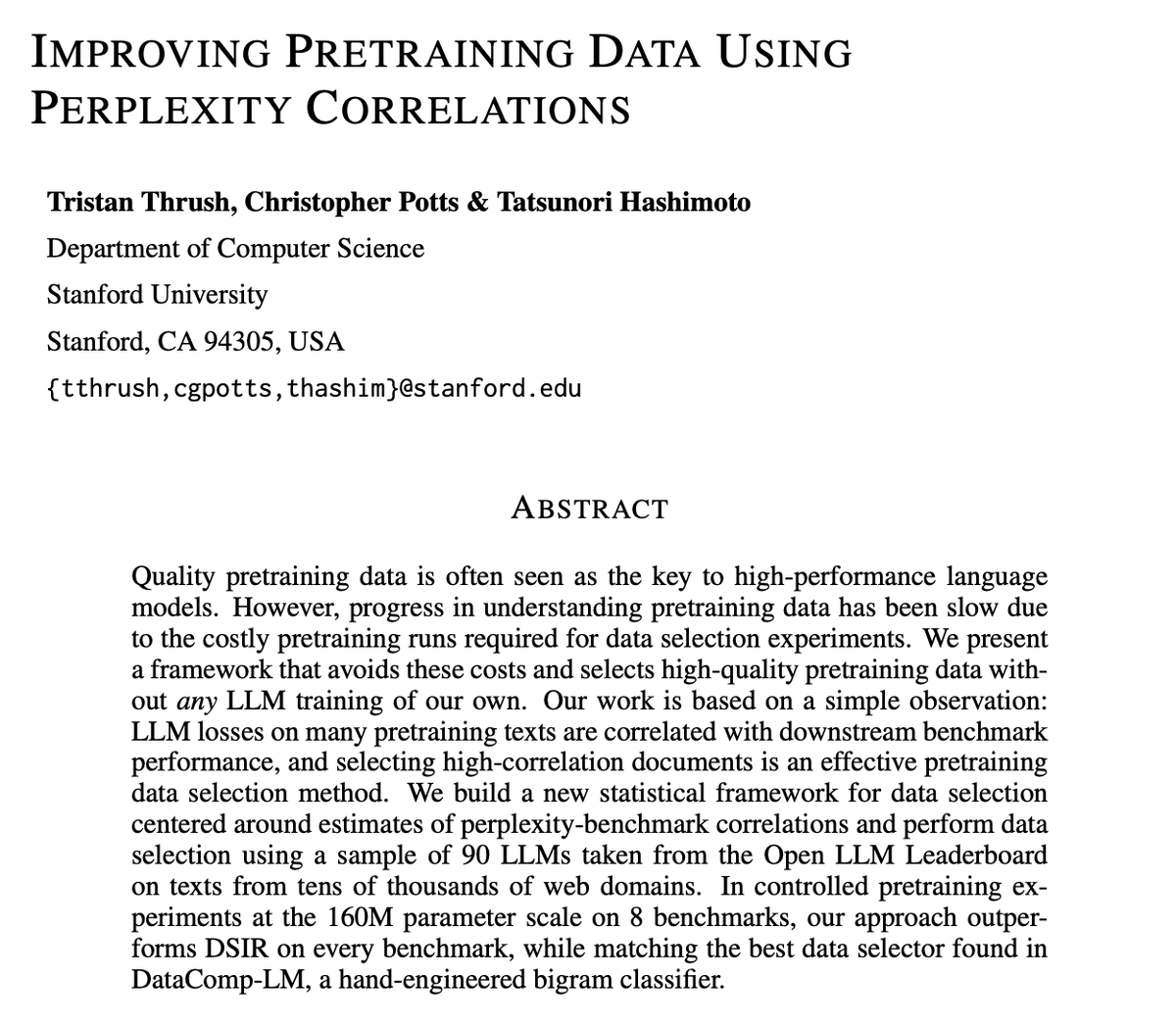

Selecting pretraining data points based on correlation with downstream tasks is an effective data mixing technique. I love papers that are a simple, elegant idea executed rly well! Lovely read from Tristan Thrush, Christopher Potts, and Tatsunori Hashimoto 😊 arxiv.org/abs/2409.05816

we shipp’d 👭 on-device lms and frontier cloud lms. and…they were a match☺️. 98% accuracy at just 17.5% of the cloud API costs. beyond excited to drop minions: where local lms meet cloud lms 😊 joint work w/ Sabri Eyuboglu & Dan Biderman at @hazyresearch. ty Together AI,