Sudharshan Suresh

@suddhus

Research Scientist @BostonDynamics Atlas. Teaching humanoids a few tricks. Prev: @AIatMeta and PhD at @CMU_Robotics.


http://suddhu.github.io

21-04-2009 14:31:26

261 Tweets

900 Followers

1.1K Following

Atlas is demonstrating reinforcement learning policies developed using a motion capture suit. This demonstration was developed in partnership with Boston Dynamics and RAI Institute.

New work from the Robotics team at AI at Meta. Want to be able to tell your robot to bring you the keys from the table in the living room? Try out Locate 3D! Interactive demo: locate3d.atmeta.com/demo. Model, code, and dataset: github.com/facebookresear…

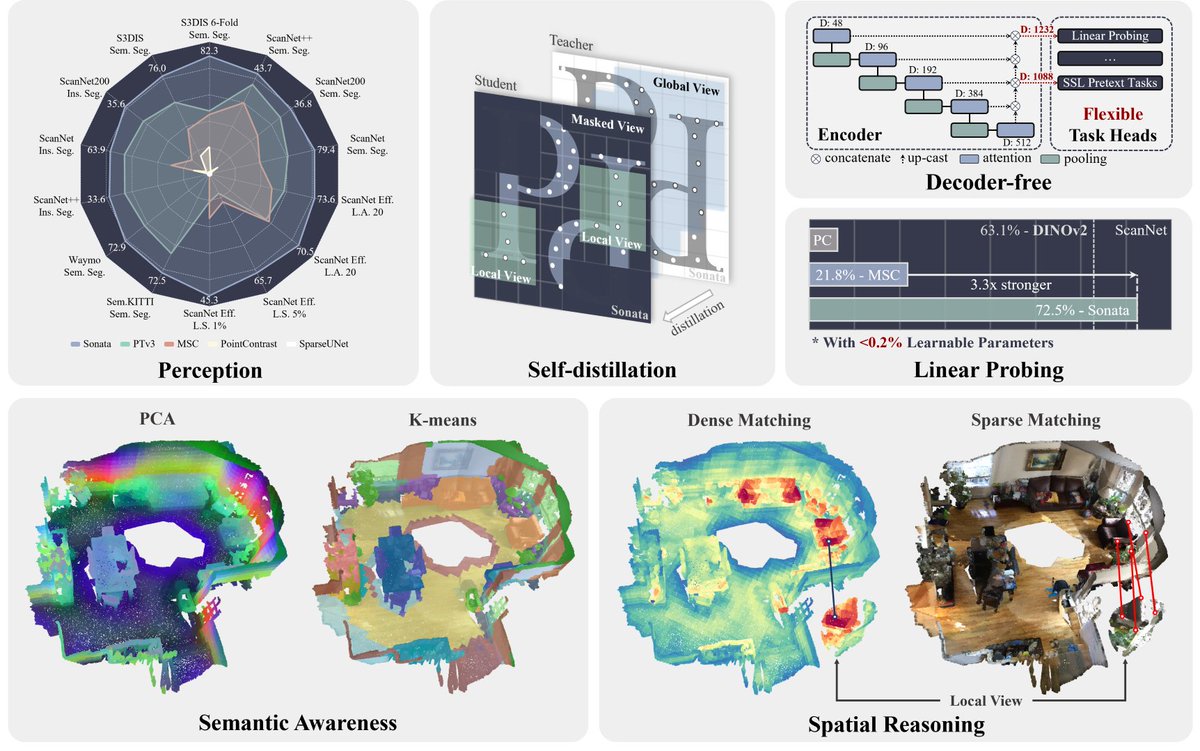

Check out the awesome follow-up to Sparsh by Akash Sharma and others: self-supervised learning for a variety of downstream tasks with tactile skins!

TRI's latest Large Behavior Model (LBM) paper landed on arXiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the