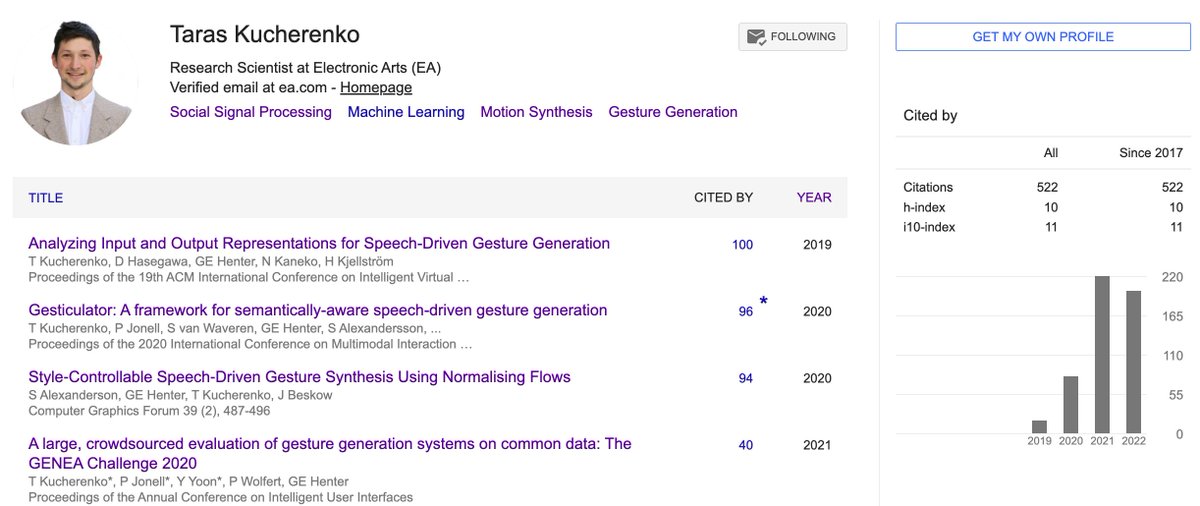

ML Research Scientist @SEED, @EA. My research is on using #machinelearning for #animation of virtual agents. Effective altruist. Opinions are mine. 🇺🇦

ID: 703298860668604417

https://svito-zar.github.io/ 26-02-2016 19:21:29

329 Tweets

433 Followers

240 Following

It was my pleasure to give a tutorial on gesture generation at the WASP Research Summer School 2022. Students made a full story using ML: text using GPT-3, then text2speech and speech2gestures. Here are some examples: youtube.com/playlist?list=… @WASPresearch

Do gestures really affect how people perceive animated characters? And if so, how do you measure it? SEED’s own 🇺🇦 Taras Kucherenko @[email protected] co-authored a paper for The IVA Conference that shows how to test people's reactions to gesturing characters. Check it out! #IVA22 ea.com/seed/news/eval…

Looking forward to hosting the @WorkshopGenea at ACM ICMI Official in India later this year. Grateful to SEED for sponsoring those amazing T-shirts and to WASP Research for sponsoring their design.

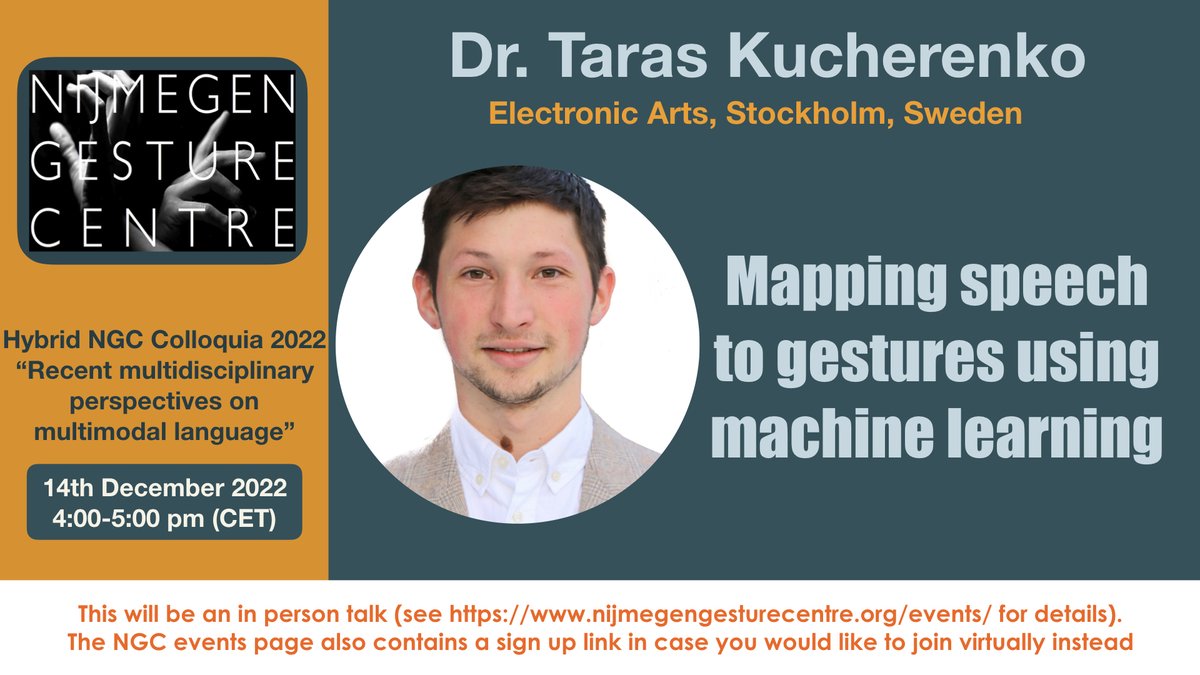

We are very happy to announce the last NGC Nijmegen Gesture Center talk of 2022, on 14 Dec at 16:00 CET, by Dr. Taras Kucherenko (🇺🇦 Taras Kucherenko @[email protected]), who works at Electronic Arts. This is a hybrid talk: it will be given in person at MaxPlanck-Psycholinguistics, but it can also be watched on Zoom. To sign up: sites.google.com/view/nijmegeng…

Multimodallang Dept @MPİ_NL (MaxPlanck-Psycholinguistics) is HIRING!! We are looking for a software engineer/machine learning specialist who will help develop tools to automatically recognise speech and gestures in big corpora, in collaboration with Peter Uhrig, @esam__ghaleb and others: mpi.nl/career-educati…

Finally, I am on Mastodon: @[email protected] Please follow me there as I intend to leave Twitter #Mastodon #mastodonmigration #FreedomOfSpeech

Looking forward to the GENEA Challenge 2023 at ACM ICMI Official

Lifelike and emotive gestures are a huge part of making believable human game characters. SEED’s own 🇺🇦 Taras Kucherenko @[email protected] has co-authored this terrific paper for #Eurographics2023 that surveys the landscape of gesture generation driven by data. Check it out! ea.com/seed/news/seed…