Tom Jobbins

@theblokeai

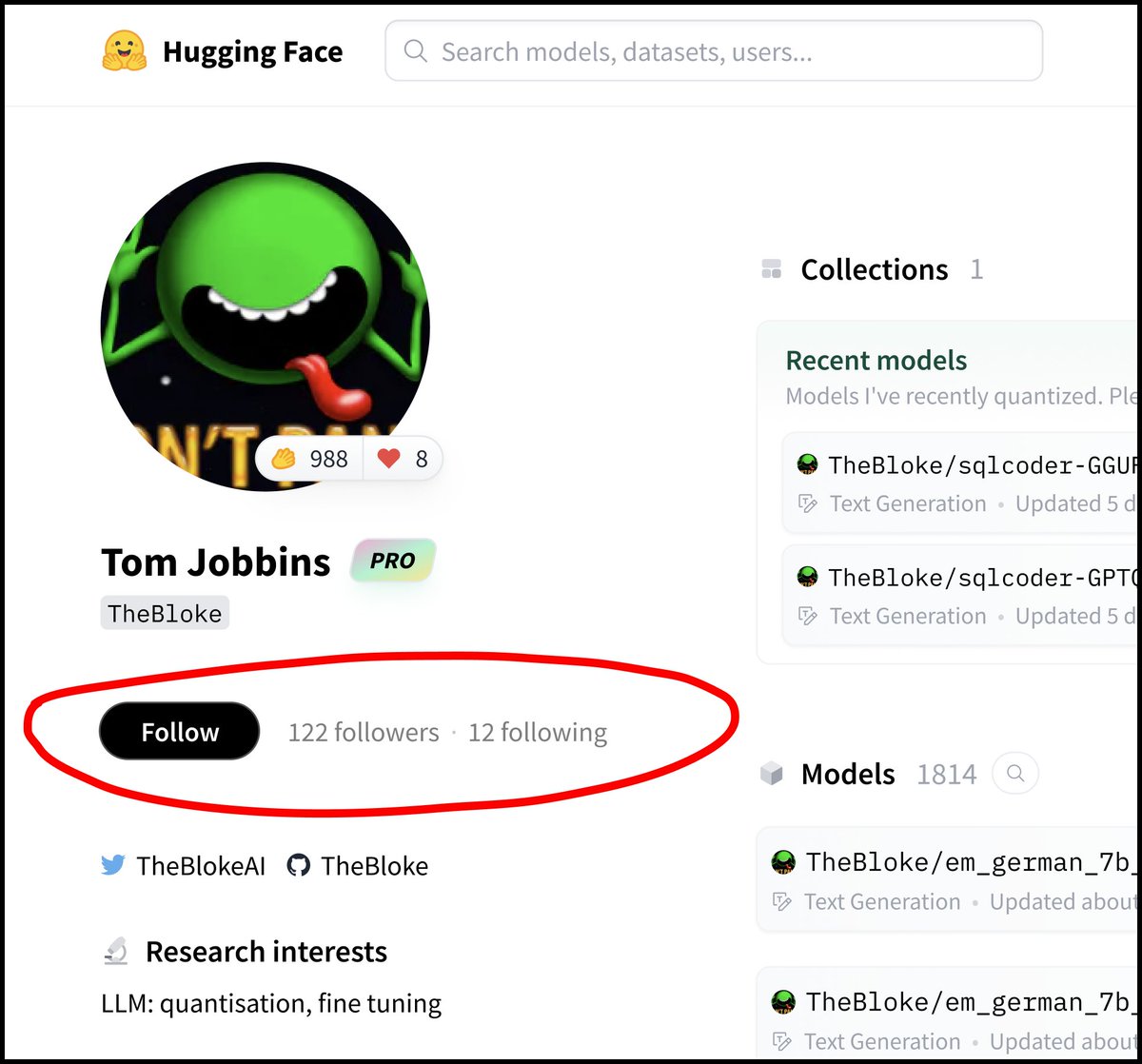

My Hugging Face repos: huggingface.co/TheBloke

Discord server: discord.gg/theblokeai

Patreon: patreon.com/TheBlokeAI

ID: 161524644

https://www.patreon.com/TheBlokeAI 01-07-2010 02:27:07

336 Tweets

15.15K Followers

229 Following

Meta's CodeLlama is here! ai.meta.com/blog/code-llam… 7B, 7B-Instruct, 7B-Python, 13B, 13B-Instruct, 13B-Python, 34B, 34B-Instruct, 34B-Python. First time we've seen the 34B model. I've got a couple of fp16s up: huggingface.co/TheBloke/CodeL… huggingface.co/TheBloke/CodeL… More coming soon, obvs.

Just released by Tav: Pygmalion 2, the sequel to one of the most popular models ever! And Mythalion, a new Gryphe merge! huggingface.co/TheBloke/Pygma… huggingface.co/TheBloke/Pygma… huggingface.co/TheBloke/Pygma… huggingface.co/TheBloke/Pygma… huggingface.co/TheBloke/Mytha… huggingface.co/TheBloke/Mytha…

Chronos 70B v2 release! Thanks to Pygmalion for generously providing the compute and Tom Jobbins for quantizing the model. As usual, the model is optimized for chat, roleplay, and storywriting, and now includes vastly improved reasoning skills. huggingface.co/elinas/chronos…

This new filter 🔎 on Hugging Face user profiles is very helpful, especially to check if Tom Jobbins has quantized and released the latest trending models 😁

New feature alert in the Hugging Face ecosystem! Flash Attention 2 is now natively supported in Hugging Face transformers, including training with PEFT and quantization (GPTQ, QLoRA, LLM.int8). First `pip install flash-attn`, then pass `use_flash_attention_2=True` when loading the model!
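A minimal sketch of what the tweet above describes, assuming the `transformers`, `torch`, and `flash-attn` packages are installed and a supported GPU is available. The helper names `flash_attention_kwargs` and `load_with_flash_attention` are hypothetical and only for illustration. The tweet names the `use_flash_attention_2=True` flag; later `transformers` releases spell the same request as `attn_implementation="flash_attention_2"`, so the sketch can produce either form.

```python
def flash_attention_kwargs(legacy_flag: bool = True) -> dict:
    """Build the keyword arguments that ask from_pretrained for Flash Attention 2.

    legacy_flag=True uses the flag named in the tweet; legacy_flag=False uses
    the attn_implementation argument found in later transformers releases.
    """
    if legacy_flag:
        return {"use_flash_attention_2": True}
    return {"attn_implementation": "flash_attention_2"}


def load_with_flash_attention(model_id: str, legacy_flag: bool = True):
    """Hypothetical helper: load a causal LM with Flash Attention 2 requested."""
    # Imported lazily so the kwargs helper above works without these packages.
    import torch
    from transformers import AutoModelForCausalLM

    return AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # Flash Attention 2 expects fp16 or bf16 weights
        **flash_attention_kwargs(legacy_flag),
    )


print(flash_attention_kwargs())
print(flash_attention_kwargs(legacy_flag=False))
```

Calling `load_with_flash_attention` with a model ID of your choice would download and load that model, so it is left out of the runnable part of the sketch.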

Thanks again to Latitude.sh for the loan of a beast 8xH100 server this week. I uploaded over 550 new repos, maybe my busiest week yet! Quanting is really resource intensive. Needs not only fast GPUs, but many CPUs, lots of disk, and 🚀 network. A server that ✅ all is v. rare!

oh hello Tom Jobbins I want to bookmark your 'Recent models' Collection on Hugging Face 🔥 Well... you can now upvote Collections! and browse upvoted collections on your profile ❤️

Blazing fast text generation using AWQ and fused modules! 🚀 Up to 3x speedup compared to native fp16, which you can use right now on any model supported by Tom Jobbins. Simply pass an `AwqConfig` with `do_fuse=True` to the `from_pretrained` method! huggingface.co/docs/transform…
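A rough sketch of the fused-module loading mentioned above, assuming `transformers` and the `autoawq` package are installed and a CUDA GPU is available. The helper names and the default `max_seq_len` of 512 are hypothetical choices for illustration. `fuse_max_seq_len` is included because `AwqConfig` needs a maximum sequence length when fusing is enabled.

```python
def fused_awq_settings(max_seq_len: int = 512) -> dict:
    """Return the AwqConfig fields that turn on fused modules.

    max_seq_len should cover prompt plus generated tokens; 512 is an
    arbitrary illustrative default, not a recommendation from the source.
    """
    return {"do_fuse": True, "fuse_max_seq_len": max_seq_len}


def load_fused_awq(model_id: str, max_seq_len: int = 512):
    """Hypothetical helper: load an AWQ-quantized model with fused modules."""
    # Imported lazily so the settings helper above works without transformers.
    from transformers import AutoModelForCausalLM, AwqConfig

    quantization_config = AwqConfig(bits=4, **fused_awq_settings(max_seq_len))
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=quantization_config,
    )
    return model.to(0)  # move to the first CUDA device


print(fused_awq_settings(1024))
```

The model ID passed to `load_fused_awq` would need to point at a repo that already contains AWQ-quantized weights.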

Tom Jobbins joined me to share his work in the open-source AI space - don't miss it! Happening right now. Server link: discord.gg/peBrCpheKE (see the general channel or events channel for the Google Meet link)

FYI to anyone using Mistral AI's Mixtral for long context tasks -- you can get even better performance by disabling sliding window attention (setting it to your max context length): `config.sliding_window = 32768`
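The tip above boils down to one attribute assignment on the model config. Here is a small sketch of it, using a `SimpleNamespace` as a stand-in for a real config object so it runs without any model download. The helper name `disable_sliding_window` and the starting value of 4096 are illustrative assumptions. With `transformers` installed, the same assignment would presumably be made on a config loaded via `AutoConfig.from_pretrained` before the model is loaded.

```python
from types import SimpleNamespace


def disable_sliding_window(config, max_context_length: int):
    """Set the attention window to the full context length.

    With the window as large as the context, no tokens fall outside it,
    which is what the tweet means by disabling sliding window attention.
    """
    config.sliding_window = max_context_length
    return config


# Stand-in for a loaded model config; the starting value is illustrative.
config = SimpleNamespace(sliding_window=4096, max_position_embeddings=32768)

disable_sliding_window(config, max_context_length=32768)
print(config.sliding_window)
```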