Thibault Sellam

@thiboibo

Listing issues in NLG evaluations turned into a 25-page survey! In “Repairing the Cracked Foundation: A Survey of Obstacles in Evaluation Practices for Generated Text”, Thibault Sellam, Elizabeth Clark, and I cover 250+ papers. 📄Link: arxiv.org/abs/2202.06935 Want to learn more?👇

My colleagues Chris Alberti, Kuzman Ganchev and I are looking for a fall intern. The topic is improving language technologies for underrepresented languages by leveraging large pretrained language models. The position could be in NYC or remote. If interested, please reach out.

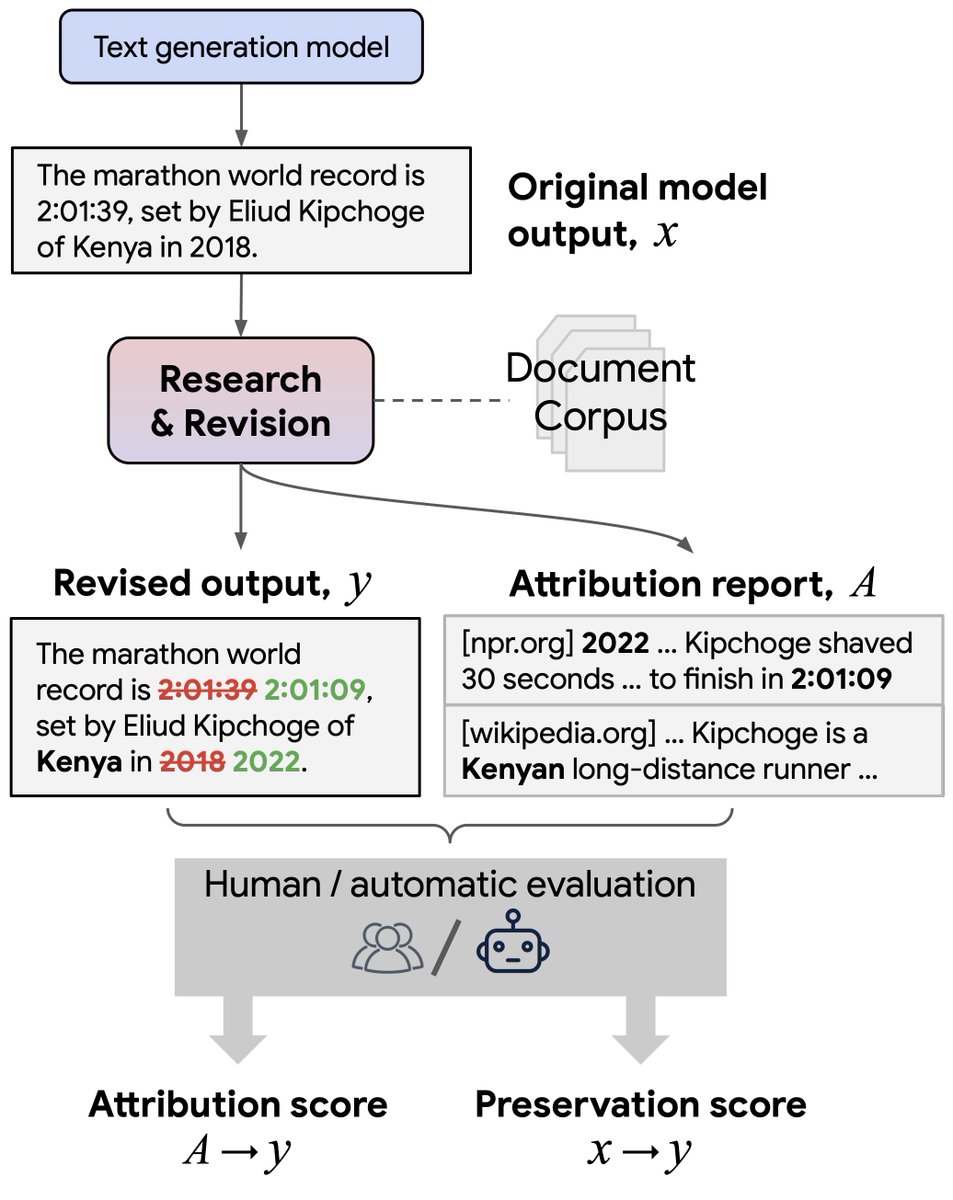

The Seahorse dataset is available - 96K ratings to train and evaluate new summarization metrics. Congrats Elizabeth Clark and team! Paper here: arxiv.org/abs/2305.13194 Models here: huggingface.co/collections/go…

Excited to share new work from Google DeepMind: “ProtEx: A Retrieval-Augmented Approach for Protein Function Prediction” biorxiv.org/content/10.110…