Thomas Scialom

@thomasscialom

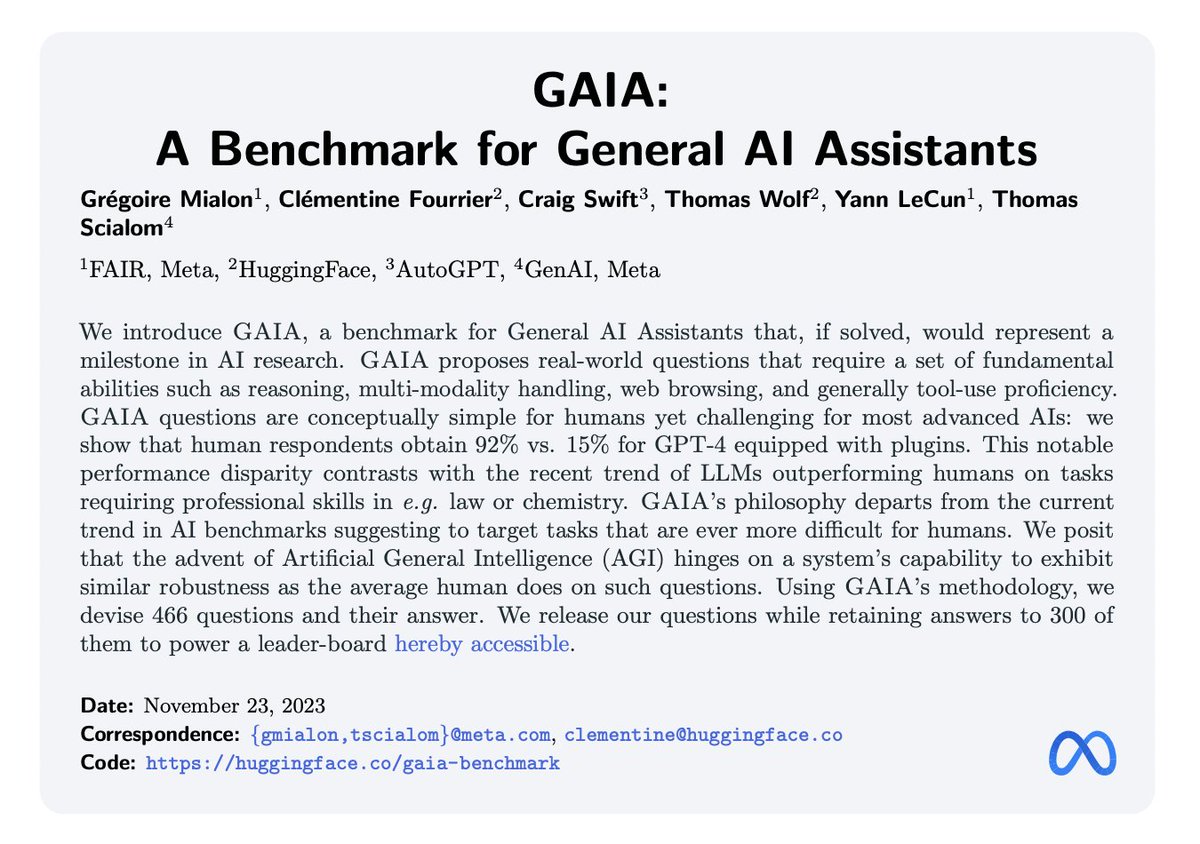

AGI Researcher @MetaAI -- I led Llama 2, built post-training from scratch. Also Toolformer, GAIA, Llama-3.0, CodeLlama, Galactica. Now working on Agents.

ID: 942694791707545600

https://www.linkedin.com/in/tscialom/ 18-12-2017 09:55:27

1.1K Tweets

7.7K Followers

224 Following

In fact, the Perplexity demo uses a specific system prompt that amplifies overly safe responses. It has been removed from other demos like HF. Perplexity, Denis Yarats, could we deactivate it by default there as well, please?

We had a small party to celebrate Llama-3 yesterday in Paris! The entire LLM OSS community joined us, with Hugging Face, kyutai, Google DeepMind (Gemma), and Cohere. As someone said: better that the building remains safe, or ciao the open source for AI 😆

🆕 pod with Thomas Scialom of AI at Meta!
Llama 2, 3 & 4: Synthetic Data, RLHF, Agents on the path to Open Source AGI
latent.space/p/llama-3
shoutouts:
- Why Yann LeCun's Galactica Instruct would have solved Lucas Beyer (bl16)'s Citations Generator
- Beyond Chinchilla-Optimal: 100x