TimDarcet

@timdarcet

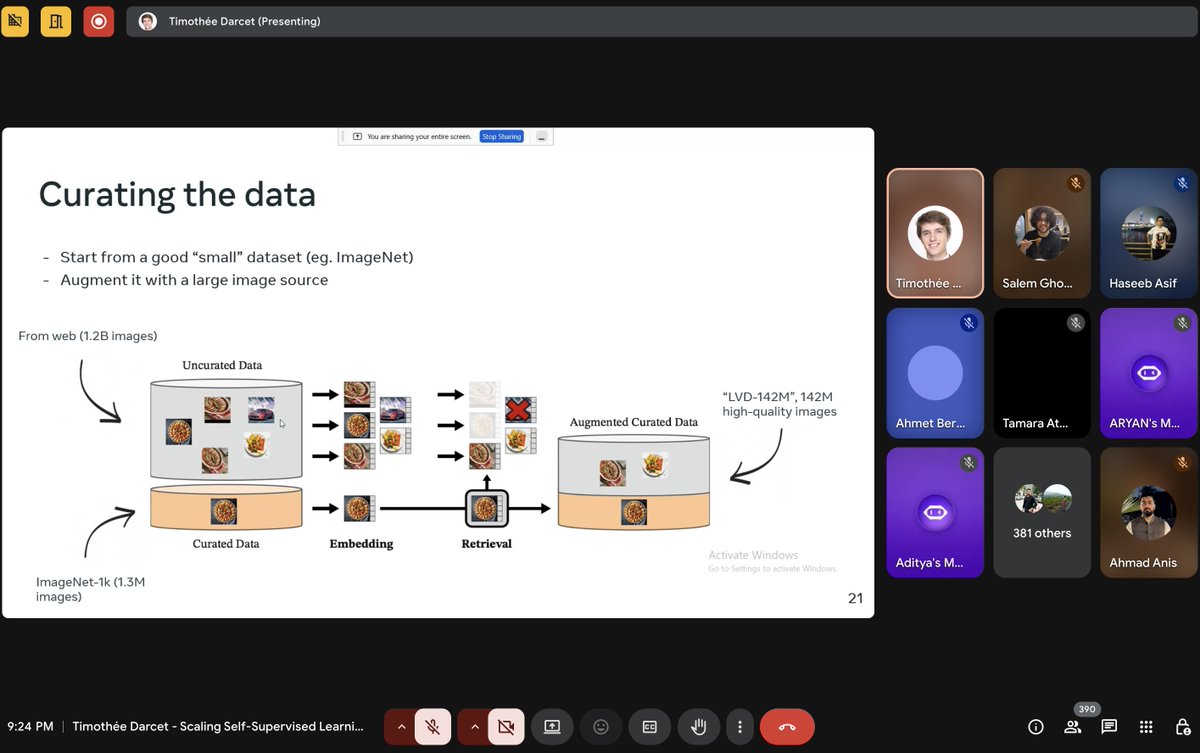

PhD student, building big vision models @ INRIA & FAIR (Meta)

ID: 1371396662925606913

15-03-2021 09:44:31

982 Tweets

3.3K Followers

728 Following

FFS Hugging Face, please stop doing that; it makes you look like pretentious assholes.

Why does Meta open-source its models? I talked about it with Maciej Kawecki (This Is IT), looking at Dino, our computer vision model with applications in forest mapping, medical research, agriculture and more. Open source boosts AI access, transparency, and safety. youtube.com/watch?v=eNGafi…