TISMIR journal

@tismirj

Transactions of the International Society for Music Information Retrieval (TISMIR). Official Twitter account.

ID: 1053906088343547905

http://transactions.ismir.net 21-10-2018 07:09:26

327 Tweets

960 Followers

116 Following

Congratulations to Kristina Matrosova, Manuel Moussallam, Thomas Louail, and Olivier Bodini for their paper "Depict or Discern? Fingerprinting Musical Taste from Explicit Preferences" published in TISMIR journal! 👏 Paper link: transactions.ismir.net/articles/10.53…

NEW: Dataset paper by Simon Schwär, Michael Krause, Michael Fast, Sebastian Rosenzweig, Frank Scherbaum, and Meinard Müller: "A Dataset of Larynx Microphone Recordings for Singing Voice Reconstruction": 4h of pop with guitar + differentiable SP source/filter method. transactions.ismir.net/articles/10.53…

NEW: Overview article by Stefan Uhlich and 16 co-authors (Gio Fabbro, Jonathan Le Roux, Dipam Chakraborty, Sharada Mohanty, Kai Li, Yuki Mitsufuji…): "The Sound Demixing Challenge 2023 – Cinematic Demixing Track". Sound Demixing Challenge: setup, structure of the competition, datasets… transactions.ismir.net/articles/10.53…

We are happy to announce that our two papers summarizing the Sound Demixing Challenge 2023 have been published in TISMIR journal! Thank you to everyone for their hard work! Music Track: transactions.ismir.net/articles/10.53… Cinematic Track: transactions.ismir.net/articles/10.53…

If you wish to familiarize yourself with recent strong models for sound separation, look into our journal papers in TISMIR journal that just came out this week. Those are reports on the Sound Demixing Challenge 2023, where I served as the general chair. Music Track: transactions.ismir.net/articles/10.53…

NEW: Overview article by Gio Fabbro and 26 co-authors (Chieh-Hsin (Jesse) Lai, WOOSUNG CHOI, Marco Martínez, Geraldo Ramos, Alexandre Défossez, Dipam Chakraborty, Sharada Mohanty, nabarun goswami, Tatsuya Harada, Jiafeng Liu, 封子川…): "The Sound Demixing Challenge 2023 – Music Demixing Track" Sound Demixing Challenge transactions.ismir.net/articles/10.53…

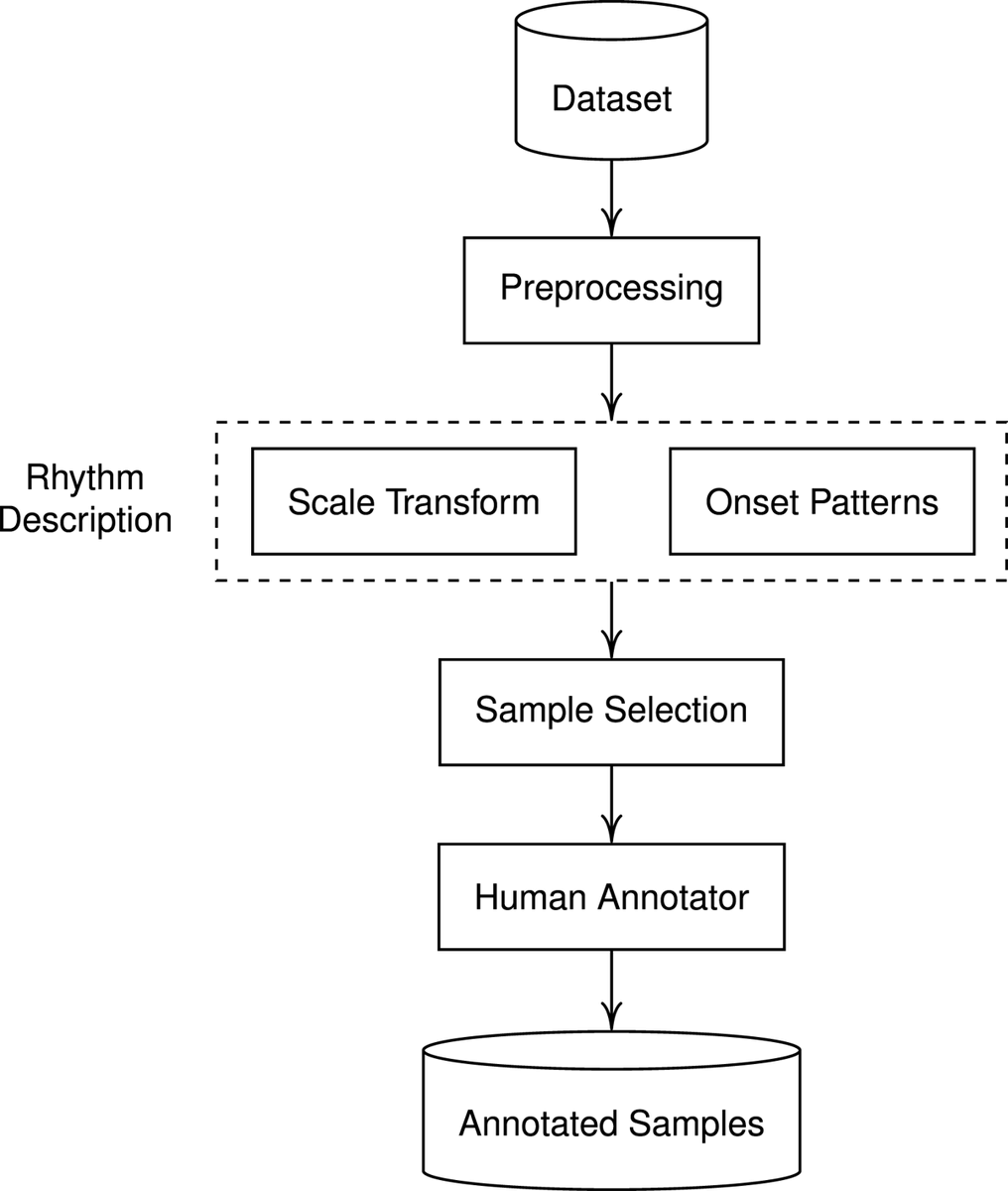

NEW: Research paper by Lucas Maia, Martín Rocamora, Luiz W. P. Biscainho, and Magdalena Fuentes: "Selective Annotation of Few Data for Beat Tracking of Latin American Music Using Rhythmic Features", based on rhythmic features and constrained selection methods. transactions.ismir.net/articles/10.53…

🎉 Exciting news! Our latest article on beat tracking with few data has been published in the TISMIR journal! 📜 article: transactions.ismir.net/articles/10.53… 💻 code: github.com/maia-ls/tismir…

We explore the problem of annotation in culture-specific datasets, building upon our previous research: 📜 ISMIR 2022 (ISMIR Conference): zenodo.org/records/7385261, which showed that SOTA beat trackers yield good results with few training points, provided that the dataset is homogeneous.

NEW: Research article by Isabella Czedik-Eysenberg, Oliver Wieczorek, Arthur Flexer & Christoph Reuter: "Charting the Universe of Metal Music Lyrics and Analyzing Their Relation to Perceived Audio Hardness", via a topic model based on 124,288 song lyrics. transactions.ismir.net/articles/10.53…