Tsendsuren

@tsendeemts

Research scientist at Google DeepMind | previously at Microsoft Research and postdoc at UMass. Views are my own. Most tweets in Mongolian 🇲🇳.

ID: 105984261

http://www.tsendeemts.com/ 18-01-2010 03:58:52

21.21K Tweets

4.4K Followers

587 Following

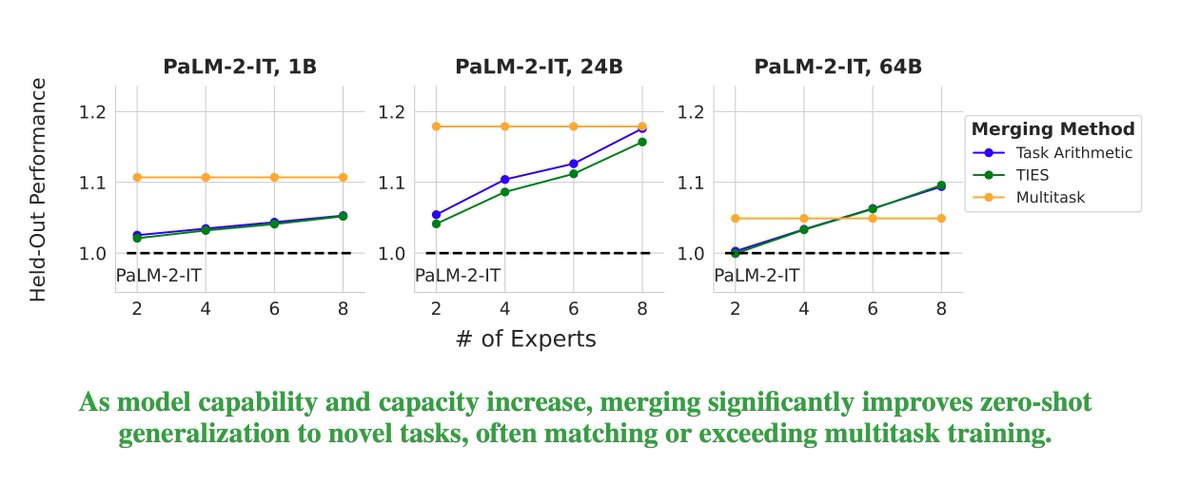

Excited to share that our paper on model merging at scale has been accepted to Transactions on Machine Learning Research (TMLR). Huge congrats to my intern Prateek Yadav and our awesome co-authors Jonathan Lai, Alexandra Chronopoulou, Manaal Faruqui, Mohit Bansal, and Tsendsuren 🎉!!

This work got accepted at Transactions on Machine Learning Research (TMLR). Congratulations to Prateek Yadav and my co-authors. Also, thank you to the reviewers and editors for their time.

Two of my former interns, Trapit Bansal and Prateek Yadav, are at Meta Superintelligence. Excited for what they are gonna build together 🥳

Tsendsuren Prateek Yadav I tried implementing one of your papers, "Infini Transformer", from scratch: github.com/ArionDas/Infin… But you never shared the code with me when I requested it 😄🙌