Umberto Cappellazzo

@umberto_senpai

Research Associate @ Imperial College London. Interests: efficient scaling of audio-visual LLMs, Mixture of Experts.

ID: 448124299

https://umbertocappellazzo.github.io/ 27-12-2011 17:02:49

12.12K Tweets

433 Followers

177 Following

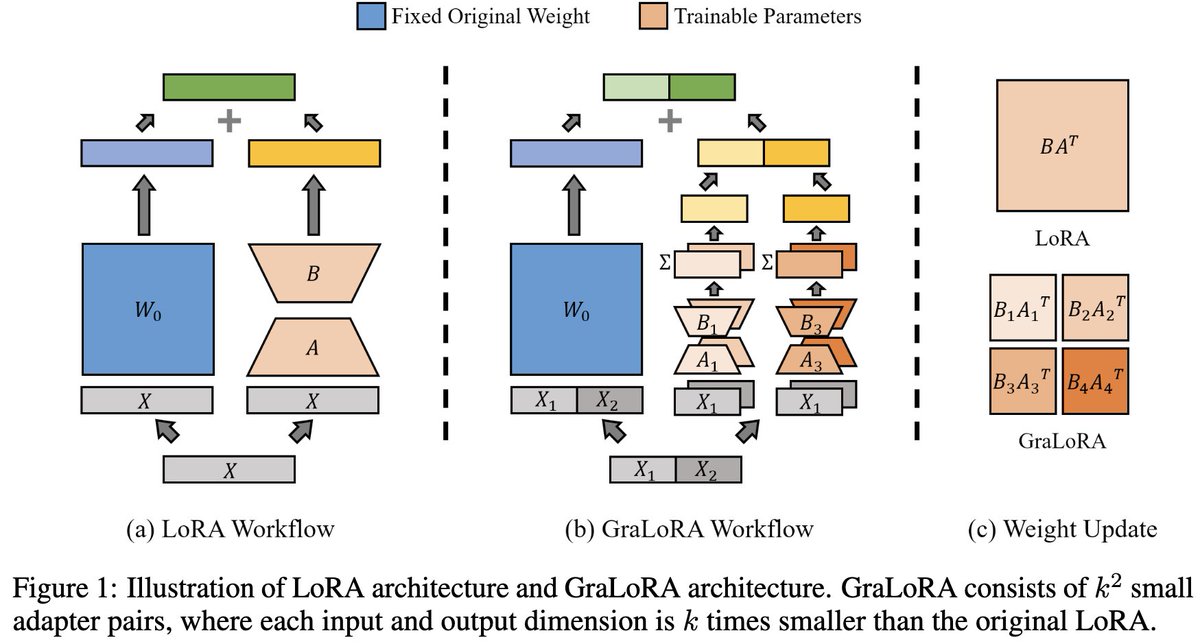

If you're attending IEEE ICASSP and are curious about how to efficiently leverage LLMs for audio-visual speech recognition, don't miss our paper! Stop by the Poster 2F area at 5pm local time. Minsu will be there to dive into the details with you! 🔍