Video & Image Sense Lab (VIS Lab)

@vislab_uva

Computer Vision research group at @UvA_Amsterdam directed by Cees Snoek (@cgmsnoek)

ID: 1788473121948712960

https://ivi.fnwi.uva.nl/vislab/ 09-05-2024 07:36:56

55 Tweets

42 Followers

29 Following

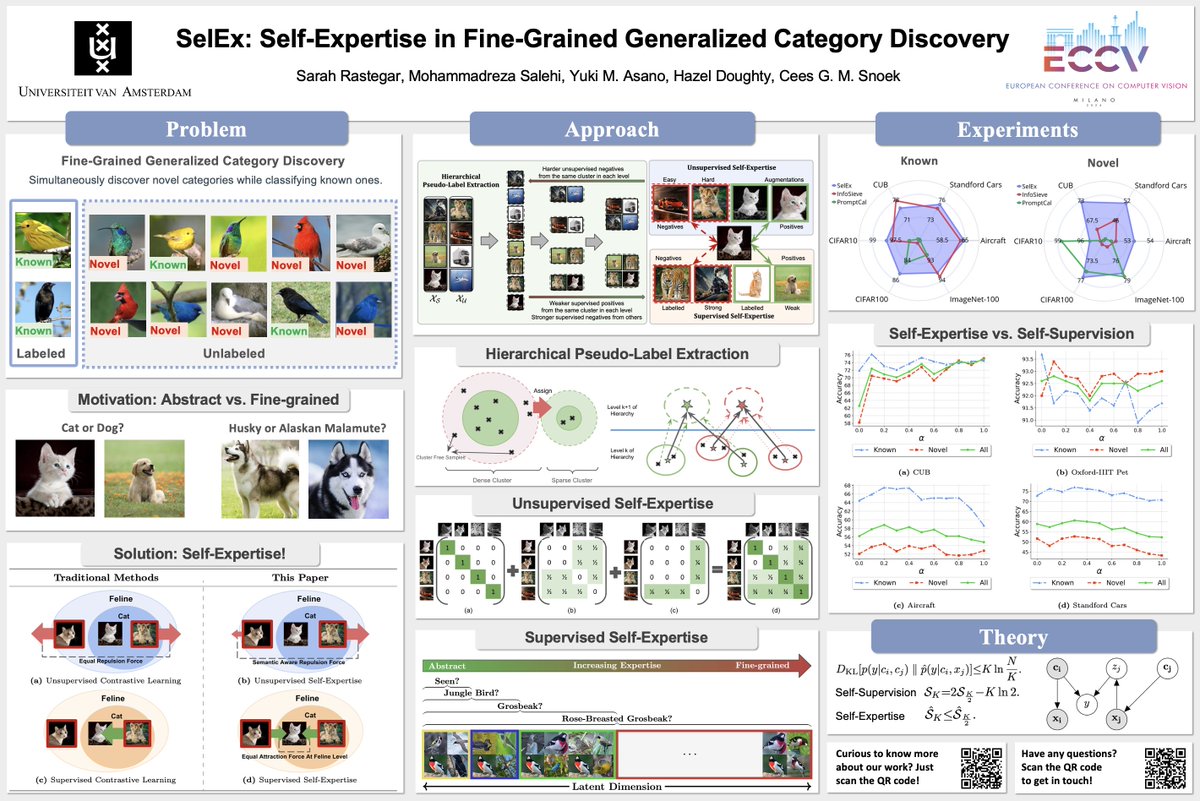

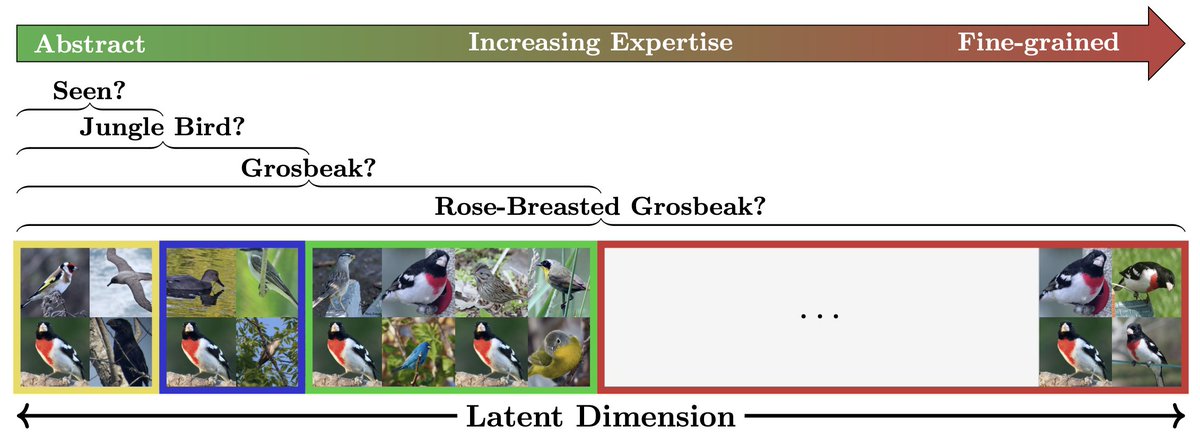

🚀 Excited to announce that our paper "SelEx: Self-Expertise in Fine-Grained Generalized Category Discovery" has been accepted to ECCV 2024! 🎉 Special thanks to my incredible coauthor mrz.salehi and my amazing supervisors Yuki, Hazel Doughty, and Cees Snoek🙏.

It's my first time organising a tutorial with an amazing team, and I am very excited 🎉! The topic is learning from videos, which I think will be the next big paradigm for vision foundation models. Come to learn, chat, and discuss at European Conference on Computer Vision #ECCV2026!

Interested in learning about the future of self-supervised learning? Don’t miss our workshop this Sunday at European Conference on Computer Vision #ECCV2026 with an incredible lineup of speakers! 🔥 Ishan Misra, Oriane Siméoni, Xinlei Chen, Olivier Hénaff, Yuki, and Yutong Bai. More details at sslwin.org

🚀 Excited to present SIGMA at European Conference on Computer Vision #ECCV2026! 🎉 We upgrade VideoMAE with Sinkhorn-Knopp on patch-level embeddings, pushing reconstruction toward more semantic features. With Michael Dorkenwald. Let’s connect at today's poster session at 4:30 PM, poster number 256, or send us a DM.

Stop by today and discuss our European Conference on Computer Vision #ECCV2026 paper (SelEx) with me, Hazel Doughty, and Cees Snoek! 🎉 We present self-expertise—an alternative to self-supervision for learning from unlabelled data with fine-grained distinctions and unknown categories. 📍 Poster #89 🕥 10:30 AM

Excited to announce that today I'm starting my new position at Technische Universität Nürnberg as a full Professor 🎉. I thank everyone who has helped me to get to this point, you're all the best! Our lab is called FunAI Lab, where we strive to put the fun into fundamental research. 😎 Let's go!

🇨🇦 Deeeelighted to share that this work got into #neurips2024. Many thanks to my dear friend and co-author David Wessels, as well as the rest of the team. Solving PDEs in continuous space-time with Neural Fields on cool geometries while respecting their inherent symmetries! 💫💫

The "Self-Supervised Learning: What is Next?" workshop at European Conference on Computer Vision #ECCV2026 had a great turnout with excellent talks. Slides for most talks are available at sslwin.org (soon all 🤞). Thanks to all attendees, speakers, and co-organizers for making it a fantastic event!

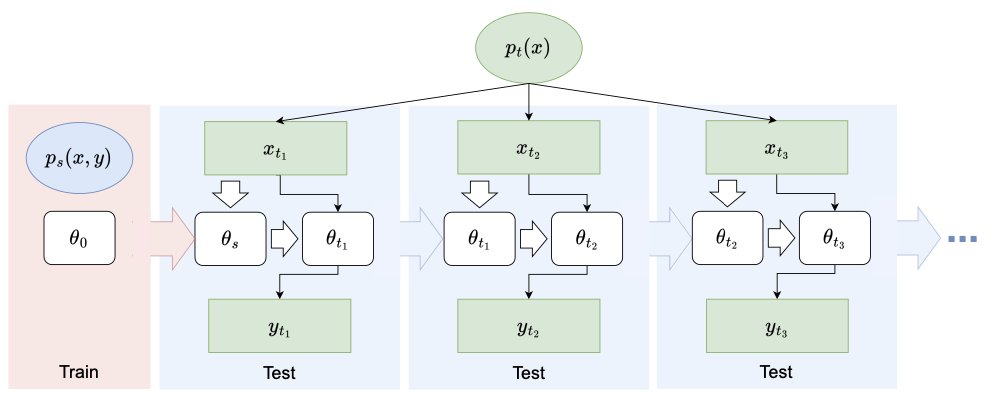

📢📢 "Beyond Model Adaptation at Test Time: A Survey" by Zehao Xiao. TL;DR: we provide a comprehensive and systematic review of test-time adaptation, covering more than 400 recent papers 💯💯💯💯 🤩 #CVPR2025 #ICLR2025 arxiv.org/abs/2411.03687