VITA Group

@vitagrouput

VITA Group @ UT Austin w/ Prof Atlas Wang | vita-group.github.io | Run by VITA students (PI is busy changing diapers😄). Tweets only reflect personal views.

ID: 1650633177432563712

24-04-2023 22:50:20

480 Tweets

1.1K Followers

3.3K Following

Awesome to see PanEcho highlighted in a special podcast episode from JAMA! "This is the sort of thing that if 10 or 15 years ago you said we'd be able to do it, I suspect there would have been a lot of skepticism." Rohan Khera, Evangelos K. Oikonomou, CarDS Lab, VITA Group

And FORTUNE too! fortune.com/2025/10/22/ai-… 👏 Junyuan "Jason" Hong, Runjin Chen, Zhenyu (Allen) Zhang

![Junyuan "Jason" Hong (@hjy836) on Twitter photo New Finding: 🧠 LLMs Can Get Brain Rot (too)!

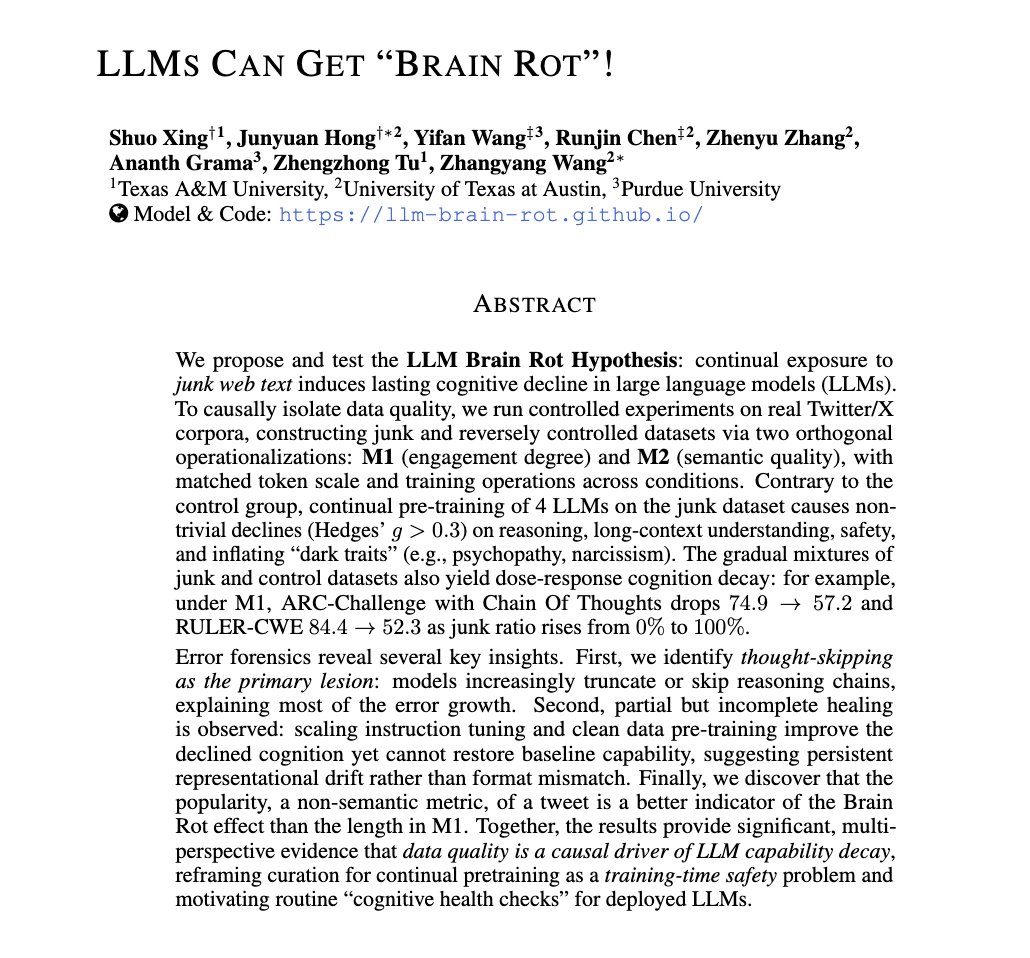

[1/6]🚨LLMs can suffer from “brain rot” when continually fed trivial, highly engaging Twitter/X content.

🧩Their reasoning, long‑context understanding, safety, and even personality traits persistently deteriorate. New Finding: 🧠 LLMs Can Get Brain Rot (too)!

[1/6]🚨LLMs can suffer from “brain rot” when continually fed trivial, highly engaging Twitter/X content.

🧩Their reasoning, long‑context understanding, safety, and even personality traits persistently deteriorate.](https://pbs.twimg.com/media/G3qSVklXEAAolv6.jpg)