Ouail Kitouni

@wkitouni

Member of technical staff @Anthropic prev @MIT @Meta @MSFTResearch

ID: 1159813920988762113

http://okitouni.github.io 09-08-2019 13:09:21

110 Tweets

65 Followers

95 Following

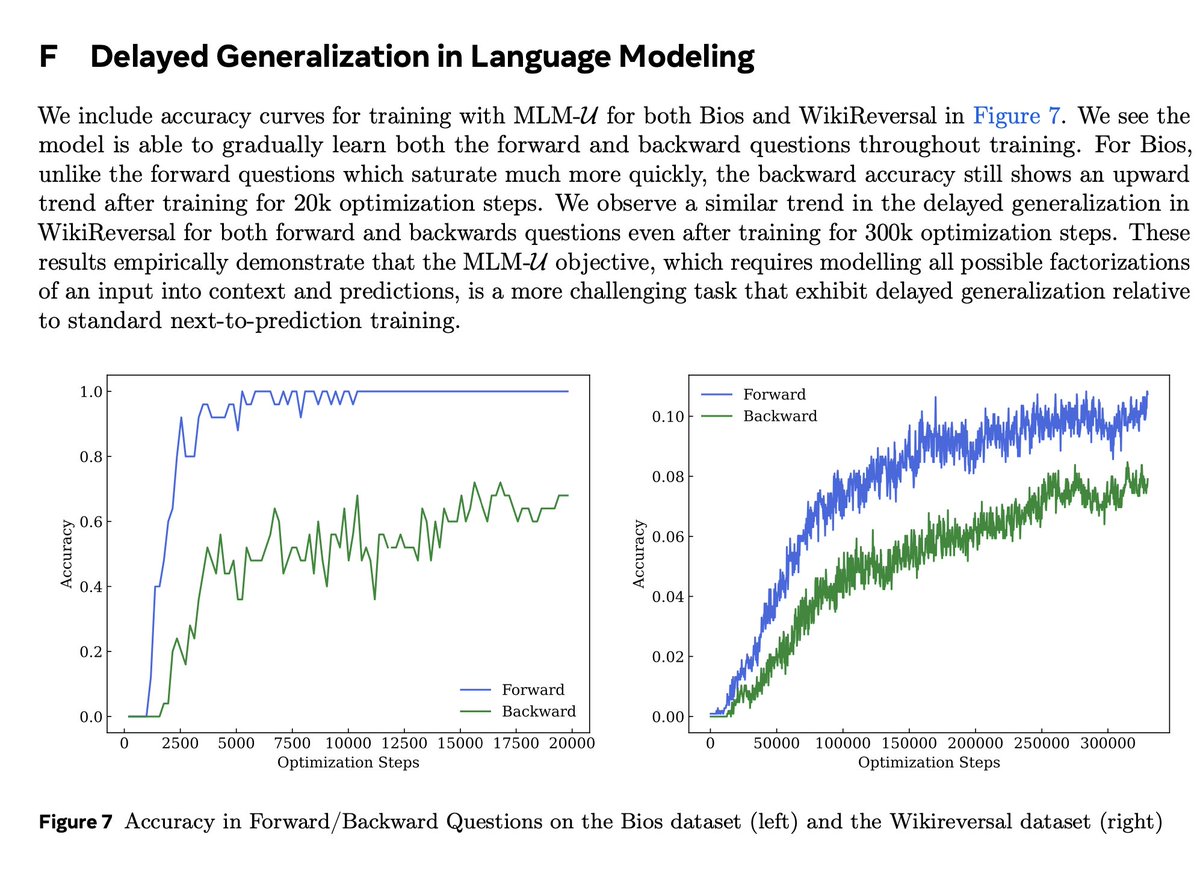

Thesis from Ilya Sutskever: "to predict the next word, you have to predict the world." Antithesis from Yann LeCun: "AR-LLMs suck! Reversal curse!" Synthesis from FAIR: a factorization-order-independent autoregressive model (MLM-U objective). (A paper subtweeting the Mistral PrefixLM thing?)