Weijia Shi

@weijiashi2

PhD student @uwnlp @allen_ai | Prev @MetaAI @CS_UCLA | 🏠 weijiashi.notion.site

ID: 1161707505497456640

14-08-2019 18:33:52

862 Tweets

7.7K Followers

1.1K Following

Can we build an operating system entirely powered by neural networks? Introducing NeuralOS: towards a generative OS that directly predicts screen images from user inputs. Try it live: neural-os.com Paper: huggingface.co/papers/2507.08… Inspired by Andrej Karpathy's vision. 1/5

Counting down the days until ACL! I'm hosting another ACL Mentorship session, diving into a timely topic: when it is so tempting to rely on AI for writing, what role do we play, and what might we be losing? #ACL2025NLP #NLProc

I'm gonna be recruiting students through both the Language Technologies Institute at @CarnegieMellon (NLP) and CMU Engineering & Public Policy for fall 2026! If you are interested in reasoning, memorization, AI for science & discovery, and of course privacy, you can catch me at ACL! Prospective students, please fill out this form:

Super excited for our new #ACL2025 workshop tomorrow on LLM Memorization, featuring talks by the fantastic Reza Shokri, Yanai Elazar, and Niloofar (✈️ ACL), and with a dream team of co-organizers: Johnny Tian-Zheng Wei, Verna Dankers, Pietro Lesci, Tiago Pimentel, Pratyush Maini, and Yangsibo Huang!

This was a really fun collab during my time at Databricks!! It's basically a product answer to the fact that: (1) people want to optimize their agents and specialize them for downstream preferences (no free lunch!), and (2) people don't have upfront training sets, or even

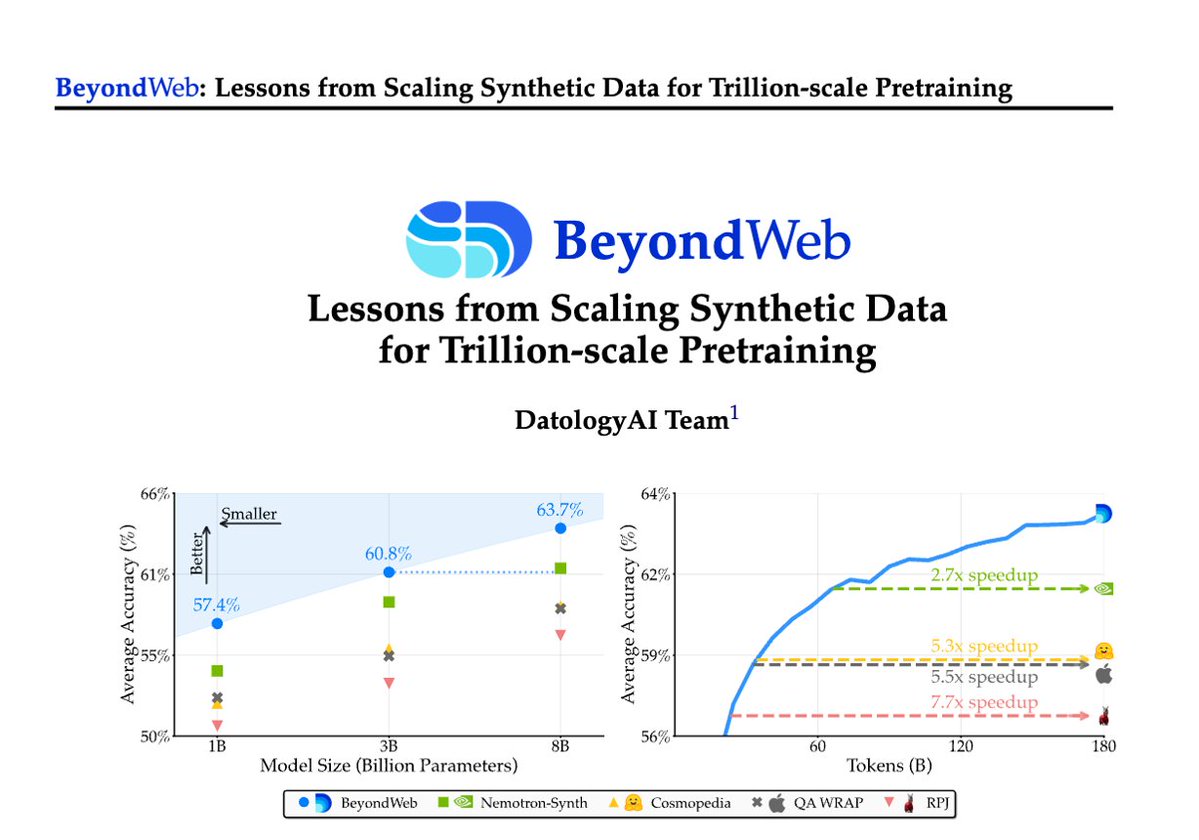

1/ Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach, and all the learnings from scaling it to trillions of tokens 🧑🏼‍🍳
- 3B LLMs beat 8B models 🚀
- Pareto frontier for performance

Yung-Sung Chuang (@yungsungchuang):

Scaling CLIP on English-only data is outdated now…

🌍 We built a CLIP data curation pipeline for 300+ languages

🇬🇧 We train MetaCLIP 2 without compromising English-task performance (it actually improves!)

🥳 It's time to drop the language filter!

📝 arxiv.org/abs/2507.22062

[1/5] 🧵

![Photo attached to Yung-Sung Chuang's tweet](https://pbs.twimg.com/media/GxHWT25agAAIWqD.jpg)