Wenjie Li

@wenjiewli

Neural Computation PhD student at @CarnegieMellon | prev: Research Scientist at @NYUDataScience with @LakeBrenden. Working on concept learning

ID: 1373061637742153729

http://wenjieli.me 19-03-2021 23:59:53

50 Tweets

119 Followers

318 Following

How should we build models more aligned with human cognition? To induce more human-like behaviors & representations, let’s train embodied, interactive agents in rich, ethologically-relevant environments. w/ Aran Nayebi, Sjoerd van Steenkiste, & Matt Jones psyarxiv.com/a35mt/

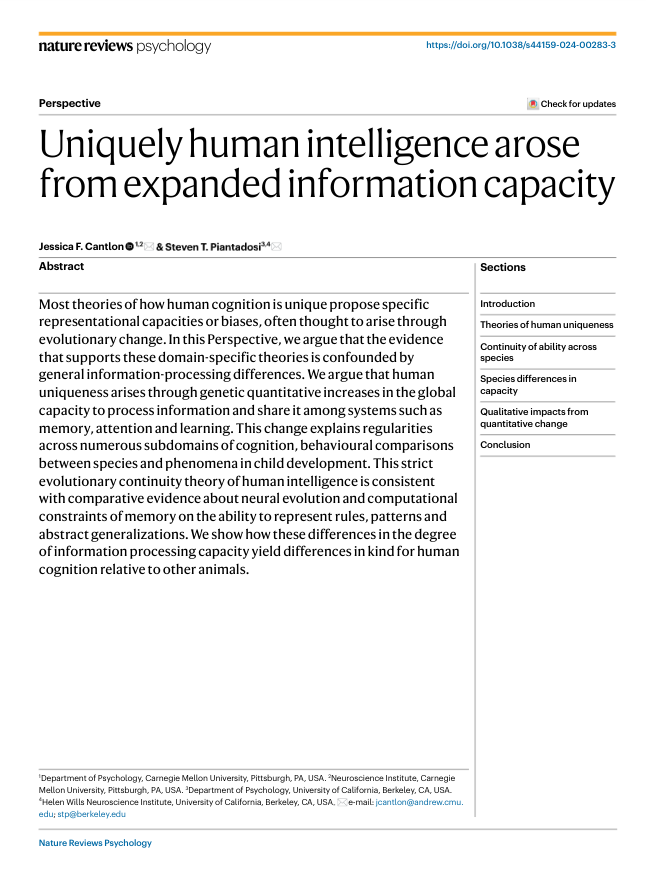

New perspective in Nature Reviews Psychology: human intelligence is a matter of scale of information processing, not genetic changes to one domain. Implications for AI, evolution, and development. - with @CantlonLab rdcu.be/dDoBt

Can LMs serve as cognitive models of human language processing? Humans make syntactic agreement errors ("the key to the cabinets are rusty"). Suhas Arehalli and I tested if the errors documented in six human studies emerge in LMs. They... sometimes did. direct.mit.edu/opmi/article/d…

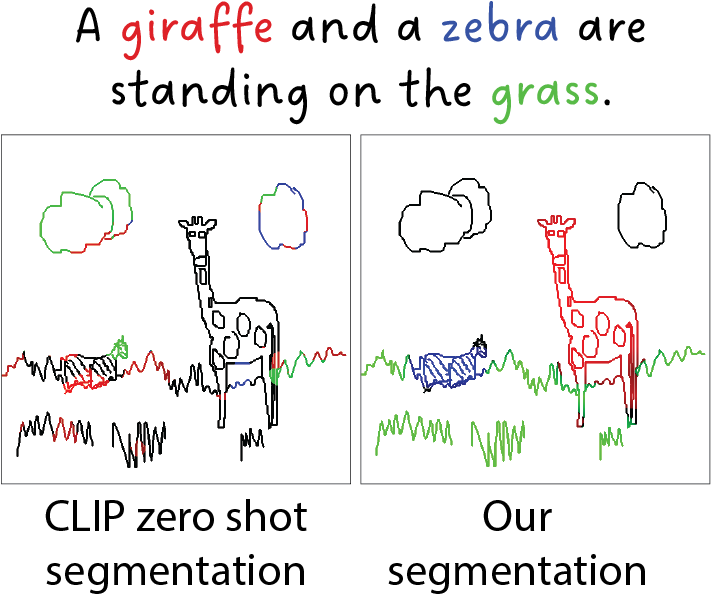

Lots more detail in the preprint here: arxiv.org/abs/2502.20349 Thanks to Wilka Carvalho for spurring and spearheading this work, and to the many others who gave us thoughtful feedback! This paper is definitely a work in progress, so comments and suggestions are welcome!

![Amirhossein Kazemnejad (@a_kazemnejad): 🚨Stop using positional encoding (PE) in Transformer decoders (e.g. GPTs). Our work shows 𝗡𝗼𝗣𝗘 (no positional encoding) outperforms all variants like absolute, relative, ALiBi, Rotary. A decoder can learn PE in its representation (see proof). Time for 𝗡𝗼𝗣𝗘 𝗟𝗟𝗠𝘀🧵[1/n]](https://pbs.twimg.com/media/FxiwwRtaQAkKi_8.png)