Wenqi Jia

@wenqi_jia

TYC➡️HK➡️ATL

Computer Vision @ GeorgiaTech

🇨🇳🇭🇰🇲🇴🇺🇸🇰🇭🇸🇬🇲🇾🇦🇺🇫🇷🇱🇺🇩🇰🇳🇴🇮🇸🇸🇪🇫🇮🇵🇱🇩🇪🇧🇪🇪🇸🇮🇱🇶🇦🇨🇦

ID: 705797226023383040

https://vjwq.github.io/

04-03-2016 16:49:06

31 Tweets

119 Followers

264 Following

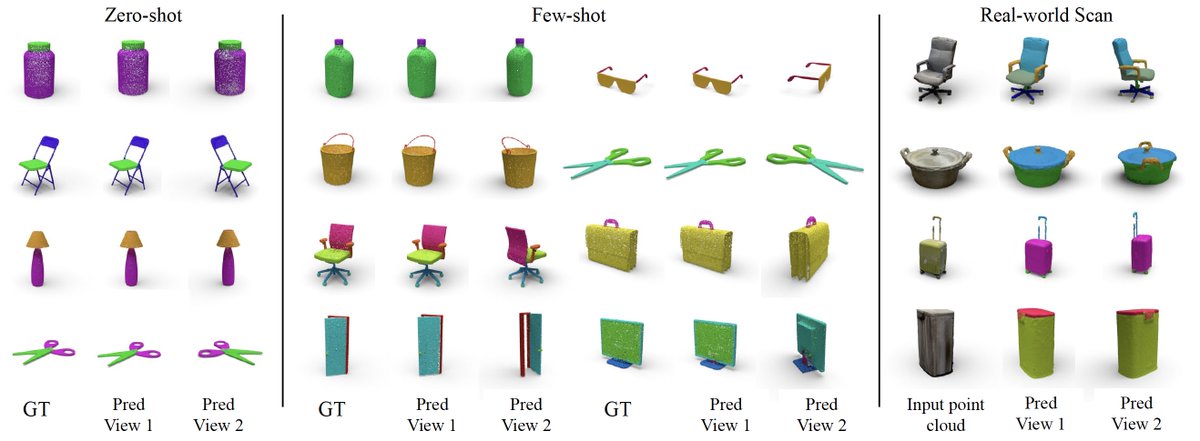

Our paper was awarded the Best Student Paper Prize at BMVC 2022 🎉 Thanks to my advisor James Matthew Rehg and all co-authors, Miao and Fiona Ryan. We have now released our data, code, and pretrained weights on GitHub (github.com/BolinLai/GLC), as well as a video demo on the project page.

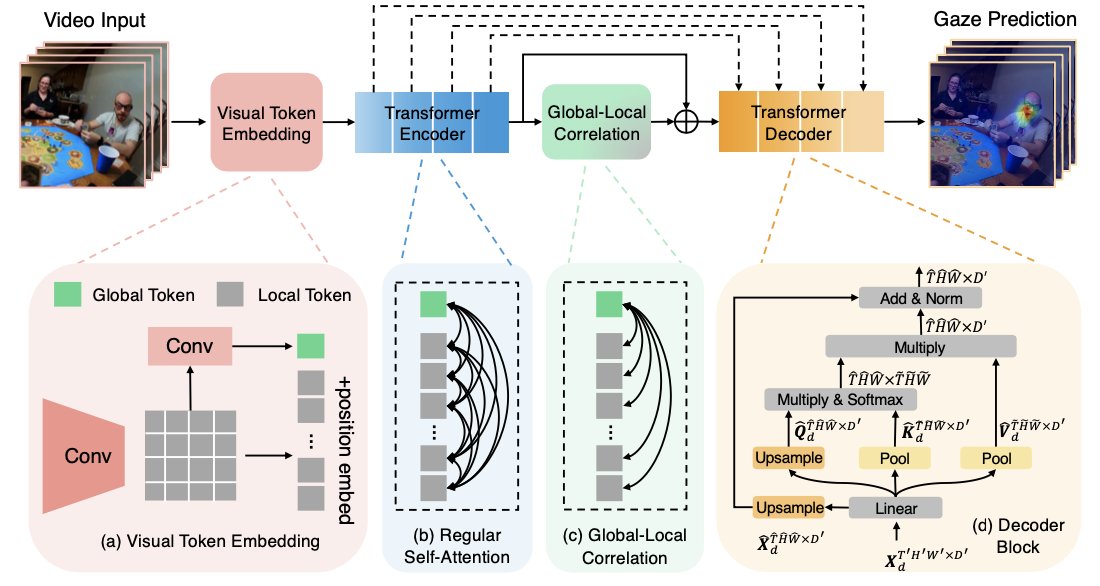

Introducing our #CVPR2023 paper "Egocentric Auditory Attention Localization in Conversations" (arxiv.org/abs/2303.16024) w/ Hao Jiang, Abhinav Shukla, James Matthew Rehg, Vamsi Ithapu. We introduce the task of localizing selective auditory attention targets from egocentric video & multichannel audio.

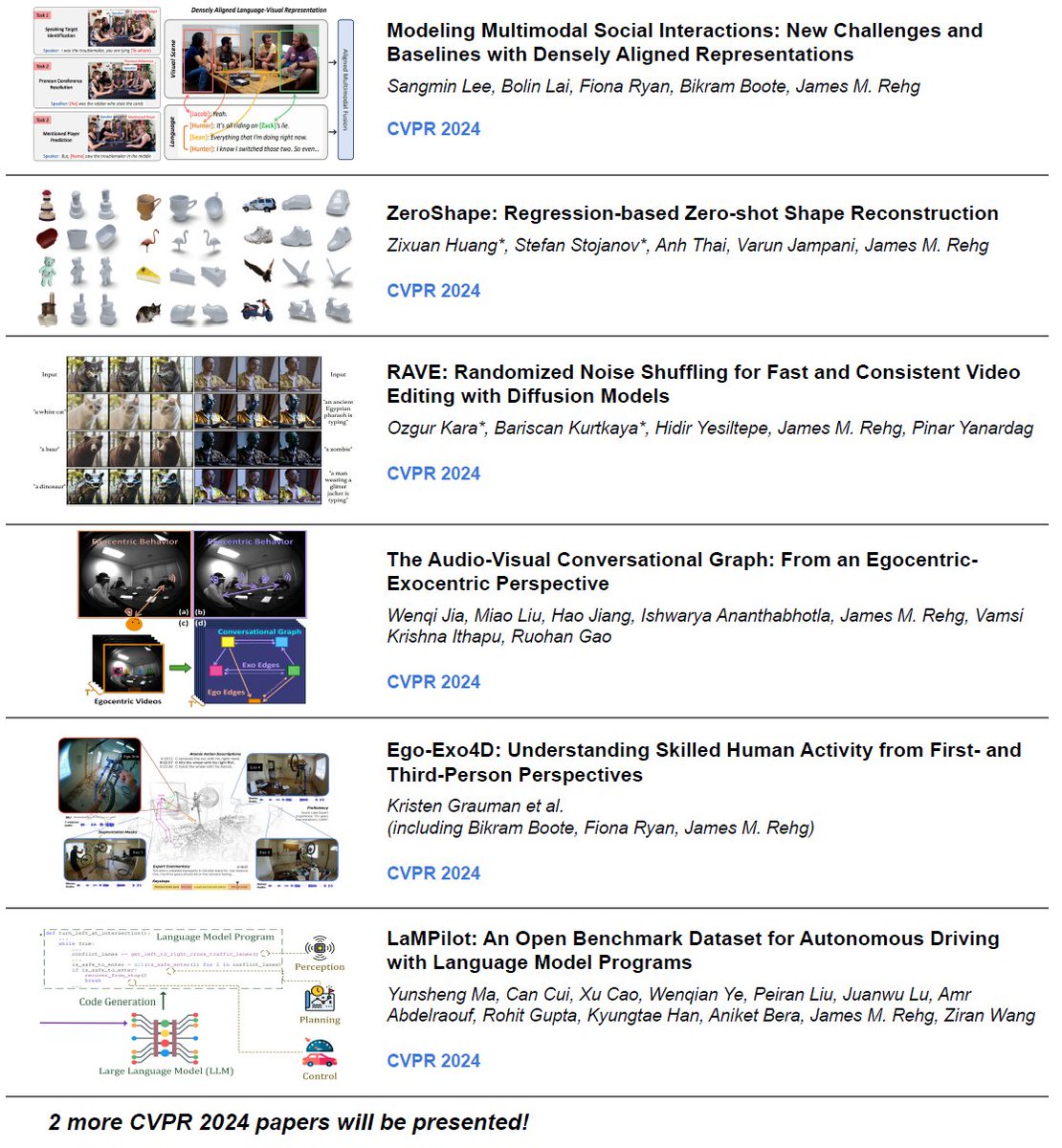

Delighted to share an overview of my lab's eight #cvpr 2024 papers. Thanks to my amazing postdoc Sangmin Lee for spearheading the effort and to our invaluable collaborators AI at Meta, Reality Labs at Meta, Stability AI, and Toyota USA. See you in Seattle! @IllinoisCS, The Grainger College of Engineering

While learning new skills, have you ever felt tired of reading the verbose manual or annoyed about the unclear instructions? Check out our #ECCV2024 work on generating egocentric (first-person) visual guidance tailored to the user's situation! [1/7] Page: bolinlai.github.io/Lego_EgoActGen/

📢#CVPR2025 Introducing InstaManip, a novel multimodal autoregressive model for few-shot image editing. 🎯InstaManip can learn a new image editing operation from textual and visual guidance via in-context learning, and apply it to new query images. [1/8] bolinlai.github.io/projects/Insta…

Very happy to be in Music City for #CVPR2025! My lab is presenting 7 papers, 4 of them selected as highlights. My amazing students Xu Cao, Zixuan Huang, Wenqi Jia, Bolin Lai, Xiang Li, Fiona Ryan and postdoc Sangmin Lee are here! Siebel School of Computing and Data Science, The Grainger College of Engineering

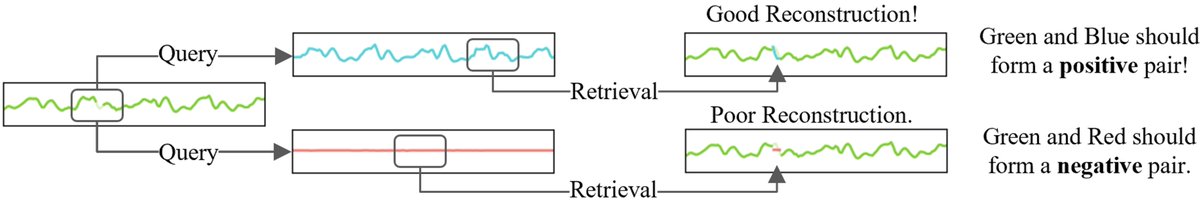

Excited to introduce our new #ECCV2024 work about audio-visual egocentric (first-person) gaze anticipation.

We propose the Contrastive Spatial-Temporal Separable (CSTS) fusion approach, the first model for multi-modal egocentric gaze modeling. [1/7]

Page: bolinlai.github.io/CSTS-EgoGazeAn…

![Bolin Lai (@bryanislucky) on Twitter photo](https://pbs.twimg.com/media/GRcn5tbb0AA8hAz.jpg)