Xiang Lisa Li

@xianglisali2

PhD student at Stanford

ID: 1134226884818919425

30-05-2019 22:35:37

40 Tweets

3.3K Followers

239 Following

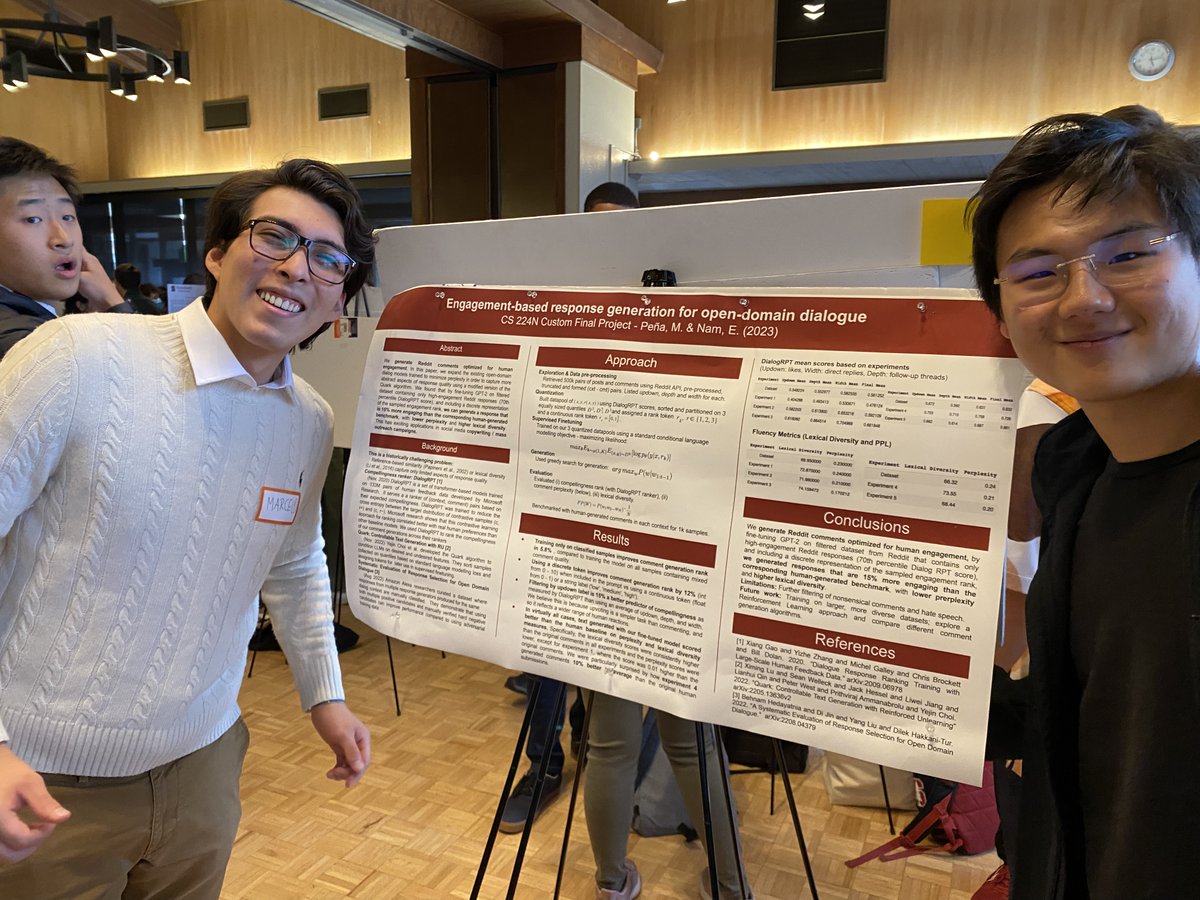

And here at the #cs224n #NLProc with Deep Learning poster session at Tresidder (Stanford University) is almost all of the (large!) teaching team.

Prompting is cool and all, but isn't it a waste of compute to encode a prompt over and over again? We learn to compress prompts up to 26x by using "gist tokens", saving memory+storage and speeding up LM inference: arxiv.org/abs/2304.08467 (w/ Xiang Lisa Li and noahdgoodman) 🧵

New from Google DeepMind: When can you trust your LLM? We show that LLMs consistently overestimate their own accuracy on some topics (e.g. nutrition) while underestimating it on others (e.g. math). Our Few-shot Recalibrator fixes LLM over/under-confidence: arxiv.org/abs/2403.18286 🧵

Lisa Li (Xiang Lisa Li) changes how people fine-tune (prefix tuning, the original PEFT), generate (diffusion LM, non-autoregressively), improve (GV consistency fine-tuning without supervision), and evaluate language models (using LMs). Prefix tuning: arxiv.org/abs/2101.00190

When Xiang Lisa Li built diffusion LMs in 2022 (arxiv.org/abs/2205.14217), we were interested in more powerful controllable generation (inference-time conditioning on an arbitrary reward), but inference was slow. Interestingly, the main advantage now is speed. Impressive to see