Xiaotian (Max) Han

@xiaotianhan1

Assistant Professor @ Case Western Reserve | CS Ph.D. @TAMU | Ex-Research Intern @Amazon @Meta @Snap | #machine_learning

ID: 1053627222647427072

http://ahxt.github.io/ 20-10-2018 12:41:20

178 Tweets

1.1K Followers

1.1K Following

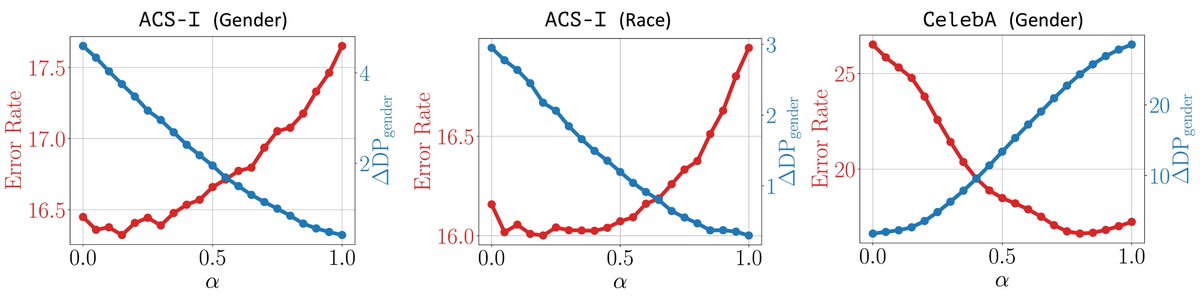

📢 New paper: "You Only Debias Once" (Oral, Conference on Parsimony and Learning (CPAL)). Train once, then adjust fairness flexibly at inference with no costly retraining! Instead of targeting a single fairness-optimal point in the weight space, YODO learns a line connecting the accuracy and fairness optima. Just select your
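The tweet describes learning a line in weight space between an accuracy optimum and a fairness optimum, then picking a point on that line at inference. A minimal sketch of only the selection step, assuming the line is parameterized linearly as `(1 - alpha) * w_acc + alpha * w_fair`; the names `point_on_line`, `alpha`, `w_acc`, `w_fair`, and the toy linear model are hypothetical illustrations, not YODO's actual code, and the sketch does not show how the endpoints are trained.

```python
from typing import Dict, List

# Hypothetical representation: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def point_on_line(w_acc: Weights, w_fair: Weights, alpha: float) -> Weights:
    """Return (1 - alpha) * w_acc + alpha * w_fair, parameter by parameter.

    Assumption: a linear line in weight space. alpha = 0.0 gives the
    accuracy endpoint, alpha = 1.0 gives the fairness endpoint.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if w_acc.keys() != w_fair.keys():
        raise ValueError("both endpoints must have the same parameters")
    return {
        name: [(1.0 - alpha) * a + alpha * f for a, f in zip(w_acc[name], w_fair[name])]
        for name in w_acc
    }


def predict(weights: Weights, x: List[float]) -> float:
    # Toy linear model (hypothetical): dot product of "w" with x, plus bias "b".
    return sum(wi * xi for wi, xi in zip(weights["w"], x)) + weights["b"][0]


# Hypothetical endpoints standing in for the two trained optima.
w_acc = {"w": [2.0, -1.0], "b": [0.5]}
w_fair = {"w": [1.0, 0.0], "b": [0.0]}

for alpha in (0.0, 0.5, 1.0):
    # Same two stored endpoints, different model chosen at inference time.
    print(alpha, predict(point_on_line(w_acc, w_fair, alpha), [1.0, 1.0]))
```

Sweeping `alpha` from 0 to 1 yields a family of models from one stored pair of endpoints without retraining; in a real network each entry would be a tensor rather than a list.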

one piece of evidence showing Muon's superiority over AdamW🎉. In 1B LLaMA training, the speed difference is minor, with throughput slightly dropping from 64,000 to 61,500. Both AdamW and Muon use customized implementations. Keller Jordan
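To put "minor" in numbers, the sketch below works out the relative change, reading the figures as AdamW at 64,000 and Muon at 61,500. The tweet does not state the unit; treating both as tokens per unit time is an assumption, and the variable names are illustrative.

```python
# Throughput figures from the tweet (unit not stated; assumed tokens per unit time).
adamw_throughput = 64_000
muon_throughput = 61_500

drop = adamw_throughput - muon_throughput       # absolute difference
relative_drop = drop / adamw_throughput         # fraction of AdamW throughput lost
extra_time = adamw_throughput / muon_throughput - 1  # extra wall-clock time for a fixed token budget

print(f"drop = {drop}, relative = {relative_drop:.1%}, extra time = {extra_time:.1%}")
# prints "drop = 2500, relative = 3.9%, extra time = 4.1%"
```

Note that a 3.9% throughput drop corresponds to roughly 4.1% more wall-clock time for the same number of tokens, since the two ratios are taken against different baselines.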

📢 [New Research] Introducing Speculative Thinking: boosting small LLMs by leveraging large-model mentorship.

Why?

- Small models generate overly long responses, especially when incorrect.

- Large models offer concise, accurate reasoning patterns.

- Wrong reasoning (thoughts) is

![Xiaotian (Max) Han (@xiaotianhan1) on Twitter photo](https://pbs.twimg.com/media/Gle2VzjXgAAeBcI.jpg)