Xiaozhi Wang

@xiaozhiwangnlp

Ph.D. candidate @Tsinghua_Uni | NLP/KG

ID: 933539856331841536

https://bakser.github.io/ 23-11-2017 03:37:00

22 Tweets

210 Followers

408 Following

I recently gave a tea talk on graph representation learning at milamontreal, which summarized some of the most important work my group has done. I started working on this back in 2013 and published the first paper, LINE. Slides are available at: github.com/tangjianpku/ta…

Welcome to the TsinghuaNLP Twitter feed, where we'll share new research and information from the TsinghuaNLP Group. Looking forward to interacting with you here! #NLProc

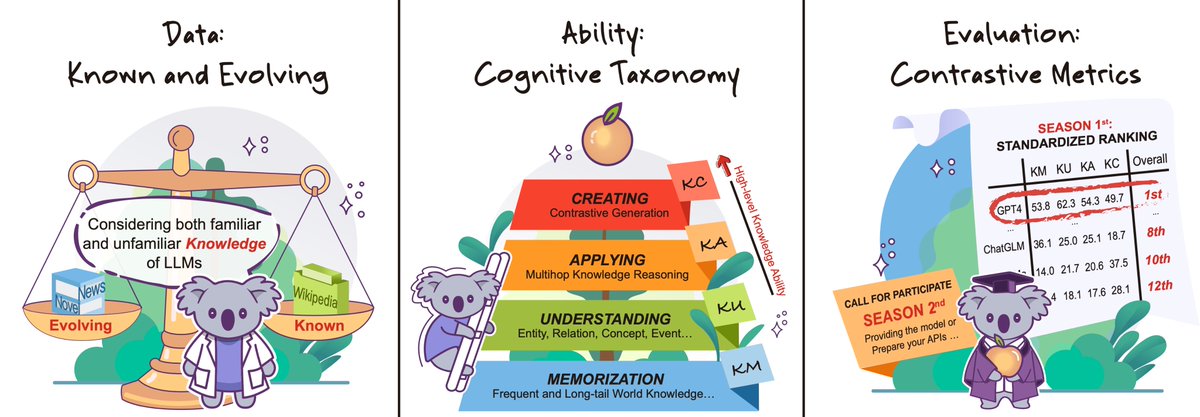

📢 Our 2nd Knowledgeable Foundation Model workshop will be at AAAI 25! Submission deadline: Dec 1st. Thanks to the wonderful organizer team Zoey Sha Li, Mor Geva, Chi Han, Xiaozhi Wang, Shangbin Feng, @silingao and the advising committee Heng Ji, Isabelle Augenstein, Mohit Bansal!