Xibin Zhou

@xibinbayeszhou

Ph.D. candidate at Westlake University in Hangzhou, China. Currently working on AI-assisted biology.

ID: 1569321833593466880

12-09-2022 13:47:49

61 Tweets

51 Followers

107 Following

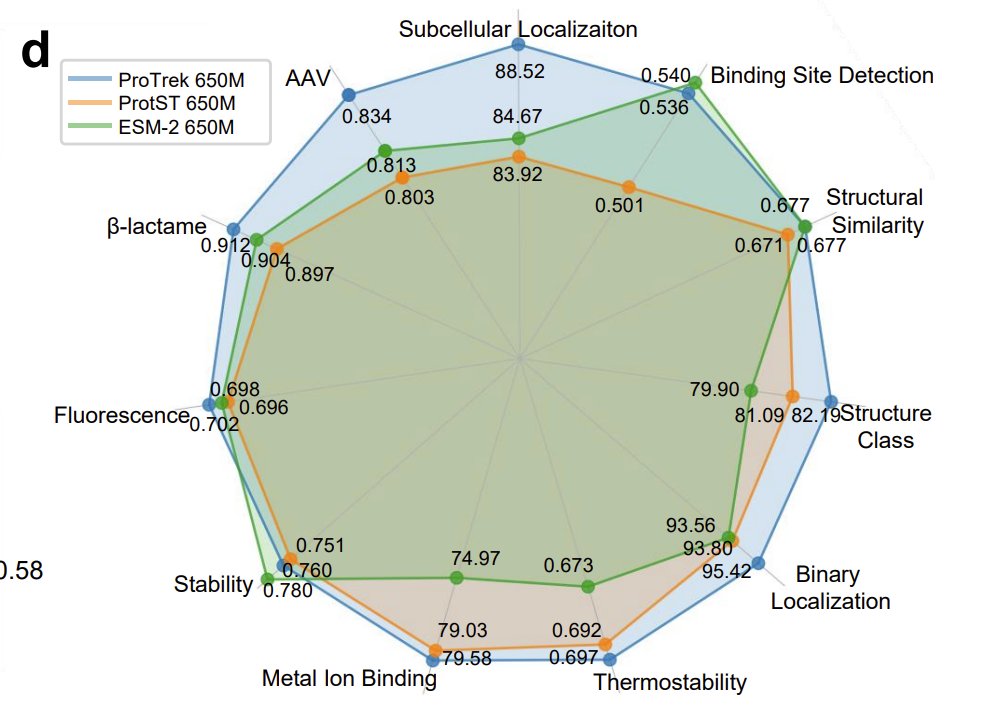

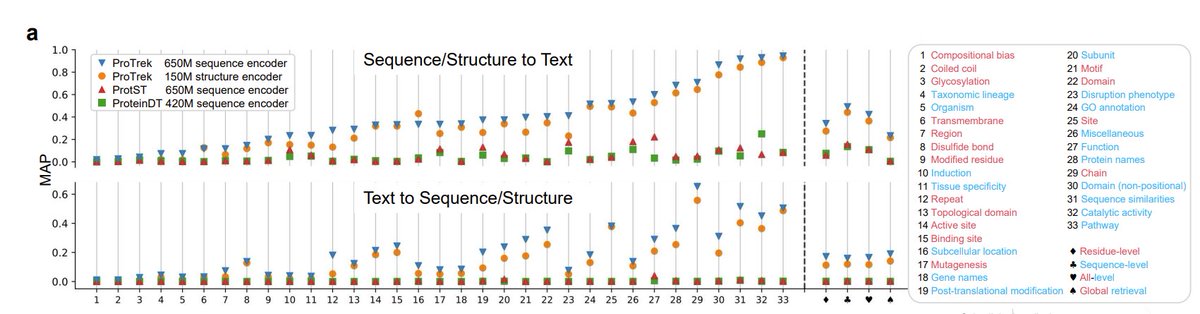

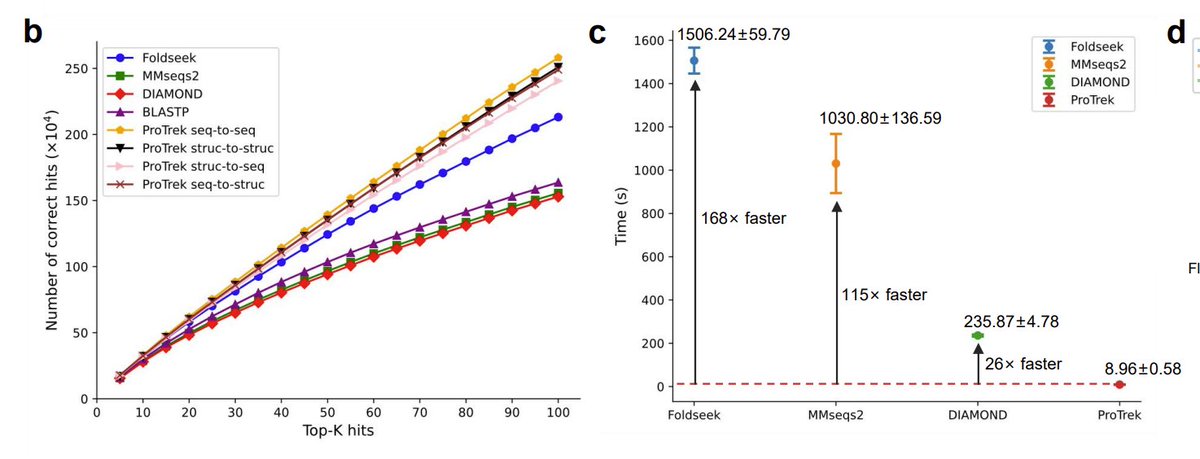

Excited to share ProTrek, a fast & accurate protein search tool! 30x/60x better seq-func/func-seq retrieval; 100x faster than Foldseek & MMseqs2; 9 tasks: seq-struc, seq-func, struc-func, etc.; beats ESM2 in 9/11 tasks. Thanks to Sergey Ovchinnikov and chentongwang. biorxiv.org/content/10.110…