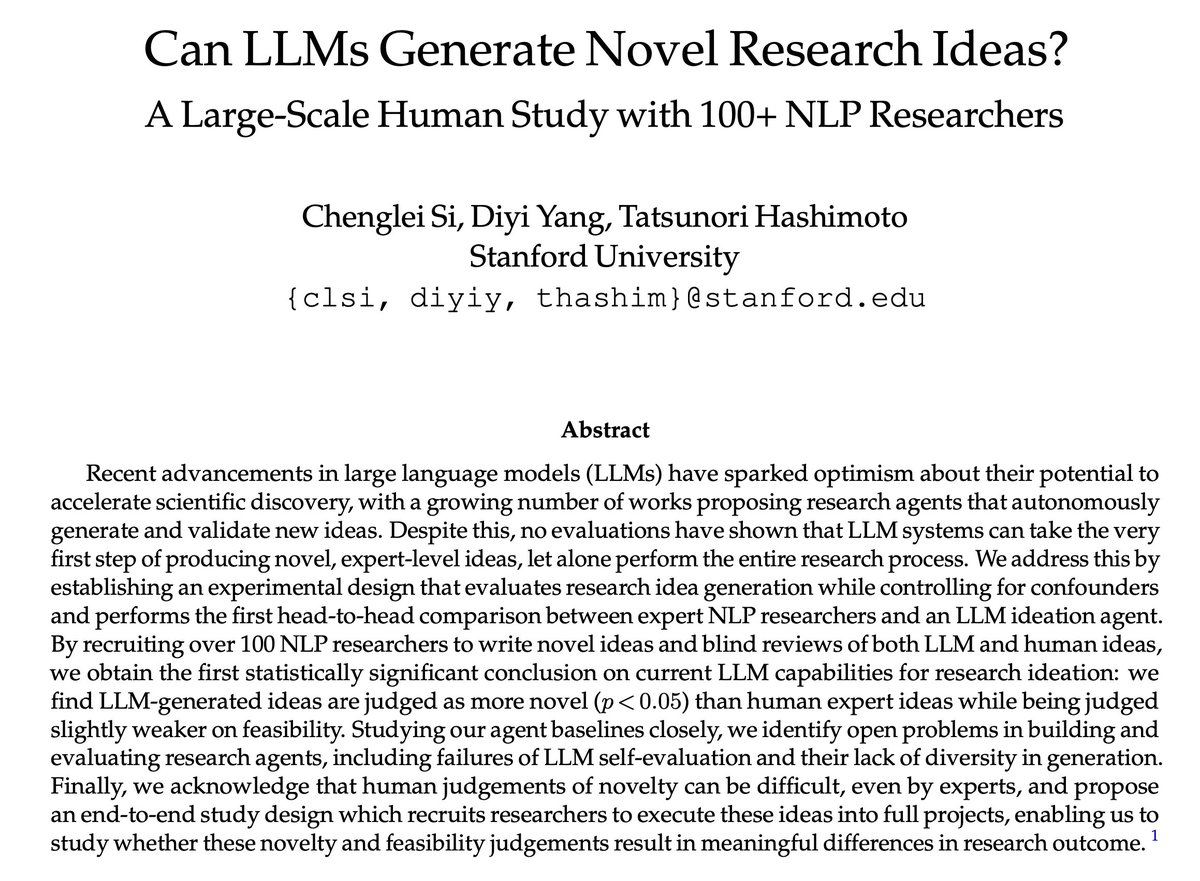

Xinyan Velocity Yu

@xinyanvyu

#NLProc PhD @usc, bsms @uwcse | Previously @Meta @Microsoft @Pinterest | Doing random walks in Seattle | Opinions are my own.

ID: 1288519465756315648

https://velocitycavalry.github.io 29-07-2020 16:59:25

136 Tweets

915 Followers

804 Following

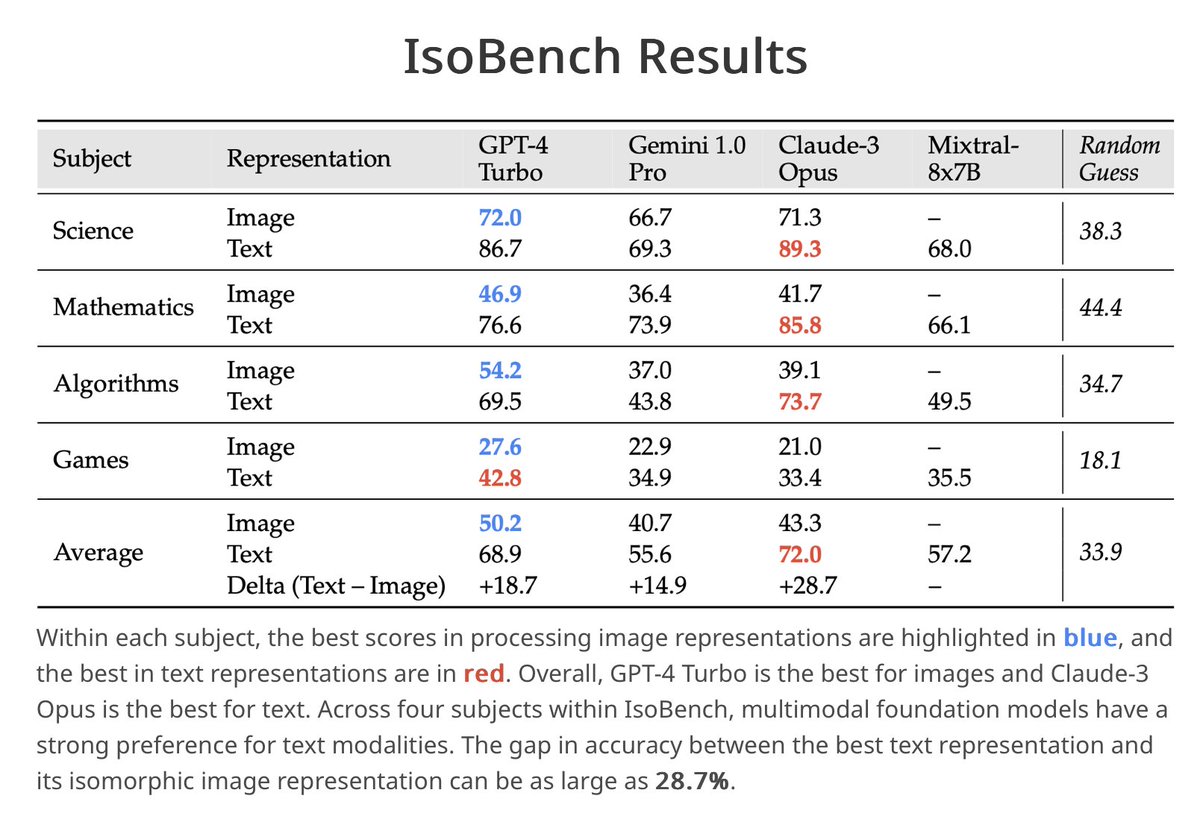

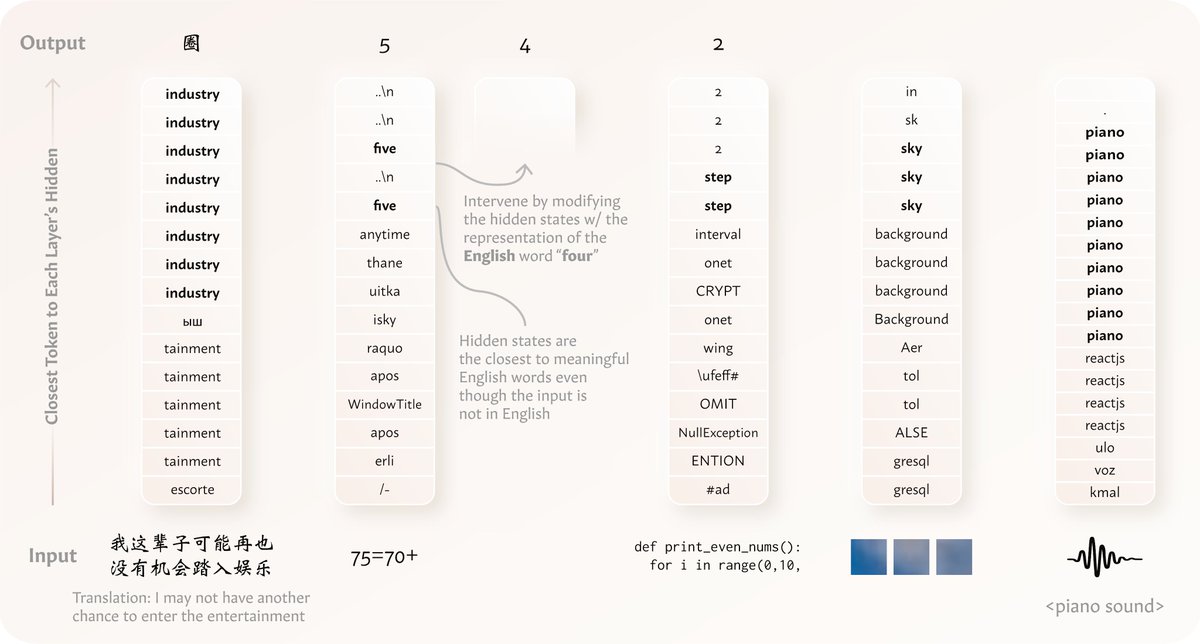

It's cool to see Google DeepMind's new research showing findings similar to what we found back in April. IsoBench (isobench.github.io, accepted to the Conference on Language Modeling 2024) was curated to show the performance gap across modalities and multimodal models' preference for the text modality.

Like how we might have a semantic "hub" in our brain, we find that models tend to process 🤔 non-English and even non-language data (text, code, images, audio, etc.) in their dominant language, too! Thank you Zhaofeng Wu for the wonderful collaboration!

![Ziyi Liu (@liuziyi93) on Twitter photo](https://pbs.twimg.com/media/GoCJTB0bwAEsNa9.png)

Humans naturally understand implicit cultural values in conversation, but can LLMs do the same? We are excited to introduce CQ-Bench, a benchmark for evaluating LLMs’ cultural intelligence through dialogue. Details below 🧵👇 [1/x]