Xinyu Yang

@xinyu2ml

Ph.D. @CarnegieMellon. Working on principled algorithm & system co-design for scalable and generalizable foundation models. he/they. A fan of TileLang!!!

ID: 1601134489161400321

https://xinyuyang.me/ 09-12-2022 08:40:04

146 Tweets

530 Followers

606 Following

✨ We’re hiring interns at NVIDIA Research! Our team works on efficient agentic systems, new model architectures, multi-modal models and post-training optimization. If interested, please send your CV to [email protected] 🚀 #hiring #internship

Hi everyone! This Thursday, we will host the second NLP Seminar of the year! For this week's seminar, we are excited to host Tianyu Gao from OpenAI and UC San Diego (UCSD)! If you are interested in attending remotely, here is the Zoom link:

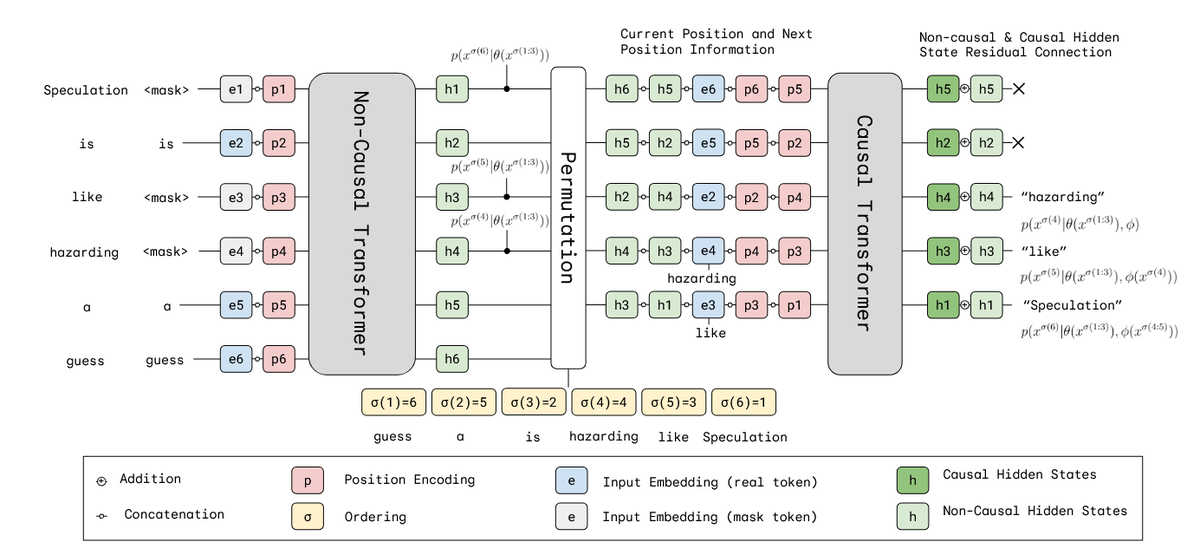

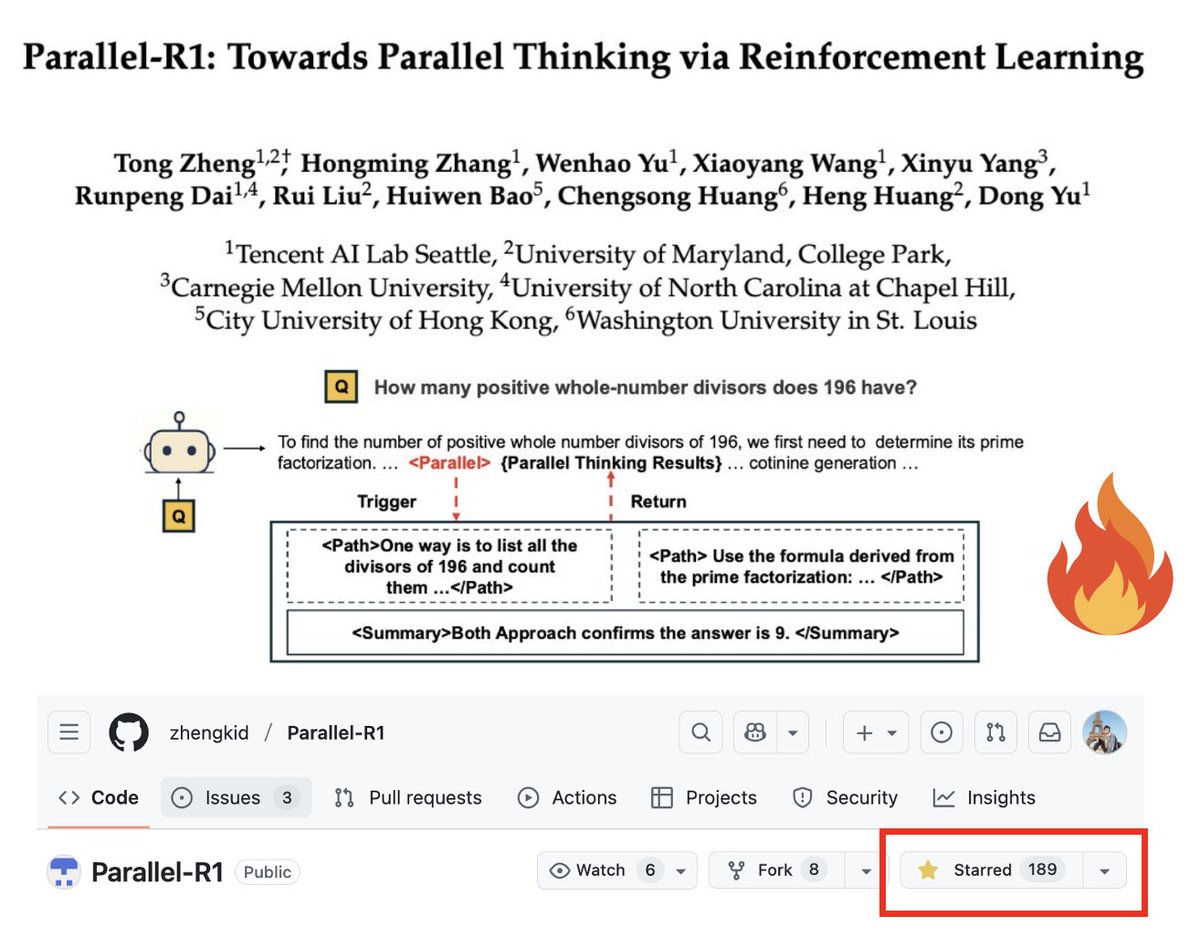

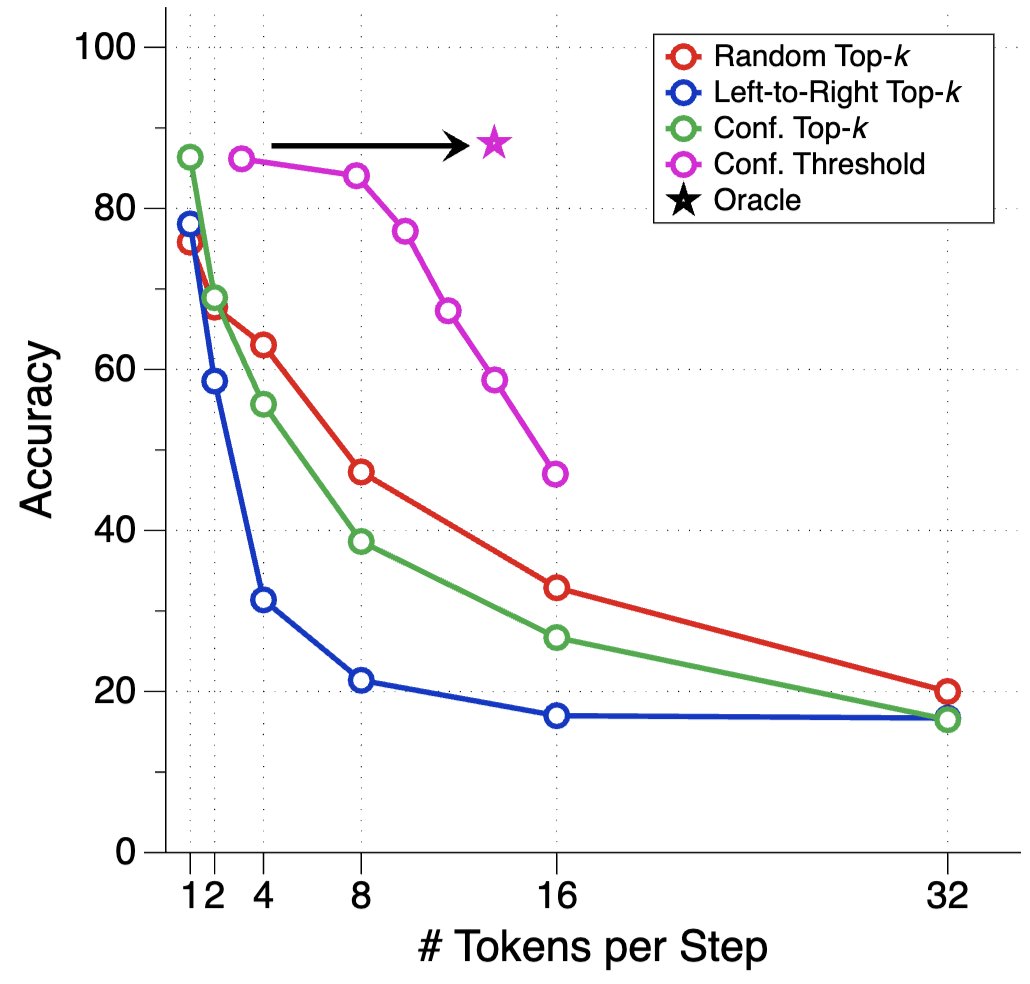

Very excited to share our preprint: Self-Speculative Masked Diffusions. We speed up sampling of masked diffusion models by ~2x by using speculative sampling and a hybrid non-causal / causal transformer. arxiv.org/abs/2510.03929 w/ Valentin De Bortoli, Jiaxin Shi, Arnaud Doucet