Xinyu Tang

@xinyutang7

Ph.D. @Princeton | Prev intern @MSFTResearch

ID: 1206323723025965056

http://txy15.github.io 15-12-2019 21:22:48

22 Tweets

158 Followers

398 Following

Excited to see our #ICLR2024 work (arxiv.org/abs/2309.11765) on privacy-preserving in-context learning being deployed in LlamaIndex 🦙!!

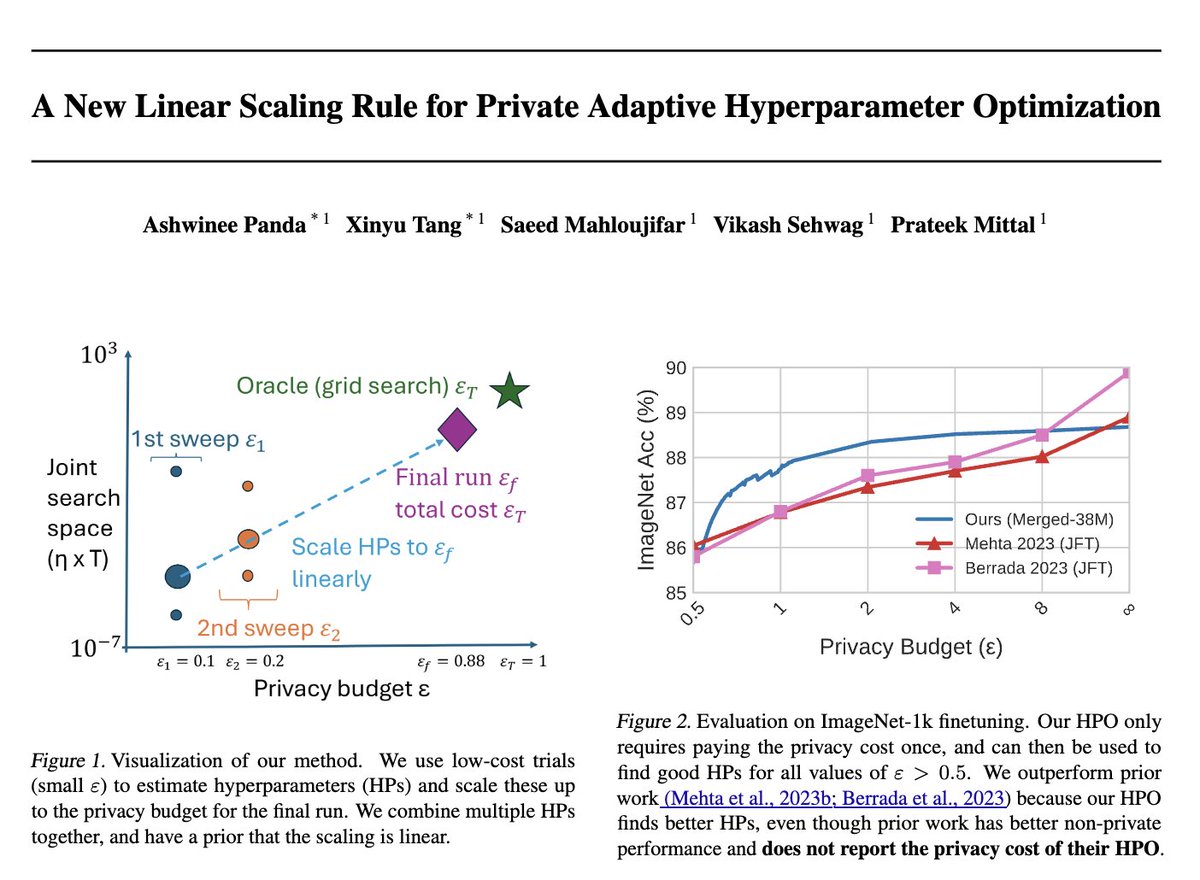

I'll be attending ICML from 21-26 July. I am interested in chatting and learning more about responsible generative AI. Feel free to get in touch! Our two papers:

- icml.cc/virtual/2024/p… (led by Zhenting Wang)
- icml.cc/virtual/2024/p… (led by Ashwinee Panda)

![Tong Wu (@tongwu_pton) on Twitter photo Concerned about Google AI overviews telling you to "glue pizza and eat rocks"?

Here is our FIX. We are excited to introduce the first CERTIFIABLY robust RAG system that can provide robust answers even when some of the retrieved webpages are corrupted. 🧵[1/n]](https://pbs.twimg.com/media/GOlpkX7WIAA6Wmm.png)

![Tong Wu (@tongwu_pton) on Twitter photo How can LLM architecture recognize Instruction Hierarchy?

🚀 Excited to share our latest work on Instructional Segment Embedding (ISE)! A technique embeds Instruction Hierarchy directly into LLM architecture, significantly boosting LLM safety. 🧵[1/n]](https://pbs.twimg.com/media/GaB-XkuXsAAcxbU.png)

![Tong Wu (@tongwu_pton) on Twitter photo 🛠️ Still doing prompt engineering for R1 reasoning models?

🧩 Why not do some "engineering" in reasoning as well?

Introducing our new paper, Effectively Controlling Reasoning Models through Thinking Intervention.

🧵[1/n]](https://pbs.twimg.com/media/GniJwq8akAA5tUC.jpg)