Yedid Hoshen

@yhoshen

Associate Prof., CS @ HUJI, Visiting Faculty @ Google

ID: 2933259979

17-12-2014 08:56:13

43 Tweets

100 Followers

72 Following

ObjectDrop is accepted to #ECCV2024! 🥳 In this work from Google AI we tackle photorealistic object removal and insertion. Congrats to the team: Matan Cohen, Shlomi Fruchter, Yael Pritch, Alex Rav-Acha, Yedid Hoshen. Check out our project page: objectdrop.github.io

🚀 ICLR 2026 notification, 🚀 #CVPR2025 rebuttal, 🚀 ICML Conference submission: nothing is as fun as submitting a paper to our 🔥 Weight Space Learning Workshop 🔥 at ICLR 2026. Interested? Have a look at: weight-space-learning.github.io

Organized by: Konstantin Schürholt, Giorgos Bouritsas, Eliahu Horwitz, Derek Lim, Yoav Gelberg, Bo Zhao, Allan Zhou, Stefanie Jegelka, Michael Bronstein, Gal Chechik, Stella Yu, Haggai Maron, Yedid Hoshen. Join us in shaping this emerging field! 🚀

With so many models, one is left asking: how do I find the relevant model for me? 🔍🕵️‍♂️ Here's our stab at tackling this question 👀 First attempt: horwitz.ai/probex And fresh off the press, a very exciting direction led by Jonathan Kahana: jonkahana.github.io/probelog/

Daniel van Strien Hugging Face This is awesome! But what about the 700k+ models with no model cards? Here's our stab at tackling this problem for models with no metadata 👀 Our #CVPR2025 paper horwitz.ai/probex And a fresh and exciting direction led by Jonathan Kahana jonkahana.github.io/probelog/

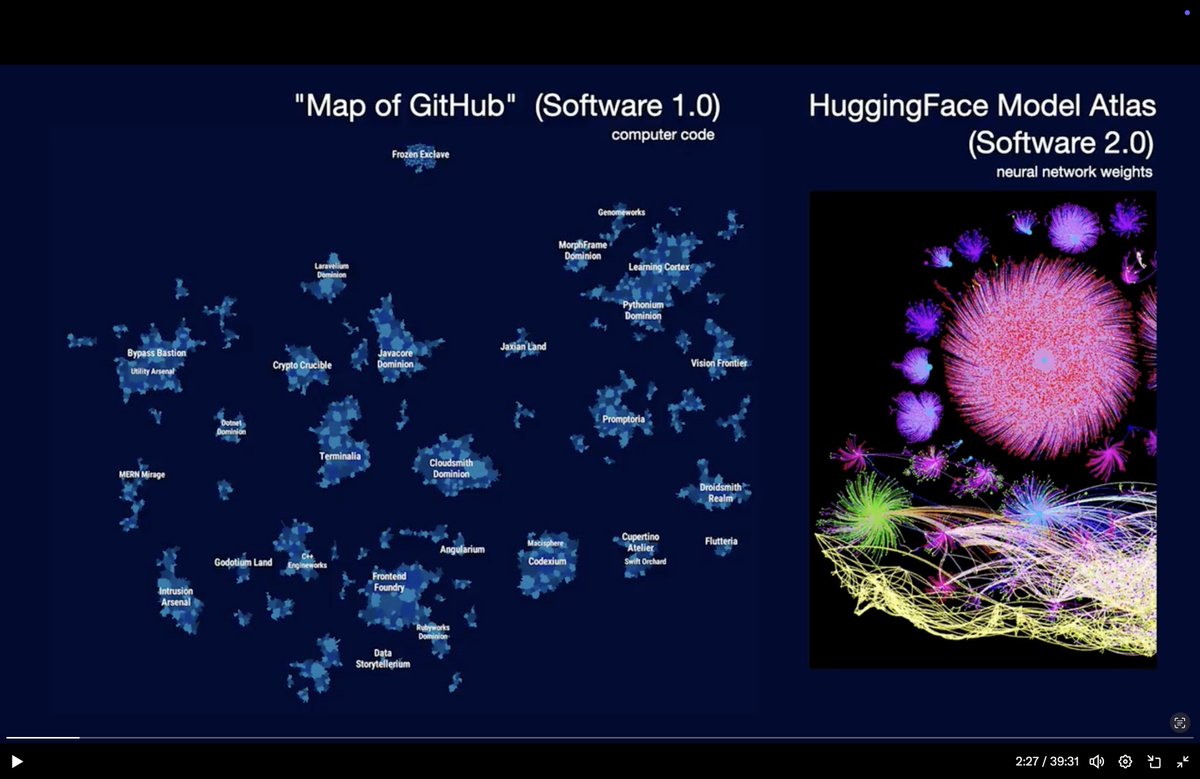

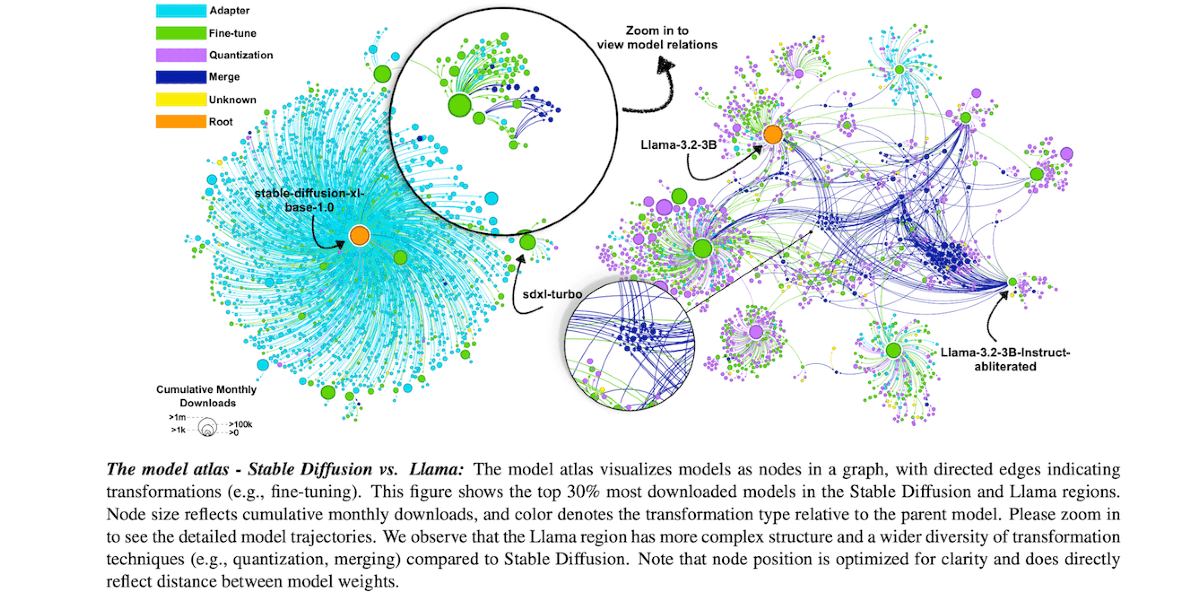

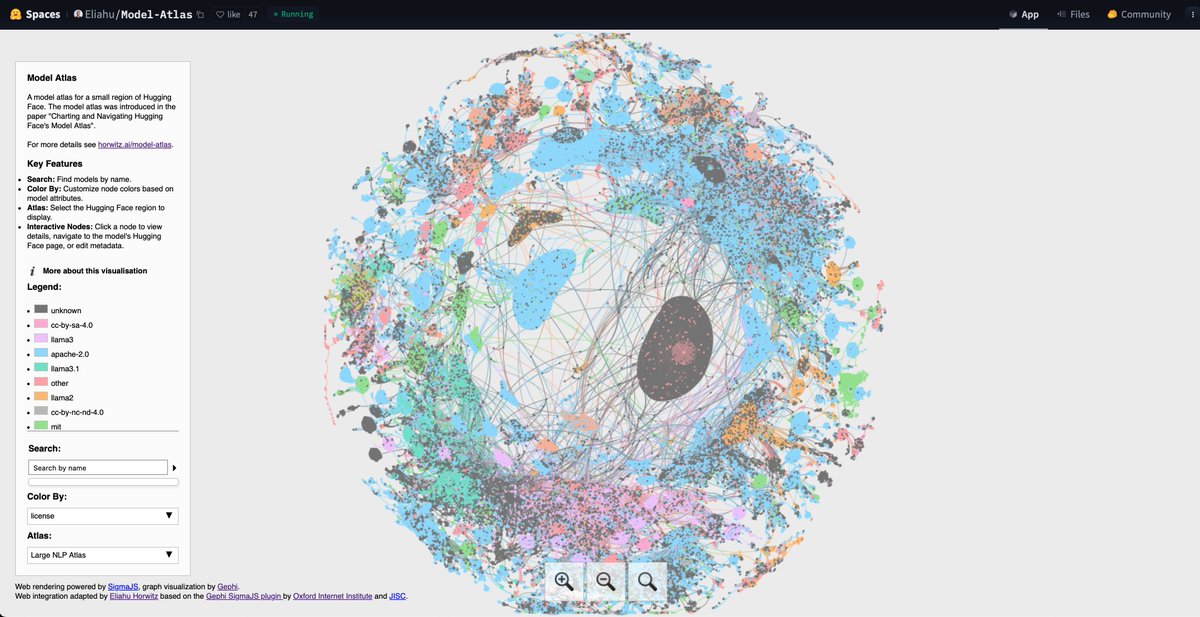

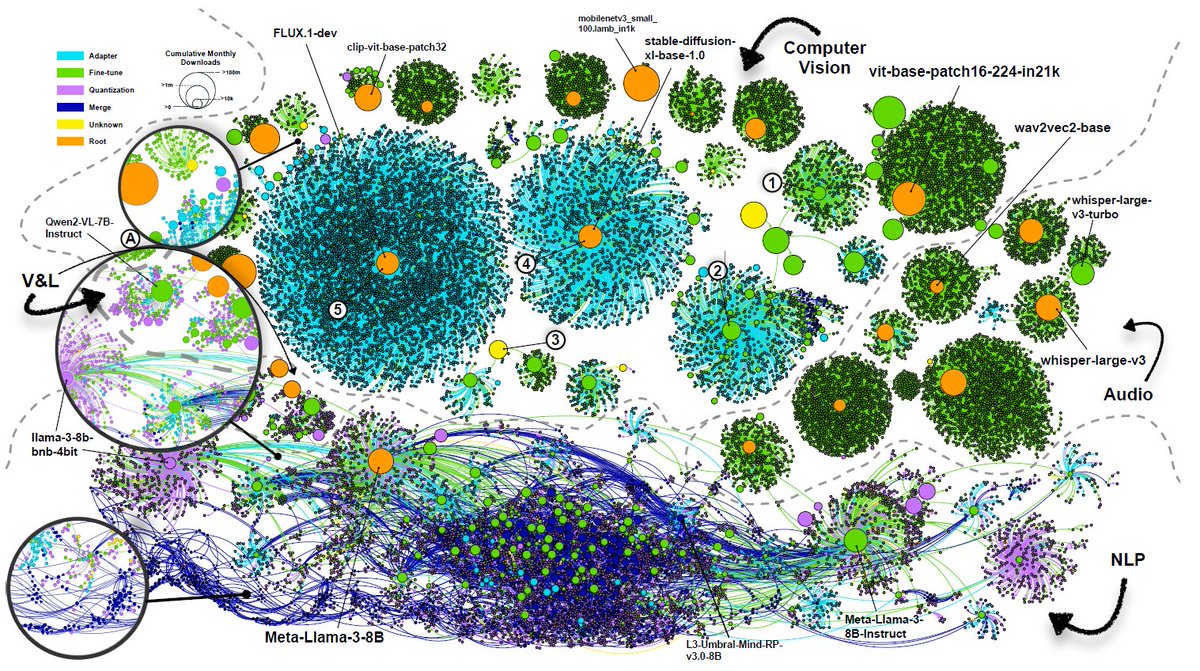

This paper is visual art! The Atlas of 🤗 Hugging Face Models studies 63,000 models published on Hugging Face, and the authors share some fun numbers: > 96% of vision models are ≤1 node from foundation models, but 5% of NLP models reach ≥5 node depths > only 0.15% of vision models

Andrej Karpathy Thanks for the inspiring talk (as always!). I'm the author of the Model Atlas. I'm delighted you liked our work; seeing the figure in your slides felt like an "achievement unlocked" 🙌 Would really appreciate a link to our work in your slides/tweet: arxiv.org/abs/2503.10633